I'm not quite sure how to do that.

If you mean the rke2-server logs on the node:

journalctl -f -u rke2-server

[root@kvm151 ~]# journalctl -f -u rke2-server

-- Logs begin at Mon 2024-08-26 21:52:24 EDT. --

8月 27 00:00:02 kvm151 rke2[869]: {"level":"info","ts":"2024-08-27T00:00:02.237879-0400","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/var/lib/rancher/rke2/server/db/snapshots/etcd-snapshot-kvm151-1724731202.part"}

8月 27 00:00:02 kvm151 rke2[869]: {"level":"info","ts":"2024-08-27T00:00:02.239663-0400","logger":"client","caller":"v3@v3.5.9-k3s1/maintenance.go:212","msg":"opened snapshot stream; downloading"}

8月 27 00:00:02 kvm151 rke2[869]: {"level":"info","ts":"2024-08-27T00:00:02.240378-0400","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"https://127.0.0.1:2379"}

8月 27 00:00:02 kvm151 rke2[869]: {"level":"info","ts":"2024-08-27T00:00:02.341515-0400","logger":"client","caller":"v3@v3.5.9-k3s1/maintenance.go:220","msg":"completed snapshot read; closing"}

8月 27 00:00:02 kvm151 rke2[869]: {"level":"info","ts":"2024-08-27T00:00:02.353939-0400","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"https://127.0.0.1:2379","size":"11 MB","took":"now"}

8月 27 00:00:02 kvm151 rke2[869]: {"level":"info","ts":"2024-08-27T00:00:02.354017-0400","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/var/lib/rancher/rke2/server/db/snapshots/etcd-snapshot-kvm151-1724731202"}

8月 27 00:00:02 kvm151 rke2[869]: time="2024-08-27T00:00:02-04:00" level=info msg="Saving snapshot metadata to /var/lib/rancher/rke2/server/db/.metadata/etcd-snapshot-kvm151-1724731202"

8月 27 00:00:02 kvm151 rke2[869]: time="2024-08-27T00:00:02-04:00" level=info msg="Applying snapshot retention=5 to local snapshots with prefix etcd-snapshot in /var/lib/rancher/rke2/server/db/snapshots"

8月 27 00:00:02 kvm151 rke2[869]: time="2024-08-27T00:00:02-04:00" level=info msg="Reconciling ETCDSnapshotFile resources"

8月 27 00:00:02 kvm151 rke2[869]: time="2024-08-27T00:00:02-04:00" level=info msg="Reconciliation of ETCDSnapshotFile resources complete"

If you mean the logs of the Rancher service:

docker logs rancher

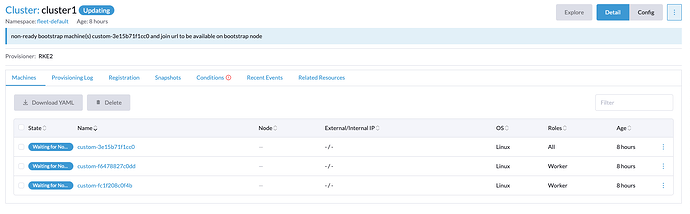

2024/08/27 06:33:49 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:34:23 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-t6fbg exited 123

2024/08/27 06:34:27 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-6s2jz exited 123

W0827 06:37:42.124782 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.187241 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195398 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195414 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195432 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195448 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195451 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195470 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195477 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195481 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195489 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195506 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195514 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195528 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195533 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195532 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195541 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195545 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195562 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.195566 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.597342 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.597369 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:42.597401 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.218582 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.241896 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.241916 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.242002 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.242019 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.242528 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.242536 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.242542 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:43.242555 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.008755 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.008773 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.008784 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.008807 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.008820 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.012071 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.012077 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.013187 61 transport.go:313] Unable to cancel request for *client.addQuery

W0827 06:37:44.023524 61 transport.go:313] Unable to cancel request for *client.addQuery

2024/08/27 06:38:35 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:38:35 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:39:23 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-8gmbf exited 123

2024/08/27 06:39:27 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-xcdkh exited 123

2024/08/27 06:43:40 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:43:40 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:43:51 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:44:22 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-q5tkt exited 123

2024/08/27 06:44:26 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-64xk6 exited 123

2024/08/27 06:48:46 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:48:46 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:49:19 [ERROR] error syncing 'cattle-fleet-system/helm-operation-6wvjg': handler helm-operation: Operation cannot be fulfilled on operations.catalog.cattle.io "helm-operation-6wvjg": StorageError: invalid object, Code: 4, Key: /registry/catalog.cattle.io/operations/cattle-fleet-system/helm-operation-6wvjg, ResourceVersion: 0, AdditionalErrorMsg: Precondition failed: UID in precondition: 4eb73d43-8a6b-4551-be3c-3e84fdb5616d, UID in object meta: , requeuing

2024/08/27 06:49:23 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-6qxdj exited 123

2024/08/27 06:49:27 [ERROR] Failed to install system chart fleet: failed to install , pod cattle-system/helm-operation-vmdhc exited 123

2024/08/27 06:53:53 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:53:53 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

2024/08/27 06:53:53 [INFO] [planner] rkecluster fleet-default/cluster1: non-ready bootstrap machine(s) custom-3e15b71f1cc0 and join url to be available on bootstrap node

If you mean the rancher-system-agent logs:

journalctl -f -u rancher-system-agent

-- Logs begin at Mon 2024-08-26 21:52:24 EDT. --

8月 27 02:49:15 kvm151 rancher-system-agent[4573]: W0827 02:49:15.399624 4573 reflector.go:443] pkg/mod/github.com/rancher/client-go@v1.24.0-rancher1/tools/cache/reflector.go:168: watch of *v1.Secret ended with: an error on the server ("unable to decode an event from the watch stream: stream error: stream ID 143; INTERNAL_ERROR; received from peer") has prevented the request from succeeding

8月 27 02:50:45 kvm151 rancher-system-agent[4573]: W0827 02:50:45.937104 4573 reflector.go:443] pkg/mod/github.com/rancher/client-go@v1.24.0-rancher1/tools/cache/reflector.go:168: watch of *v1.Secret ended with: an error on the server ("unable to decode an event from the watch stream: stream error: stream ID 147; INTERNAL_ERROR; received from peer") has prevented the request from succeeding

8月 27 02:52:43 kvm151 rancher-system-agent[4573]: W0827 02:52:43.055210 4573 reflector.go:443] pkg/mod/github.com/rancher/client-go@v1.24.0-rancher1/tools/cache/reflector.go:168: watch of *v1.Secret ended with: an error on the server ("unable to decode an event from the watch stream: stream error: stream ID 151; INTERNAL_ERROR; received from peer") has prevented the request from succeeding

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[Applyinator] No image provided, creating empty working directory /var/lib/rancher/agent/work/20240827-025353/56c57b7165a002240d397da3e12a16dee7e36762938262947feaf3b178560f77_0"

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[Applyinator] Running command: sh [-c rke2 etcd-snapshot list --etcd-s3=false 2>/dev/null]"

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[56c57b7165a002240d397da3e12a16dee7e36762938262947feaf3b178560f77_0:stdout]: Name Location Size Created"

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[56c57b7165a002240d397da3e12a16dee7e36762938262947feaf3b178560f77_0:stdout]: etcd-snapshot-kvm151-1724716802 file:///var/lib/rancher/rke2/server/db/snapshots/etcd-snapshot-kvm151-1724716802 18128928 2024-08-26T20:00:02-04:00"

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[56c57b7165a002240d397da3e12a16dee7e36762938262947feaf3b178560f77_0:stdout]: etcd-snapshot-kvm151-1724731202 file:///var/lib/rancher/rke2/server/db/snapshots/etcd-snapshot-kvm151-1724731202 10928160 2024-08-27T00:00:02-04:00"

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[Applyinator] Command sh [-c rke2 etcd-snapshot list --etcd-s3=false 2>/dev/null] finished with err: <nil> and exit code: 0"

8月 27 02:53:53 kvm151 rancher-system-agent[4573]: time="2024-08-27T02:53:53-04:00" level=info msg="[K8s] updated plan secret fleet-default/custom-3e15b71f1cc0-machine-plan with feedback"

8月 27 02:56:14 kvm151 rancher-system-agent[4573]: W0827 02:56:14.496248 4573 reflector.go:443] pkg/mod/github.com/rancher/client-go@v1.24.0-rancher1/tools/cache/reflector.go:168: watch of *v1.Secret ended with: an error on the server ("unable to decode an event from the watch stream: stream error: stream ID 155; INTERNAL_ERROR; received from peer") has prevented the request from succeeding