Deployment plan:

| Hostname | Server IP | Role | config.yaml setting | Notes |
|---|---|---|---|---|
| master1 | 192.168.16.2 | rke2 server node | no `server` entry | server nodes are load-balanced through nginx |
| master2 | 192.168.16.3 | rke2 server node | server:https://192.168.1.7:9435 | server nodes are load-balanced through nginx |
| master3 | 192.168.16.4 | rke2 server node | server:https://192.168.1.7:9435 | server nodes are load-balanced through nginx |
| node1 | 192.168.16.5 | rke2 agent node | server:https://192.168.1.7:9435 | |
| node2 | 192.168.16.6 | rke2 agent node | server:https://192.168.1.7:9435 | |
| other1 | 192.168.16.68 | nginx, single-node Rancher | | load balancer for the rke2 servers; Docker + single-node Rancher |
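
For reference, the `config.yaml` column above could correspond to files roughly like the following. This is only a sketch under assumptions: the `token` value is a placeholder, the `tls-san` entry is an assumed addition that is not part of the original setup, and the path shown is the default RKE2 config location. The two YAML documents stand for two separate files on different hosts.

```yaml
# /etc/rancher/rke2/config.yaml on master1 (first server, no `server` entry)
token: "<shared-cluster-token>"   # placeholder, not from the original post
tls-san:
  - 192.168.1.7                   # assumption: add the load balancer address to the certificate SANs
---
# /etc/rancher/rke2/config.yaml on master2 and master3 (joining servers)
server: https://192.168.1.7:9435  # address and port exactly as listed in the table
token: "<shared-cluster-token>"   # must match the token used by master1
```

The agent nodes (node1, node2) would use the same `server` and `token` keys, without `tls-san`.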

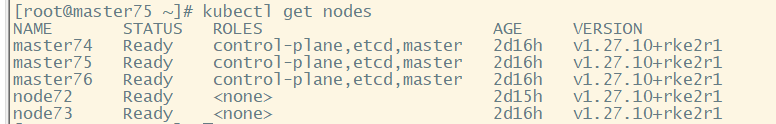

After deployment, the cluster starts up normally.

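Single-node Rancher and nginx are deployed on the same host, and the master nodes were deployed with the nginx service IP as their `server` address. The problem is that with this setup, cattle-cluster-agent on the master nodes cannot connect to Rancher. The exact errors are: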

time="2024-03-11T01:21:06Z" level=info msg="Connecting to wss://192.168.16.68:8443/v3/connect/register with token starting with 6jdnv22qfcj7zf5g4xjjxh6r69h"

time="2024-03-11T01:21:06Z" level=info msg="Connecting to proxy" url="wss://192.168.16.68:8443/v3/connect/register"

time="2024-03-11T01:21:06Z" level=error msg="Failed to connect to proxy. Response status: 400 - 400 Bad Request. Response body: cluster not found" error="websocket: bad handshake"

time="2024-03-11T01:21:06Z" level=error msg="Remotedialer proxy error" error="websocket: bad handshake"

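How should this be handled? Is it an RKE2 bug? When a master node is deployed with its `server` address set to the IP of an already-running master node, cattle-cluster-agent connects to Rancher normally. When the `server` address in the master node's config.yaml is the proxy server's address, cattle-cluster-agent cannot connect to Rancher.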