Oh, OK, I'll try again.

May 25 11:28:48 k8stest-10.novalocal rancher-system-agent[4918]: time="2023-05-25T11:28:48+08:00" level=warning msg="Failed to get image from endpoint: Get "https://194.1.1.107:8888/service/token?scope=repository%3Arancher%2Fsystem-agent-installer-rke2%3Apull&service=harbor-registry": http: server gave HTTP response to HTTPS client"

May 25 11:28:48 k8stest-10.novalocal rancher-system-agent[4918]: time="2023-05-25T11:28:48+08:00" level=warning msg="Failed to get image from endpoint: Get "https://194.1.1.107:8888/v2/": http: server gave HTTP response to HTTPS client"

May 25 11:28:48 k8stest-10.novalocal rancher-system-agent[4918]: time="2023-05-25T11:28:48+08:00" level=error msg="error while staging: all endpoints failed: Get "https://194.1.1.107:8888/service/token?scope=repository%3Arancher%2Fsystem-agent-installer-rke2%3Apull&service=harbor-registry": http: server gave HTTP response to HTTPS client; Get "https://194.1.1.107:8888/v2/": http: server gave HTTP response to HTTPS client: failed to get image 194.1.1.107:8888/rancher/system-agent-installer-rke2:v1.25.7-rke2r1"

May 25 11:28:48 k8stest-10.novalocal rancher-system-agent[4918]: time="2023-05-25T11:28:48+08:00" level=error msg="error executing instruction 0: all endpoints failed: Get "https://194.1.1.107:8888/service/token?scope=repository%3Arancher%2Fsystem-agent-installer-rke2%3Apull&service=harbor-registry": http: server gave HTTP response to HTTPS client; Get "https://194.1.1.107:8888/v2/": http: server gave HTTP response to HTTPS client: failed to get image 194.1.1.107:8888/rancher/system-agent-installer-rke2:v1.25.7-rke2r1"

May 25 11:28:48 k8stest-10.novalocal rancher-system-agent[4918]: time="2023-05-25T11:28:48+08:00" level=error msg="error encountered during parsing of last run time: parsing time "" as "Mon Jan _2 15:04:05 MST 2006": cannot parse "" as "Mon""

May 25 11:28:48 k8stest-10.novalocal rancher-system-agent[4918]: time=“2023-05-25T11:28:48+08:00” level=info msg="[Applyinator] No image provided, creating empty working directory /var/lib/rancher/agent/work/20230525-112848/6db820479a9f44aa4371f932dd5c7f95719abd0cb2fc6da8a72fc9d9aaea10f0_0"

Still getting the error: http: server gave HTTP response to HTTPS client

- On the rke2 node, run:

cat /etc/rancher/rke2/registries.yaml | jq .

cat /etc/rancher/rke2/config.yaml.d/50-rancher.yaml

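This shows the configuration that actually ended up in rke2.

- In the Rancher UI, edit the cluster as YAML, then post the container image registry configuration from it.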

1. [root@k8stest-10 ~]# cat /etc/rancher/rke2/registries.yaml

{"configs":{"194.1.1.107:8888":{"auth":{"username":"admin","password":"Wj123456","auth":"","identity_token":""},"tls":null}},"mirrors":{"194.1.1.107:8888":{"endpoint":["http://194.1.1.107:8888"]}}}

[root@k8stest-10 ~]# cat /etc/rancher/rke2/config.yaml.d/50-rancher.yaml

{
  "agent-token": "8hjw9d7h55vsm2d6cjv4lr7dvpktcnf25bhkjr5mpql6tw4zr8ml8k",
  "cni": "calico",
  "disable-kube-proxy": false,
  "etcd-expose-metrics": false,
  "etcd-snapshot-retention": 5,
  "etcd-snapshot-schedule-cron": "0 */5 * * *",
  "kube-controller-manager-arg": [
    "cert-dir=/var/lib/rancher/rke2/server/tls/kube-controller-manager",
    "secure-port=10257"
  ],
  "kube-controller-manager-extra-mount": [
    "/var/lib/rancher/rke2/server/tls/kube-controller-manager:/var/lib/rancher/rke2/server/tls/kube-controller-manager"
  ],
  "kube-scheduler-arg": [
    "cert-dir=/var/lib/rancher/rke2/server/tls/kube-scheduler",
    "secure-port=10259"
  ],
  "kube-scheduler-extra-mount": [
    "/var/lib/rancher/rke2/server/tls/kube-scheduler:/var/lib/rancher/rke2/server/tls/kube-scheduler"
  ],
  "node-label": [
    "cattle.io/os=linux",
    "rke.cattle.io/machine=5e17205d-2638-4f38-8c84-edcd3cd2cae5"
  ],
  "node-taint": [
    "node-role.kubernetes.io/control-plane:NoSchedule",
    "node-role.kubernetes.io/etcd:NoExecute"
  ],
  "private-registry": "/etc/rancher/rke2/registries.yaml",
  "protect-kernel-defaults": false,
  "system-default-registry": "194.1.1.107:8888",
  "token": "cm8pn6csdh976mpjnvl5gmc5cn2k7tnxd8b7wgz5wdz4r92mnlxrsm"
}

2.

apiVersion: provisioning.cattle.io/v1
kind: Cluster
metadata:
  annotations:
    field.cattle.io/creatorId: user-n29vw
    field.cattle.io/description: mc
  creationTimestamp: '2023-05-25T03:28:42Z'
  finalizers:
    - wrangler.cattle.io/cloud-config-secret-remover
    - wrangler.cattle.io/provisioning-cluster-remove
    - wrangler.cattle.io/rke-cluster-remove
  generation: 1
  managedFields:
    - apiVersion: provisioning.cattle.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:finalizers:
            .: {}
            v:"wrangler.cattle.io/cloud-config-secret-remover": {}
      manager: rancher-v2.7.3-secret-migrator
      operation: Update
      time: '2023-05-25T03:28:42Z'
    - apiVersion: provisioning.cattle.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            .: {}
            f:field.cattle.io/description: {}
          f:finalizers:
            v:"wrangler.cattle.io/provisioning-cluster-remove": {}
            v:"wrangler.cattle.io/rke-cluster-remove": {}
        f:spec:
          .: {}
          f:defaultPodSecurityAdmissionConfigurationTemplateName: {}
          f:defaultPodSecurityPolicyTemplateName: {}
          f:kubernetesVersion: {}
          f:localClusterAuthEndpoint:
            .: {}
            f:caCerts: {}
            f:enabled: {}
            f:fqdn: {}
          f:rkeConfig:
            .: {}
            f:chartValues:
              .: {}
              f:rke2-calico: {}
            f:etcd:
              .: {}
              f:disableSnapshots: {}
              f:s3: {}
              f:snapshotRetention: {}
              f:snapshotScheduleCron: {}
            f:machineGlobalConfig:
              .: {}
              f:cni: {}
              f:disable-kube-proxy: {}
              f:etcd-expose-metrics: {}
            f:machinePools: {}
            f:machineSelectorConfig: {}
            f:registries:
              .: {}
              f:configs:
                .: {}
                f:194.1.1.107:8888:
                  .: {}
                  f:authConfigSecretName: {}
                  f:caBundle: {}
                  f:insecureSkipVerify: {}
                  f:tlsSecretName: {}
              f:mirrors:
                .: {}
                f:194.1.1.107:8888:
                  .: {}
                  f:endpoint: {}
                  f:rewrite: {}
            f:upgradeStrategy:
              .: {}
              f:controlPlaneConcurrency: {}
              f:controlPlaneDrainOptions:
                .: {}
                f:deleteEmptyDirData: {}
                f:disableEviction: {}
                f:enabled: {}
                f:force: {}
                f:gracePeriod: {}
                f:ignoreDaemonSets: {}
                f:skipWaitForDeleteTimeoutSeconds: {}
                f:timeout: {}
              f:workerConcurrency: {}
              f:workerDrainOptions:
                .: {}
                f:deleteEmptyDirData: {}
                f:disableEviction: {}
                f:enabled: {}
                f:force: {}
                f:gracePeriod: {}
                f:ignoreDaemonSets: {}
                f:skipWaitForDeleteTimeoutSeconds: {}
                f:timeout: {}
      manager: rancher
      operation: Update
      time: '2023-05-25T03:28:43Z'
    - apiVersion: provisioning.cattle.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:status:
          .: {}
          f:clusterName: {}
          f:conditions: {}
          f:observedGeneration: {}
      manager: rancher
      operation: Update
      subresource: status
      time: '2023-05-25T03:28:43Z'
  name: miniocluster
  namespace: fleet-default
  resourceVersion: '11387319'
  uid: eb6c2e66-bb87-459c-a8bc-2ebe3022a813
spec:
  kubernetesVersion: v1.25.7+rke2r1
  localClusterAuthEndpoint: {}
  rkeConfig:
    chartValues:
      rke2-calico: {}
    etcd:
      snapshotRetention: 5
      snapshotScheduleCron: 0 */5 * * *
    machineGlobalConfig:
      cni: calico
      disable-kube-proxy: false
      etcd-expose-metrics: false
    machineSelectorConfig:
      - config:
          protect-kernel-defaults: false
    registries:
      configs:
        194.1.1.107:8888:
          authConfigSecretName: registryconfig-auth-6ndzv
      mirrors:
        194.1.1.107:8888:
          endpoint:
            - http://194.1.1.107:8888
    upgradeStrategy:
      controlPlaneConcurrency: '1'
      controlPlaneDrainOptions:
        deleteEmptyDirData: true
        disableEviction: false
        enabled: false
        force: false
        gracePeriod: -1
        ignoreDaemonSets: true
        ignoreErrors: false
        postDrainHooks: null
        preDrainHooks: null
        skipWaitForDeleteTimeoutSeconds: 0
        timeout: 120
      workerConcurrency: '1'
      workerDrainOptions:
        deleteEmptyDirData: true
        disableEviction: false
        enabled: false
        force: false
        gracePeriod: -1
        ignoreDaemonSets: true
        ignoreErrors: false
        postDrainHooks: null
        preDrainHooks: null
        skipWaitForDeleteTimeoutSeconds: 0
        timeout: 120
status:
  clusterName: c-m-sxlsgtbs
  conditions:
    - lastUpdateTime: '2023-05-25T03:27:38Z'
      reason: Reconciling
      status: 'True'
      type: Reconciling
    - lastUpdateTime: '2023-05-25T03:27:38Z'
      status: 'False'
      type: Stalled
    - lastUpdateTime: '2023-05-25T03:28:07Z'
      status: 'True'
      type: Created
    - lastUpdateTime: '2023-05-25T05:38:11Z'
      status: 'True'
      type: RKECluster
    - lastUpdateTime: '2023-05-25T03:27:38Z'
      status: 'True'
      type: BackingNamespaceCreated
    - lastUpdateTime: '2023-05-25T03:27:39Z'
      status: 'True'
      type: DefaultProjectCreated
    - lastUpdateTime: '2023-05-25T03:27:39Z'
      status: 'True'
      type: SystemProjectCreated
    - lastUpdateTime: '2023-05-25T03:27:39Z'
      status: 'True'
      type: InitialRolesPopulated
    - lastUpdateTime: '2023-05-25T03:27:40Z'
      status: 'True'
      type: CreatorMadeOwner
    - lastUpdateTime: '2023-05-25T03:27:41Z'
      status: 'True'
      type: NoDiskPressure
    - lastUpdateTime: '2023-05-25T03:27:41Z'
      status: 'True'
      type: NoMemoryPressure
    - lastUpdateTime: '2023-05-25T03:27:42Z'
      status: 'True'
      type: SecretsMigrated
    - lastUpdateTime: '2023-05-25T03:27:42Z'
      status: 'True'
      type: ServiceAccountSecretsMigrated
    - lastUpdateTime: '2023-05-25T03:27:42Z'
      status: 'True'
      type: RKESecretsMigrated
    - lastUpdateTime: '2023-05-25T03:27:53Z'
      status: 'True'
      type: Provisioned
    - lastUpdateTime: '2023-05-25T03:27:52Z'
      status: 'False'
      type: Connected
    - lastUpdateTime: '2023-05-25T05:38:11Z'
      message: waiting for viable init node
      reason: Waiting
      status: Unknown
      type: Updated
    - lastUpdateTime: '2023-05-25T03:28:07Z'
      message: Cluster agent is not connected
      reason: Disconnected
      status: 'False'
      type: Ready
  observedGeneration: 1

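Your configuration looks completely fine. Are you sure you re-ran the node registration command after changing the cluster configuration?

Yes. It used to be very smooth, back around version 2.2 I think. I recently wanted to try it again and hit a pitfall at every step. A single-node install of rancher:v2.6.12 on Docker 24.0.1 (Red Hat 8.5) doesn't work either: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused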

docker run -d --restart=unless-stopped -p 80:80 -p 443:443 --privileged rancher/rancher:v2.6.12

INFO: Running k3s server --cluster-init --cluster-reset

2023/05/25 06:25:37 [INFO] Rancher version v2.6.12 (4e6a3cbed) is starting

2023/05/25 06:25:37 [INFO] Rancher arguments {ACMEDomains:[] AddLocal:true Embedded:false BindHost: HTTPListenPort:80 HTTPSListenPort:443 K8sMode:auto Debug:false Trace:false NoCACerts:false AuditLogPath:/var/log/auditlog/rancher-api-audit.log AuditLogMaxage:10 AuditLogMaxsize:100 AuditLogMaxbackup:10 AuditLevel:0 Features: ClusterRegistry:}

2023/05/25 06:25:37 [INFO] Listening on /tmp/log.sock

2023/05/25 06:25:37 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:39 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:41 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:43 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:45 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:47 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:49 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:51 [INFO] Waiting for server to become available: Get "https://127.0.0.1:6444/version?timeout=15m0s": dial tcp 127.0.0.1:6444: connect: connection refused

2023/05/25 06:25:58 [FATAL] k3s exited with: exit status 2

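That's a separate problem. There's no need to mix it in with this image registry issue; it only makes the thread confusing. If you need help with it, please open a new topic.

One more suggestion: next time you post a question, please use Markdown syntax for logs, commands, and output. The forum supports Markdown, and it makes things easier for others and for yourself.

OK.

So does this count as a bug? Or is there a workaround?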

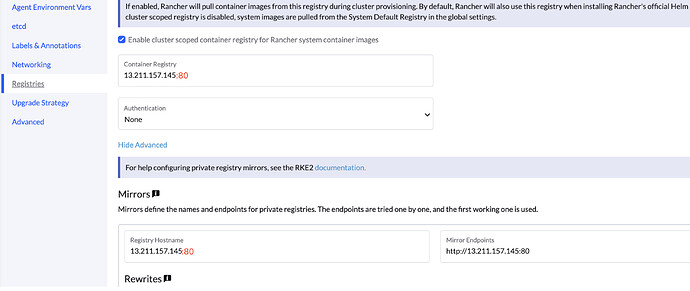

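It counts as a bug. There are two workarounds:

- Change Harbor's HTTP port to 80, then configure it in a way similar to the image below:

- Switch Harbor to HTTPS, then select the option to skip certificate verification when connecting to Harbor.

Thanks a lot for the help. rke2 is now installed successfully. Thank you!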

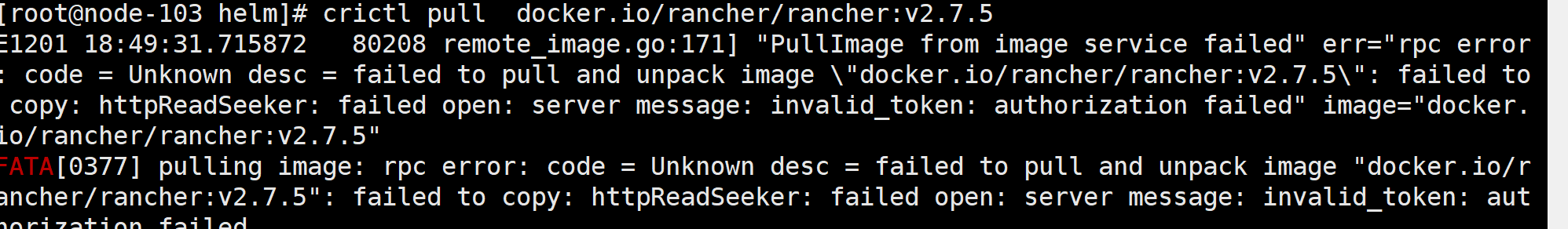

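I'm running into a similar problem now: Rancher 2.7.3, deployed with rke2, but containerd can't pull images from an HTTP Harbor; it always goes to port 443. It's a real headache.

The example above explains it quite clearly.

HTTP only works on port 80, and then you have to set up a mirror. It used to be very smooth; I don't know whether it's for security or something else, but it's harder now.

To use an insecure (HTTP) private registry on a port other than 80, leave the port number out of the Registry Hostname in the mirror and specify the actual port in the endpoint URL. For example, in /etc/rancher/rke2/registries.yaml: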

mirrors:
  192.168.1.10:
    endpoint:
      - "http://192.168.1.10:8080"
configs:
  "192.168.1.10:8080":
    auth:
      username: "admin"
      password: "xxxxxxx"

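PS: Note that if the registry requires authentication, the Registry Hostname under configs must match the host and port of the endpoint URL.

Once that is configured, the system default registry URL must point to the Registry Hostname value from the mirror. For example, in /etc/rancher/rke2/config.yaml: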

system-default-registry: "192.168.1.10"
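
The rules described in this post (mirror key without a port, credentials keyed by the endpoint's host and port, and system-default-registry equal to the mirror key) can be checked mechanically. Below is a minimal sketch in Python, assuming both files have already been parsed into plain dictionaries and that registries are named by hostname or IPv4 address. The function name `check_registry_rules` and the sample values are hypothetical; the sketch only encodes the convention from this post and is not something RKE2 itself enforces.

```python
from urllib.parse import urlsplit


def check_registry_rules(registries, system_default_registry):
    """Return a list of problems; an empty list means the convention holds.

    `registries` mirrors the structure of registries.yaml as a plain dict.
    """
    problems = []
    mirrors = registries.get("mirrors", {})
    configs = registries.get("configs", {})

    # system-default-registry should name one of the mirror entries.
    if system_default_registry not in mirrors:
        problems.append(
            f"system-default-registry {system_default_registry!r} is not a mirror key"
        )

    for name, mirror in mirrors.items():
        # The mirror key carries no port number (hostname/IPv4 assumed).
        if ":" in name:
            problems.append(f"mirror key {name!r} contains a port")
        for endpoint in mirror.get("endpoint", []):
            netloc = urlsplit(endpoint).netloc  # host:port without the scheme
            # When credentials are configured, they are keyed by host:port.
            if configs and netloc not in configs:
                problems.append(f"no configs entry for endpoint {netloc!r}")
    return problems


# Sample values taken from the example in this post.
good = {
    "mirrors": {"192.168.1.10": {"endpoint": ["http://192.168.1.10:8080"]}},
    "configs": {
        "192.168.1.10:8080": {"auth": {"username": "admin", "password": "xxxxxxx"}}
    },
}
print(check_registry_rules(good, "192.168.1.10"))  # prints []
```

Run against the configuration posted at the top of this thread, where the mirror key is `194.1.1.107:8888`, the same check reports that the mirror key contains a port.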

Could you leave a QQ contact? RKE2 is driving me crazy.

mirrors:
  #docker.io:
  192.168.177.130:8080:
    endpoint:
      - "http://192.168.177.130:8080"
configs:
  "192.168.177.130:8080":
    auth:
      username: admin
      password: Harborx
    tls:
      insecure_skip_verify: true

Where is this configured? I'm on version 2.8.5, and once it's running it also goes looking over HTTPS.

Failed to pull image "harbor01.io:8083/rancher/shell:v0.1.24": failed to pull and unpack image "harbor01.io:8083/rancher/shell:v0.1.24": failed to resolve reference "harbor01.io:8083/rancher/shell:v0.1.24": failed to do request: Head "https://harbor01.io:8083/v2/rancher/shell/manifests/v0.1.24": dial tcp: lookup harbor01.io: no such host