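Environment overview

1. http://file.mydemo.com is the local file download server; all offline packages are hosted there.

2. The NIC name in the VM template is standardized to eth0.

3. The local private Harbor registry is at http://harbor.demo.com.cn

4. https://rancher.demo.com.cn is the address of a Rancher instance deployed in advance.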

| Hostname | IP address | Node role | Installed component |
|---|---|---|---|
| node001 | 10.10.10.21 | master | k3s |
| node002 | 10.10.10.22 | ingress+agent | k3s |
| node003 | 10.10.10.23 | ingress+agent | k3s |
| node004 | 10.10.10.24 | agent | k3s |
| node005 | 10.10.10.25 | agent | k3s |

Steps on the master node

# Create the config file, specifying the pod CIDR, the service CIDR, and so on

mkdir -p /etc/rancher/k3s/

cat > /etc/rancher/k3s/config.yaml <<EOF

write-kubeconfig-mode: 644

write-kubeconfig: /root/.kube/config

token: "123456"

cluster-cidr: 192.168.0.0/16

service-cidr: 172.20.0.0/16

disable-network-policy: true

disable: traefik,coredns,local-storage

kubelet-arg: eviction-hard=imagefs.available<25%,memory.available<25%,nodefs.available<25%

node-name: IPV4_ADDR

EOF

# Get the IP address of eth0 and substitute it for the node-name placeholder above

IPADDR=$(ifconfig eth0 |grep inet |grep netmask |awk '{print $2}')

sed -i "s#IPV4_ADDR#$IPADDR#g" /etc/rancher/k3s/config.yaml
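On templates where `ifconfig` is not installed, the same lookup can be sketched with `ip` from iproute2. The helper name `first_ipv4` is hypothetical and not part of the original procedure; it only parses the one-line-per-address format printed by `ip -o -4 addr show`.

```shell
# Hypothetical helper (not from the original steps): read the output of
# `ip -o -4 addr show dev <iface>` on stdin and print the first IPv4
# address without its prefix length.
first_ipv4() {
  awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Intended use on a node (assumes eth0 exists, as in this environment):
#   IPADDR=$(ip -o -4 addr show dev eth0 | first_ipv4)
#   sed -i "s#IPV4_ADDR#$IPADDR#g" /etc/rancher/k3s/config.yaml
```

Taking only the first match keeps the result to a single address even when the interface carries secondary IPs.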

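Still on the master node, run the following commands to download the offline packages and the install script, then run the installation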

curl http://file.mydemo.com/tools/k3s/v1.20.15-k3s1/k3s -o /usr/local/bin/k3s

chmod +x /usr/local/bin/k3s

mkdir -p /var/lib/rancher/k3s/agent/images/

curl http://file.mydemo.com/tools/k3s/v1.20.15-k3s1/k3s-airgap-images-amd64.tar -o /var/lib/rancher/k3s/agent/images/k3s-airgap-images-amd64.tar

cd /root/

wget http://file.mydemo.com/tools/k3s/k3s-install.sh

chmod +x k3s-install.sh

INSTALL_K3S_SKIP_DOWNLOAD=true K3S_CONFIG_FILE=/etc/rancher/k3s/config.yaml ./k3s-install.sh

Run on the agent nodes

mkdir -p /etc/rancher/k3s/

cat > /etc/rancher/k3s/config.yaml <<EOF

kubelet-arg: eviction-hard=imagefs.available<15%,memory.available<10%,nodefs.available<15%

node-name: IPV4_ADDR

EOF

IPADDR=$(ifconfig eth0 |grep inet |grep netmask |awk '{print $2}')

sed -i "s#IPV4_ADDR#$IPADDR#g" /etc/rancher/k3s/config.yaml

curl http://file.mydemo.com/tools/k3s/v1.20.15-k3s1/k3s -o /usr/local/bin/k3s

chmod +x /usr/local/bin/k3s

mkdir -p /var/lib/rancher/k3s/agent/images/

curl http://file.mydemo.com/tools/k3s/v1.20.15-k3s1/k3s-airgap-images-amd64.tar -o /var/lib/rancher/k3s/agent/images/k3s-airgap-images-amd64.tar

cd /root/

wget http://file.mydemo.com/tools/k3s/k3s-install.sh

chmod +x k3s-install.sh

INSTALL_K3S_SKIP_DOWNLOAD=true K3S_TOKEN=123456 K3S_URL=https://10.10.10.21:6443 K3S_CONFIG_FILE=/etc/rancher/k3s/config.yaml ./k3s-install.sh

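Configure the private registry

Run on all nodes

Configure the local registry. Each `test -e` checks that the unit file exists before the restart command after it is run.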

mkdir -p /etc/rancher/k3s/

cat > /etc/rancher/k3s/registries.yaml <<EOF

mirrors:
  "harbor.demo.com.cn":
    endpoint:
      - "http://harbor.demo.com.cn"
  "docker.io":
    endpoint:
      - "http://harbor.demo.com.cn"
configs:
  "harbor.demo.com.cn":
    tls:
      insecure_skip_verify: true

EOF

test -e /etc/systemd/system/k3s.service && systemctl restart k3s

test -e /etc/systemd/system/k3s-agent.service && systemctl restart k3s-agent
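The two `test -e` lines pick whichever unit is installed on the node. A minimal sketch of the same decision as a reusable function follows; the name `k3s_unit` and its optional directory argument are hypothetical, added only for illustration.

```shell
# Hypothetical helper: print the name of the k3s systemd unit installed on
# this node. The optional first argument overrides the unit directory.
k3s_unit() {
  unit_dir="${1:-/etc/systemd/system}"
  if [ -e "$unit_dir/k3s.service" ]; then
    echo k3s
  elif [ -e "$unit_dir/k3s-agent.service" ]; then
    echo k3s-agent
  else
    echo "no k3s unit found in $unit_dir" >&2
    return 1
  fi
}

# Intended use on a node (requires root):
#   unit=$(k3s_unit) && systemctl restart "$unit"
```

Unlike the two independent tests, this variant reports explicitly when neither unit file is present.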

Set up the LB nodes

Dedicate two nodes to load balancing

kubectl label node 10.10.10.22 svccontroller.k3s.cattle.io/enablelb=true

kubectl label node 10.10.10.23 svccontroller.k3s.cattle.io/enablelb=true

kubectl taint nodes 10.10.10.22 node-role.kubernetes.io/control-plane=svclb:NoSchedule

kubectl taint nodes 10.10.10.23 node-role.kubernetes.io/control-plane=svclb:NoSchedule

Label them for ingress

kubectl label node 10.10.10.22 app=ingress

kubectl label node 10.10.10.23 app=ingress
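The label and taint commands above are identical apart from the node name, so they can be generated in a loop. This is only a sketch: the function name `lb_node_commands` is hypothetical, and it prints the commands instead of running them so they can be reviewed first.

```shell
# Hypothetical helper: print (not run) the label and taint commands used
# above for each LB node name given as an argument.
lb_node_commands() {
  for node in "$@"; do
    echo "kubectl label node $node svccontroller.k3s.cattle.io/enablelb=true"
    echo "kubectl taint nodes $node node-role.kubernetes.io/control-plane=svclb:NoSchedule"
    echo "kubectl label node $node app=ingress"
  done
}

# Review the output, then pipe it to a shell on the master node:
#   lb_node_commands 10.10.10.22 10.10.10.23 | sh
```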

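Install ingress

The ingress manifest below is modified from the official one. The main changes are:

1. It tolerates any taint.

2. A node selector was added so that it only selects nodes labeled app=ingress.

3. hostNetwork=true is set, so it listens on port 80 of the host.

4. The Deployment was changed to a DaemonSet.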

kubectl apply -f http://file.mydemo.com/tools/k8s/ingress-nginx/v1.1.1/ingress-nginx-controller-v1.1.1/deploy/static/provider/baremetal/deploy-taint.yaml

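Install CoreDNS

The CoreDNS manifest has been modified. The main changes are:

1. The replica count was changed to 2.

2. The following two lines were removed from config-volume:

- key: NodeHosts
  path: NodeHosts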

kubectl apply -f http://file.mydemo.com/tools/k3s/v1.20.15-k3s1/coredns.yml

Verification

[root@node001 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.10.10.21 Ready control-plane,master 13m v1.20.15+k3s1

10.10.10.23 Ready <none> 12m v1.20.15+k3s1

10.10.10.22 Ready <none> 12m v1.20.15+k3s1

10.10.10.24 Ready <none> 12m v1.20.15+k3s1

10.10.10.25 Ready <none> 12m v1.20.15+k3s1

[root@node001 ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system metrics-server-86cbb8457f-8nls9 1/1 Running 0 13m 192.168.0.2 10.10.10.21 <none> <none>

ingress-nginx ingress-nginx-admission-create-fc9z7 0/1 Completed 0 2m43s 192.168.3.2 10.10.10.24 <none> <none>

ingress-nginx ingress-nginx-admission-patch-9mlxl 0/1 Completed 1 2m43s 192.168.4.2 10.10.10.25 <none> <none>

ingress-nginx ingress-nginx-controller-9dtt2 1/1 Running 0 2m43s 10.10.10.23 10.10.10.23 <none> <none>

ingress-nginx ingress-nginx-controller-glk6k 1/1 Running 0 2m43s 10.10.10.22 10.10.10.22 <none> <none>

kube-system coredns-86cd6898c-pmg7q 1/1 Running 0 29s 192.168.3.3 10.10.10.24 <none> <none>

kube-system coredns-86cd6898c-kc9qr 1/1 Running 0 29s 192.168.4.3 10.10.10.25 <none> <none>

[root@node001 ~]#

Simulate a workload pod

kubectl apply -f http://file.mydemo.com/tools/k8s-yaml/nginx.yaml

Contents of nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 8
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: harbor.demo.com.cn/nginx/nginx:stable
        imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  type: LoadBalancer
  ports:
  - port: 8080
    name: nginx
    targetPort: 80
  selector:
    app: nginx

Access from a browser works.

Check the default number of iptables rules in the nat table

[root@node002 ~]# iptables -L -n -t nat |wc -l

256

[root@node002 ~]#

Import the cluster into Rancher

curl --insecure -sfL https://rancher.demo.com.cn/v3/import/cfdl7rnc5gsvn2fsz8w4vrdxqrjn5mxgwtv45nqbssfvw8mxsdgbqc_c-gqxdp.yaml | kubectl apply -f -

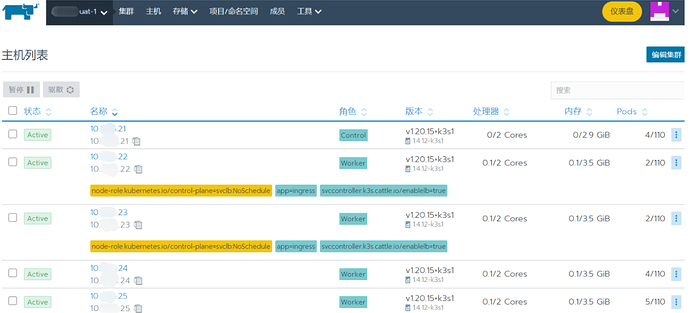

The import succeeds.

Check the pods

[root@node001 ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system metrics-server-86cbb8457f-8nls9 1/1 Running 0 59m

ingress-nginx ingress-nginx-admission-create-fc9z7 0/1 Completed 0 48m

ingress-nginx ingress-nginx-admission-patch-9mlxl 0/1 Completed 1 48m

ingress-nginx ingress-nginx-controller-9dtt2 1/1 Running 0 48m

ingress-nginx ingress-nginx-controller-glk6k 1/1 Running 0 48m

kube-system coredns-86cd6898c-pmg7q 1/1 Running 0 45m

kube-system coredns-86cd6898c-kc9qr 1/1 Running 0 45m

default nginx-5868b9c5cb-dwklw 1/1 Running 0 31m

default nginx-5868b9c5cb-ts7ns 1/1 Running 0 31m

default nginx-5868b9c5cb-jrvqw 1/1 Running 0 30m

default nginx-5868b9c5cb-r4whn 1/1 Running 0 30m

default nginx-5868b9c5cb-xzlwv 1/1 Running 0 30m

default nginx-5868b9c5cb-b26gh 1/1 Running 0 30m

default nginx-5868b9c5cb-9tvrt 1/1 Running 0 30m

default nginx-5868b9c5cb-nqkpg 1/1 Running 0 30m

default svclb-nginx-5f499 1/1 Running 0 20m

default svclb-nginx-wdhfq 1/1 Running 0 20m

cattle-system cattle-cluster-agent-78698f9b5d-qthsq 1/1 Running 0 7m55s

fleet-system fleet-agent-5b84678594-b97fs 1/1 Running 0 7m33s

[root@node001 ~]#

At this point IPVS has no rules, which is expected.

[root@node001 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@node001 ~]#

iptables rules on an LB node

[root@node002 ~]# iptables -L -n -t nat |wc -l

256

[root@node002 ~]# iptables -L -n |wc -l

41

[root@node002 ~]#

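Switch to IPVS mode

The main step is to check whether the config file already contains an ipvs setting; if it does not, append this line:

kube-proxy-arg: proxy-mode=ipvs

The nat and filter tables are reset at the same time. The chains in them were created automatically in iptables mode, and iptables -F only deletes rules, not chains, so the tables are simply restored to their defaults. The contents of init-iptables.txt are as follows: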

# Generated by iptables-save v1.4.21 on Sun Jun 12 20:06:01 2022

*nat

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:POSTROUTING ACCEPT [0:0]

COMMIT

# Completed on Sun Jun 12 20:06:01 2022

# Generated by iptables-save v1.4.21 on Sun Jun 12 20:06:01 2022

*filter

:INPUT ACCEPT [225:17568]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [184:39537]

COMMIT

# Completed on Sun Jun 12 20:06:01 2022
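To confirm that a restore really removed the chains left over from iptables mode, the `iptables-save` output can be filtered for user-defined chains. The helper below is a hedged sketch with a hypothetical name, `custom_chains`; it relies only on the dump format shown above, where built-in chains carry a policy such as ACCEPT and user-defined chains are declared with `-` in the policy column.

```shell
# Hypothetical helper: read iptables-save output on stdin and print the
# names of user-defined chains (policy column is "-").
custom_chains() {
  awk '/^:/ && $2 == "-" { print substr($1, 2) }'
}

# Intended use on a node (requires root). Right after iptables-restore and
# before k3s is restarted, empty output means only built-in chains remain:
#   iptables-save -t nat | custom_chains
#   iptables-save -t filter | custom_chains
```

Once k3s is running again it creates its own chains, so a non-empty result after the restart is not by itself a sign that the reset failed.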

touch /etc/rancher/k3s/config.yaml

IPVS_FLAG=$(grep ipvs /etc/rancher/k3s/config.yaml |wc -l)

if [[ $IPVS_FLAG -eq 0 ]]; then

echo "kube-proxy-arg: proxy-mode=ipvs">>/etc/rancher/k3s/config.yaml

wget http://file.mydemo.com/tools/k8s/init-iptables.txt && iptables-restore < init-iptables.txt

test -e /etc/systemd/system/k3s.service && systemctl restart k3s

test -e /etc/systemd/system/k3s-agent.service && systemctl restart k3s-agent

fi
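The script above treats any occurrence of the string "ipvs" in the config file as "already configured". A slightly stricter sketch matches the exact line instead; the function name `ensure_line` is hypothetical and the return convention (success only when the file was changed) is a choice made for this example.

```shell
# Hypothetical helper: append a line to a file only if that exact line is
# not already present (-x matches the whole line, -F treats it as a fixed
# string). Returns 0 when the line was appended, 1 when it already existed.
ensure_line() {
  file="$1"
  line="$2"
  touch "$file"
  if grep -qxF -- "$line" "$file"; then
    return 1
  fi
  printf '%s\n' "$line" >> "$file"
}

# Intended use on a node (requires root):
#   if ensure_line /etc/rancher/k3s/config.yaml "kube-proxy-arg: proxy-mode=ipvs"; then
#     : # reset iptables and restart k3s or k3s-agent as in the script above
#   fi
```

Running it twice leaves a single copy of the line, so the switch stays idempotent.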

The script has finished.

Node 10.10.10.23 now looks like this:

[root@node003 ~]# iptables -L -n -t nat |wc -l

55

[root@node003 ~]# ipvsadm -L -n |wc -l

82

[root@node003 ~]#

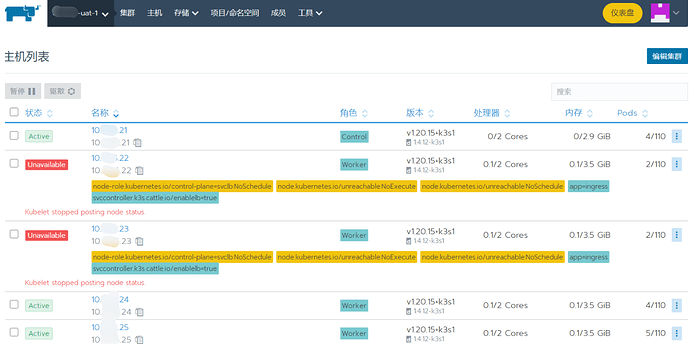

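At this point, two nodes have lost contact with the cluster.

The two affected nodes are the LB nodes.

The two LB nodes can ping each other, but they cannot ping the other k3s nodes.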

[root@node003 ~]# ping 10.10.10.22 -c 2

PING 10.10.10.22 (10.10.10.22) 56(84) bytes of data.

64 bytes from 10.10.10.22: icmp_seq=1 ttl=64 time=0.083 ms

64 bytes from 10.10.10.22: icmp_seq=2 ttl=64 time=0.082 ms

--- 10.10.10.22 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1058ms

rtt min/avg/max/mdev = 0.082/0.082/0.083/0.009 ms

[root@node003 ~]# ping 10.10.10.23 -c 2

PING 10.10.10.23 (10.10.10.23) 56(84) bytes of data.

64 bytes from 10.10.10.23: icmp_seq=1 ttl=64 time=0.121 ms

64 bytes from 10.10.10.23: icmp_seq=2 ttl=64 time=0.111 ms

--- 10.10.10.23 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.111/0.116/0.121/0.005 ms

[root@node003 ~]# ping 10.10.10.24 -c 2

PING 10.10.10.24 (10.10.10.24) 56(84) bytes of data.

From 10.10.10.23 icmp_seq=1 Destination Host Unreachable

From 10.10.10.23 icmp_seq=2 Destination Host Unreachable

--- 10.10.10.24 ping statistics ---

2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1000ms

pipe 2

[root@node003 ~]# ping 10.10.10.25 -c 2

PING 10.10.10.25 (10.10.10.25) 56(84) bytes of data.

From 10.10.10.23 icmp_seq=1 Destination Host Unreachable

From 10.10.10.23 icmp_seq=2 Destination Host Unreachable

--- 10.10.10.25 ping statistics ---

2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1001ms

pipe 2

[root@node003 ~]# ping 10.10.10.21 -c 2

PING 10.10.10.21 (10.10.10.21) 56(84) bytes of data.

From 10.10.10.23 icmp_seq=1 Destination Host Unreachable

From 10.10.10.23 icmp_seq=2 Destination Host Unreachable

--- 10.10.10.21 ping statistics ---

2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1000ms

[root@node003 ~]#

The LB nodes can still reach the gateway

[root@node003 ~]# ping 10.10.10.254 -c 2

PING 10.10.10.254 (10.10.10.254) 56(84) bytes of data.

64 bytes from 10.10.10.254: icmp_seq=1 ttl=254 time=0.919 ms

64 bytes from 10.10.10.254: icmp_seq=2 ttl=254 time=0.943 ms

--- 10.10.10.254 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.919/0.931/0.943/0.012 ms

[root@node003 ~]#

The master node can ping every node, including the two LB nodes

[root@node001 ~]# ping 10.10.10.22 -c 2

PING 10.10.10.22 (10.10.10.22) 56(84) bytes of data.

64 bytes from 10.10.10.22: icmp_seq=1 ttl=64 time=0.076 ms

64 bytes from 10.10.10.22: icmp_seq=2 ttl=64 time=0.043 ms

--- 10.10.10.22 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1008ms

rtt min/avg/max/mdev = 0.043/0.059/0.076/0.018 ms

[root@node001 ~]# ping 10.10.10.23 -c 2

PING 10.10.10.23 (10.10.10.23) 56(84) bytes of data.

64 bytes from 10.10.10.23: icmp_seq=1 ttl=64 time=0.089 ms

64 bytes from 10.10.10.23: icmp_seq=2 ttl=64 time=0.077 ms

--- 10.10.10.23 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1004ms

rtt min/avg/max/mdev = 0.077/0.083/0.089/0.006 ms

[root@node001 ~]# ping 10.10.10.24 -c 2

PING 10.10.10.24 (10.10.10.24) 56(84) bytes of data.

64 bytes from 10.10.10.24: icmp_seq=1 ttl=64 time=0.892 ms

64 bytes from 10.10.10.24: icmp_seq=2 ttl=64 time=0.444 ms

--- 10.10.10.24 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1036ms

rtt min/avg/max/mdev = 0.444/0.668/0.892/0.224 ms

[root@node001 ~]#
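The manual pings above can be condensed into a loop that prints one result line per address. This is a sketch under assumptions: the function name `check_nodes` and the overridable `PING` variable are hypothetical, the latter added only so the loop can be exercised without a network.

```shell
# Hypothetical helper: ping each address given as an argument and print
# "<ip> OK" or "<ip> FAIL". PING may be overridden with another command.
PING="${PING:-ping}"

check_nodes() {
  for ip in "$@"; do
    # -c 2: send two probes; -W 1: wait at most one second per reply
    if "$PING" -c 2 -W 1 "$ip" >/dev/null 2>&1; then
      echo "$ip OK"
    else
      echo "$ip FAIL"
    fi
  done
}

# Intended use on any node in this environment:
#   check_nodes 10.10.10.21 10.10.10.22 10.10.10.23 10.10.10.24 10.10.10.25 10.10.10.254
```

Running the same list from an LB node and from the master makes the asymmetry between the two easy to compare side by side.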

However, the master node cannot ping the pods running on those two nodes

[root@node001 ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system metrics-server-86cbb8457f-8nls9 1/1 Running 0 90m 192.168.0.2 10.10.10.21 <none> <none>

ingress-nginx ingress-nginx-admission-create-fc9z7 0/1 Completed 0 79m 192.168.3.2 10.10.10.24 <none> <none>

ingress-nginx ingress-nginx-admission-patch-9mlxl 0/1 Completed 1 79m 192.168.4.2 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-dwklw 1/1 Running 0 62m 192.168.3.4 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-ts7ns 1/1 Running 0 62m 192.168.4.4 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-jrvqw 1/1 Running 0 61m 192.168.3.5 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-r4whn 1/1 Running 0 61m 192.168.3.6 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-xzlwv 1/1 Running 0 61m 192.168.4.6 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-b26gh 1/1 Running 0 61m 192.168.4.5 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-9tvrt 1/1 Running 0 61m 192.168.0.4 10.10.10.21 <none> <none>

default nginx-5868b9c5cb-nqkpg 1/1 Running 0 61m 192.168.0.3 10.10.10.21 <none> <none>

fleet-system fleet-agent-5b84678594-b97fs 1/1 Running 0 38m 192.168.4.8 10.10.10.25 <none> <none>

cattle-system cattle-cluster-agent-78698f9b5d-qthsq 1/1 Running 0 39m 192.168.0.6 10.10.10.21 <none> <none>

kube-system coredns-86cd6898c-pmg7q 1/1 Running 0 77m 192.168.3.3 10.10.10.24 <none> <none>

kube-system coredns-86cd6898c-kc9qr 1/1 Running 0 77m 192.168.4.3 10.10.10.25 <none> <none>

ingress-nginx ingress-nginx-controller-glk6k 1/1 Running 0 79m 10.10.10.22 10.10.10.22 <none> <none>

default svclb-nginx-5f499 1/1 Running 0 52m 192.168.1.2 10.10.10.22 <none> <none>

default svclb-nginx-wdhfq 1/1 Running 0 52m 192.168.2.2 10.10.10.23 <none> <none>

ingress-nginx ingress-nginx-controller-9dtt2 1/1 Running 0 79m 10.10.10.23 10.10.10.23 <none> <none>

[root@node001 ~]# ping 192.168.1.2 -c 2

PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.

^C

--- 192.168.1.2 ping statistics ---

2 packets transmitted, 0 received, 100% packet loss, time 1021ms

[root@node001 ~]#

Log in to either LB node

The k3s-agent log shows the following error:

error="dial tcp 10.10.10.21:6443: connect: no route to host"

[root@node002 ~]# systemctl status k3s-agent -l

● k3s-agent.service - Lightweight Kubernetes

Loaded: loaded (/etc/systemd/system/k3s-agent.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2022-06-24 15:48:23 CST; 20min ago

Docs: https://k3s.io

Process: 20470 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)

Process: 20468 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)

Main PID: 20472 (k3s-agent)

Tasks: 47

Memory: 537.5M

CGroup: /system.slice/k3s-agent.service

├─ 7849 /var/lib/rancher/k3s/data/77ca12849da9a6f82acce910d05b017c21a69b14f02ef84177a7f6aaa1265e82/bin/containerd-shim-runc-v2 -namespace k8s.io -id c39b7664e8b473fa1afc015cbb2d1776654788e639b35591bc349a24ddf16e52 -address /run/k3s/containerd/containerd.sock

├─13105 /var/lib/rancher/k3s/data/77ca12849da9a6f82acce910d05b017c21a69b14f02ef84177a7f6aaa1265e82/bin/containerd-shim-runc-v2 -namespace k8s.io -id d30a5fc636bd432485284ea06d14836861d8c60997617bb53c1c0cfc560adf64 -address /run/k3s/containerd/containerd.sock

├─20472 /usr/local/bin/k3s agent

└─20497 containerd

Jun 24 16:08:44 node002 k3s[20472]: I0624 16:08:44.225101 20472 trace.go:205] Trace[1868438517]: "Reflector ListAndWatch" name:k8s.io/client-go/informers/factory.go:134 (24-Jun-2022 16:08:17.924) (total time: 26300ms):

Jun 24 16:08:44 node002 k3s[20472]: Trace[1868438517]: [26.300321679s] [26.300321679s] END

Jun 24 16:08:44 node002 k3s[20472]: E0624 16:08:44.225185 20472 reflector.go:138] k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.Service: failed to list *v1.Service: an error on the server ("") has prevented the request from succeeding (get services)

Jun 24 16:08:44 node002 k3s[20472]: E0624 16:08:44.225236 20472 kubelet_node_status.go:470] Error updating node status, will retry: error getting node "10.10.10.22": Get "https://127.0.0.1:35551/api/v1/nodes/10.10.10.22?timeout=10s": context deadline exceeded

Jun 24 16:08:46 node002 k3s[20472]: time="2022-06-24T16:08:46.652388582+08:00" level=info msg="Connecting to proxy" url="wss://10.10.10.21:6443/v1-k3s/connect"

Jun 24 16:08:47 node002 k3s[20472]: time="2022-06-24T16:08:47.796951939+08:00" level=error msg="Failed to connect to proxy" error="dial tcp 10.10.10.21:6443: connect: no route to host"

Jun 24 16:08:47 node002 k3s[20472]: time="2022-06-24T16:08:47.797073565+08:00" level=error msg="Remotedialer proxy error" error="dial tcp 10.10.10.21:6443: connect: no route to host"

Jun 24 16:08:52 node002 k3s[20472]: time="2022-06-24T16:08:52.797336064+08:00" level=info msg="Connecting to proxy" url="wss://10.10.10.21:6443/v1-k3s/connect"

Jun 24 16:08:53 node002 k3s[20472]: time="2022-06-24T16:08:53.940490530+08:00" level=error msg="Failed to connect to proxy" error="dial tcp 10.10.10.21:6443: connect: no route to host"

Jun 24 16:08:53 node002 k3s[20472]: time="2022-06-24T16:08:53.940586504+08:00" level=error msg="Remotedialer proxy error" error="dial tcp 10.10.10.21:6443: connect: no route to host"

[root@node002 ~]#

Delete the Service, that is, the LoadBalancer

[root@node001 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 105m

nginx LoadBalancer 172.20.172.173 10.10.10.22,10.10.10.23 8080:32233/TCP 73m

[root@node001 ~]# kubectl delete svc nginx

service "nginx" deleted

[root@node001 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 105m

[root@node001 ~]#

The two svclb pods are now gone

[root@node001 ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system metrics-server-86cbb8457f-8nls9 1/1 Running 0 107m 192.168.0.2 10.10.10.21 <none> <none>

ingress-nginx ingress-nginx-admission-create-fc9z7 0/1 Completed 0 96m 192.168.3.2 10.10.10.24 <none> <none>

ingress-nginx ingress-nginx-admission-patch-9mlxl 0/1 Completed 1 96m 192.168.4.2 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-dwklw 1/1 Running 0 79m 192.168.3.4 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-ts7ns 1/1 Running 0 79m 192.168.4.4 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-jrvqw 1/1 Running 0 79m 192.168.3.5 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-r4whn 1/1 Running 0 79m 192.168.3.6 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-xzlwv 1/1 Running 0 79m 192.168.4.6 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-b26gh 1/1 Running 0 79m 192.168.4.5 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-9tvrt 1/1 Running 0 79m 192.168.0.4 10.10.10.21 <none> <none>

default nginx-5868b9c5cb-nqkpg 1/1 Running 0 79m 192.168.0.3 10.10.10.21 <none> <none>

fleet-system fleet-agent-5b84678594-b97fs 1/1 Running 0 56m 192.168.4.8 10.10.10.25 <none> <none>

cattle-system cattle-cluster-agent-78698f9b5d-qthsq 1/1 Running 0 56m 192.168.0.6 10.10.10.21 <none> <none>

kube-system coredns-86cd6898c-pmg7q 1/1 Running 0 94m 192.168.3.3 10.10.10.24 <none> <none>

kube-system coredns-86cd6898c-kc9qr 1/1 Running 0 94m 192.168.4.3 10.10.10.25 <none> <none>

ingress-nginx ingress-nginx-controller-9dtt2 1/1 Running 1 96m 10.10.10.23 10.10.10.23 <none> <none>

ingress-nginx ingress-nginx-controller-glk6k 1/1 Running 1 96m 10.10.10.22 10.10.10.22 <none> <none>

[root@node001 ~]#

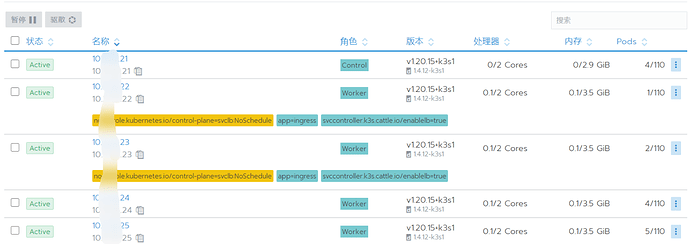

With the svclb pods removed, LB node 10.10.10.22 can ping the master

[root@node002 ~]# ping 10.10.10.21

PING 10.10.10.21 (10.10.10.21) 56(84) bytes of data.

64 bytes from 10.10.10.21: icmp_seq=1 ttl=64 time=0.453 ms

64 bytes from 10.10.10.21: icmp_seq=2 ttl=64 time=0.456 ms

^C

--- 10.10.10.21 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1018ms

rtt min/avg/max/mdev = 0.453/0.454/0.456/0.021 ms

[root@node002 ~]#

LB node 10.10.10.23 can ping the master node again as well

[root@node003 ~]# ping 10.10.10.21 -c 2

PING 10.10.10.21 (10.10.10.21) 56(84) bytes of data.

64 bytes from 10.10.10.21: icmp_seq=1 ttl=64 time=1.32 ms

64 bytes from 10.10.10.21: icmp_seq=2 ttl=64 time=0.353 ms

--- 10.10.10.21 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.353/0.836/1.320/0.484 ms

[root@node003 ~]#

The nodes are back to normal.

If the nginx manifest is applied again, svclb is created again and the LB nodes become unreachable again

[root@node001 ~]# kubectl apply -f http://file.mydemo.com/tools/k8s-yaml/nginx-dp-lb.yaml

deployment.apps/nginx unchanged

service/nginx created

[root@node001 ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system metrics-server-86cbb8457f-8nls9 1/1 Running 0 122m 192.168.0.2 10.10.10.21 <none> <none>

ingress-nginx ingress-nginx-admission-create-fc9z7 0/1 Completed 0 111m 192.168.3.2 10.10.10.24 <none> <none>

ingress-nginx ingress-nginx-admission-patch-9mlxl 0/1 Completed 1 111m 192.168.4.2 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-dwklw 1/1 Running 0 94m 192.168.3.4 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-ts7ns 1/1 Running 0 94m 192.168.4.4 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-jrvqw 1/1 Running 0 93m 192.168.3.5 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-r4whn 1/1 Running 0 93m 192.168.3.6 10.10.10.24 <none> <none>

default nginx-5868b9c5cb-xzlwv 1/1 Running 0 93m 192.168.4.6 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-b26gh 1/1 Running 0 93m 192.168.4.5 10.10.10.25 <none> <none>

default nginx-5868b9c5cb-9tvrt 1/1 Running 0 93m 192.168.0.4 10.10.10.21 <none> <none>

default nginx-5868b9c5cb-nqkpg 1/1 Running 0 93m 192.168.0.3 10.10.10.21 <none> <none>

fleet-system fleet-agent-5b84678594-b97fs 1/1 Running 0 70m 192.168.4.8 10.10.10.25 <none> <none>

cattle-system cattle-cluster-agent-78698f9b5d-qthsq 1/1 Running 0 71m 192.168.0.6 10.10.10.21 <none> <none>

kube-system coredns-86cd6898c-pmg7q 1/1 Running 0 109m 192.168.3.3 10.10.10.24 <none> <none>

kube-system coredns-86cd6898c-kc9qr 1/1 Running 0 109m 192.168.4.3 10.10.10.25 <none> <none>

ingress-nginx ingress-nginx-controller-9dtt2 1/1 Running 1 111m 10.10.10.23 10.10.10.23 <none> <none>

ingress-nginx ingress-nginx-controller-glk6k 1/1 Running 1 111m 10.10.10.22 10.10.10.22 <none> <none>

default svclb-nginx-2fghh 1/1 Running 0 3s 192.168.1.3 10.10.10.22 <none> <none>

default svclb-nginx-v7hmb 1/1 Running 0 3s 192.168.2.3 10.10.10.23 <none> <none>

[root@node001 ~]#

The LB nodes can no longer reach the master

[root@node003 ~]# ping 10.10.10.21 -c 2

PING 10.10.10.21 (10.10.10.21) 56(84) bytes of data.

--- 10.10.10.21 ping statistics ---

2 packets transmitted, 0 received, 100% packet loss, time 1043ms

[root@node003 ~]#
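A possible next diagnostic step, which the write-up above does not perform: in IPVS mode kube-proxy binds Service addresses to a dummy interface named `kube-ipvs0`, and the `nginx` Service lists the LB node IPs 10.10.10.22 and 10.10.10.23 as its external IPs. Whether those addresses end up bound locally on the other nodes is an assumption worth checking, not a confirmed root cause. The helper name `addr_bound` is hypothetical.

```shell
# Hypothetical diagnostic helper: read `ip -o -4 addr show dev <iface>`
# output on stdin and succeed if the given address is bound there.
addr_bound() {
  awk -v want="$1" '
    $3 == "inet" { split($4, a, "/"); if (a[1] == want) found = 1 }
    END { exit !found }
  '
}

# Possible use on a non-LB node such as 10.10.10.24 (assumes IPVS mode is
# active, so the kube-ipvs0 interface exists):
#   for addr in 10.10.10.22 10.10.10.23; do
#     ip -o -4 addr show dev kube-ipvs0 | addr_bound "$addr" \
#       && echo "$addr is bound locally on kube-ipvs0"
#   done
```

If the LB node addresses do show up there, comparing the result before and after `kubectl delete svc nginx` would show whether the binding follows the Service.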