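This note explains what went wrong in tonight's live demo: I used K3K to create a shared-mode virtual cluster, deployed an nginx Deployment with a mounted PV in that virtual cluster, and the pod stayed in Pending.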

How the demo went wrong:

Create a shared-mode virtual cluster with k3kcli:

k3kcli cluster create shared-3

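At this point the virtual cluster was created successfully, but deploying the sample YAML in the virtual cluster failed.

The sample YAML is as follows: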

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80
              volumeMounts:
                - name: nginx-data
                  mountPath: /data
          volumes:
            - name: nginx-data
              persistentVolumeClaim:
                claimName: nginx-pvc
    ---

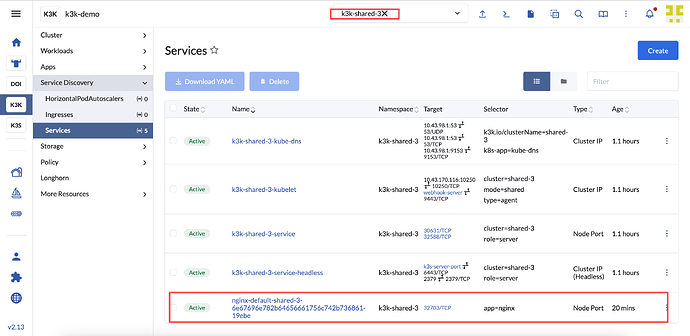

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
    spec:
      type: NodePort
      selector:
        app: nginx
      ports:
        - port: 80
          targetPort: 80

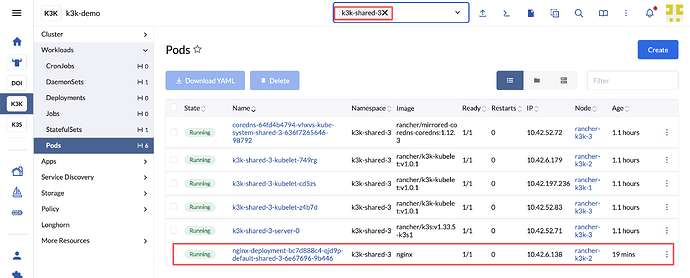

The nginx pod stays in Pending:

    hailong ~/meetup k get pods
    NAME                               READY   STATUS    RESTARTS   AGE
    nginx-deployment-bc7d888c4-dsl7s   0/1     Pending   0          60m

The pod events are as follows:

    Events:
      Type     Reason            Age                   From               Message
      ----     ------            ----                  ----               -------
      Warning  FailedScheduling  60m (x3 over 60m)     default-scheduler  0/3 nodes are available: pod has unbound immediate PersistentVolumeClaims. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.
      Warning  FailedScheduling  5m55s (x11 over 55m)  default-scheduler  0/3 nodes are available: persistentvolumeclaim "nginx-pvc" bound to non-existent persistentvolume "". preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

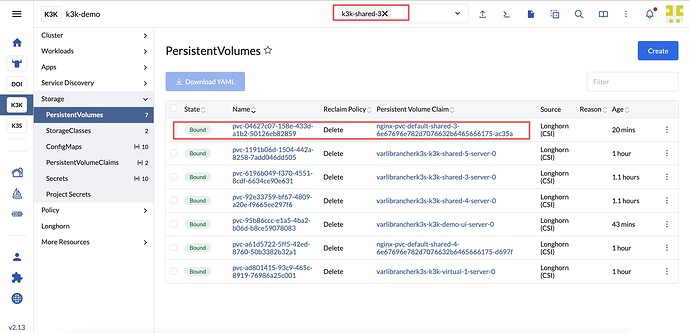
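Both warnings point at the PVC rather than at the nodes, so the claim itself is the next thing to inspect. A minimal sketch of that check, assuming `kubectl` is pointed at the virtual cluster's kubeconfig; the helper names `pvc_phase` and `pvc_volume` are made up for illustration and are not part of K3K or kubectl:

```shell
# Hypothetical helpers (not part of kubectl or K3K) for checking a PVC
# in the current namespace of whichever cluster kubectl points at.

# Print the phase of a PVC: Pending, Bound, or Lost.
pvc_phase() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Print the name of the PV the claim references.
# Empty output means the claim has no volume assigned.
pvc_volume() {
  kubectl get pvc "$1" -o jsonpath='{.spec.volumeName}'
}
```

For example, `pvc_phase nginx-pvc` followed by `pvc_volume nginx-pvc` shows whether the claim ever received a volume; `kubectl describe pvc nginx-pvc` gives the provisioning events in full.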

Root cause analysis

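The problem was caused by leftover data. Before the demo I forgot to clean up a namespace in which this YAML had already been applied, and that YAML is identical to the sample YAML above. A PV for it had therefore already been provisioned and bound through the StorageClass, so applying the same nginx Deployment in the new virtual cluster caused scheduling to fail.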

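After I cleaned up the leftover Deployment, PVC, and related data, the pod in the virtual cluster started successfully, and the resources created from this YAML are visible on the host cluster.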
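To avoid repeating this, a quick check for leftover claims before the demo may help. The following is only a sketch, assuming `kubectl` is pointed at the host cluster; the function name `leftover_pvcs` is hypothetical, and the substring match is used on the assumption that resource names on the host may carry extra prefixes or suffixes:

```shell
# Hypothetical pre-demo check (not part of kubectl or K3K).
# List PVCs in every namespace whose name contains the given string.
# Output: one "<namespace>/<pvc-name> <status>" line per match.
leftover_pvcs() {
  kubectl get pvc --all-namespaces --no-headers |
    awk -v pat="$1" 'index($2, pat) { print $1 "/" $2, $3 }'
}
```

Running `leftover_pvcs nginx-pvc` before the demo lists any stale claims; an empty result suggests the name is free. Anything it reports can then be removed together with its Deployment before creating the new virtual cluster.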