Rancher Server Setup

- Rancher version: v2.7.1

- Installation option (Docker install/Helm Chart): Docker install

- If Helm Chart, the type (RKE1, RKE2, k3s, EKS, etc.) and version of the Local cluster:

- Online or air-gapped deployment: Online

Downstream Cluster Information

- Kubernetes version: v1.24.17

- Cluster Type (Local/Downstream): Local

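- If Downstream, what type of cluster? (Custom/Imported or Hosted, etc.):

User Information

- What is the role of the logged-in user? (Admin/Cluster Owner/Cluster Member/Project Owner/Project Member/Custom): Admin

- If Custom, the custom permission set:

Host OS: CentOS 7.6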

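Problem description: After the Rancher deployment completed, I restarted Docker. Since then, rancher-agent and rancher-server fail to start, although Kubernetes itself is working normally.

Steps to reproduce:

After the installation completes, restart the Docker service.

Result:

- rancher-server logs: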

2024/11/21 10:28:38 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:28:38 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:29:49 [ERROR] error syncing 'c-tzm6v': handler cluster-deploy: Get "https://172.17.110.83:6443/apis/apps/v1/namespaces/cattle-system/daemonsets/cattle-node-agent": cluster agent disconnected, requeuing

2024/11/21 10:30:47 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:30:47 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:32:33 [ERROR] error syncing 'c-tzm6v': handler cluster-deploy: Get "https://172.17.110.83:6443/apis/apps/v1/namespaces/cattle-system/daemonsets/cattle-node-agent": cluster agent disconnected, requeuing

2024/11/21 10:32:45 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:32:45 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:34:44 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:34:44 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:35:19 [ERROR] error syncing 'c-tzm6v': handler cluster-deploy: Get "https://172.17.110.83:6443/apis/apps/v1/namespaces/cattle-system/daemonsets/cattle-node-agent": cluster agent disconnected, requeuing

2024/11/21 10:36:59 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:36:59 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:38:03 [ERROR] error syncing 'c-tzm6v': handler cluster-deploy: Get "https://172.17.110.83:6443/apis/apps/v1/namespaces/cattle-system/daemonsets/cattle-node-agent": cluster agent disconnected, requeuing

2024/11/21 10:38:57 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:38:57 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:40:45 [ERROR] error syncing 'c-tzm6v': handler cluster-deploy: Get "https://172.17.110.83:6443/apis/apps/v1/namespaces/cattle-system/daemonsets/cattle-node-agent": cluster agent disconnected, requeuing

2024/11/21 10:41:13 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:41:13 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:43:13 [INFO] Stopping cluster agent for c-tzm6v

2024/11/21 10:43:13 [ERROR] failed to start cluster controllers c-tzm6v: context canceled

2024/11/21 10:43:28 [ERROR] error syncing 'c-tzm6v': handler cluster-deploy: Get "https://172.17.110.83:6443/apis/apps/v1/namespaces/cattle-system/daemonsets/cattle-node-agent": cluster agent disconnected, requeuing

- State of the cattle-system namespace in Kubernetes:

# kubectl get pod -n cattle-system

NAME READY STATUS RESTARTS AGE

cattle-cluster-agent-5d68fb6f84-dlzbw 0/1 CrashLoopBackOff 3991 (4m14s ago) 25d

cattle-cluster-agent-5d68fb6f84-kdwtt 0/1 CrashLoopBackOff 3991 (38s ago) 25d

cattle-node-agent-46vb4 0/1 CrashLoopBackOff 7287 (3m56s ago) 25d

cattle-node-agent-d9tc7 0/1 CrashLoopBackOff 2753 (4m53s ago) 25d

cattle-node-agent-zvkb4 1/1 Running 1 (14d ago) 25d

kube-api-auth-sph98 1/1 Running 1 (14d ago) 25d

- Logs of an agent in CrashLoopBackOff state:

# kubectl logs -f cattle-node-agent-46vb4 -n cattle-system

INFO: Environment: CATTLE_ADDRESS=172.17.110.83 CATTLE_AGENT_CONNECT=true CATTLE_CA_CHECKSUM=59d47373e00f43a89a159a7ad4d39422ee453bde24c638879144c3e2cc8c47ac CATTLE_CLUSTER=false CATTLE_CLUSTER_AGENT_PORT=tcp://10.43.134.61:80 CATTLE_CLUSTER_AGENT_PORT_443_TCP=tcp://10.43.134.61:443 CATTLE_CLUSTER_AGENT_PORT_443_TCP_ADDR=10.43.134.61 CATTLE_CLUSTER_AGENT_PORT_443_TCP_PORT=443 CATTLE_CLUSTER_AGENT_PORT_443_TCP_PROTO=tcp CATTLE_CLUSTER_AGENT_PORT_80_TCP=tcp://10.43.134.61:80 CATTLE_CLUSTER_AGENT_PORT_80_TCP_ADDR=10.43.134.61 CATTLE_CLUSTER_AGENT_PORT_80_TCP_PORT=80 CATTLE_CLUSTER_AGENT_PORT_80_TCP_PROTO=tcp CATTLE_CLUSTER_AGENT_SERVICE_HOST=10.43.134.61 CATTLE_CLUSTER_AGENT_SERVICE_PORT=80 CATTLE_CLUSTER_AGENT_SERVICE_PORT_HTTP=80 CATTLE_CLUSTER_AGENT_SERVICE_PORT_HTTPS_INTERNAL=443 CATTLE_INGRESS_IP_DOMAIN=sslip.io CATTLE_INSTALL_UUID=b34f3bbf-c3bc-4774-9028-0da1faa24044 CATTLE_INTERNAL_ADDRESS= CATTLE_K8S_MANAGED=true CATTLE_NODE_NAME=app1 CATTLE_SERVER=https://172.17.110.83:443 CATTLE_SERVER_VERSION=v2.7.1

INFO: Using resolv.conf: nameserver 211.137.160.5

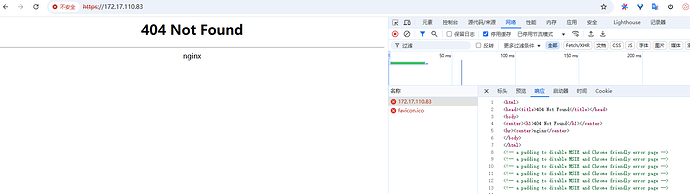

ERROR: https://172.17.110.83:443/ping is not accessible (The requested URL returned error: 404)

- Logs of the agent in Running state:

0.83 because it doesn't contain any IP SANs"

time="2024-11-21T10:48:51Z" level=error msg="Remotedialer proxy error" error="x509: cannot validate certificate for 172.17.110.83 because it doesn't contain any IP SANs"

time="2024-11-21T10:49:01Z" level=info msg="Connecting to wss://172.17.110.83:443/v3/connect with token starting with mmb5nhsscrwrjhs8z2l6h8jhs6c"

time="2024-11-21T10:49:01Z" level=info msg="Connecting to proxy" url="wss://172.17.110.83:443/v3/connect"

time="2024-11-21T10:49:01Z" level=error msg="Failed to connect to proxy. Empty dialer response" error="x509: cannot validate certificate for 172.17.110.83 because it doesn't contain any IP SANs"

time="2024-11-21T10:49:01Z" level=error msg="Remotedialer proxy error" error="x509: cannot validate certificate for 172.17.110.83 because it doesn't contain any IP SANs"

time="2024-11-21T10:49:11Z" level=info msg="Connecting to wss://172.17.110.83:443/v3/connect with token starting with mmb5nhsscrwrjhs8z2l6h8jhs6c"

time="2024-11-21T10:49:11Z" level=info msg="Connecting to proxy" url="wss://172.17.110.83:443/v3/connect"

time="2024-11-21T10:49:11Z" level=error msg="Failed to connect to proxy. Empty dialer response" error="x509: cannot validate certificate for 172.17.110.83 because it doesn't contain any IP SANs"

time="2024-11-21T10:49:11Z" level=error msg="Remotedialer proxy error" error="x509: cannot validate certificate for 172.17.110.83 because it doesn't contain any IP SANs"