Rancher Server Setup

- Rancher version:

- Installation option (Docker install/Helm Chart): Helm Chart

- If installed via Helm Chart, the Local cluster type (RKE1, RKE2, k3s, EKS, etc.) and version: RKE, version v1.2.6

- Online or air-gapped deployment: air-gapped installation

Downstream Cluster Information

- Kubernetes version:

- Cluster Type (Local/Downstream):

- If Downstream, what type of cluster? (Custom/Imported or Hosted, etc.):

- A large number of imported k3s clusters, version 1.20.4

User Information

- What is the role of the logged-in user? Users of every role experience slow, laggy logins.

Host operating system:

CentOS 7.9

问题描述:

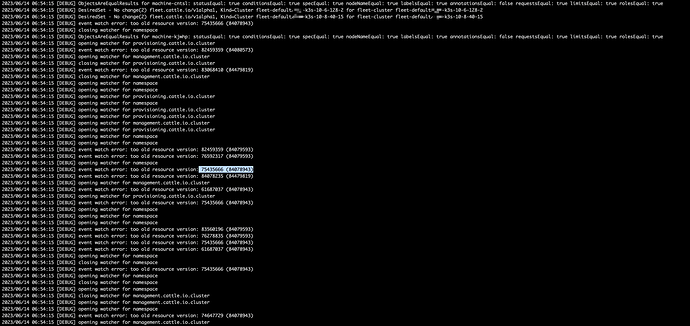

rancher 的debug 日志中,存在大量的 event watch error: too old resource version 日志,给 kubernetes 集群带来了非常高的压力,节点的 16 个核全部拉满。

Steps to reproduce:

None

Result:

None

Expected result:

Screenshots:

Additional context:

Logs

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 75435666 (84078943)

2023/06/14 06:54:15 [DEBUG] closing watcher for namespace

2023/06/14 06:54:15 [DEBUG] ObjectsAreEqualResults for machine-kjwhp: statusEqual: true conditionsEqual: true specEqual: true nodeNameEqual: true labelsEqual: true annotationsEqual: false requestsEqual: true limitsEqual: true rolesEqual: true

2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 82459359 (84080573)

2023/06/14 06:54:15 [DEBUG] opening watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] closing watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 83068410 (84479819)

2023/06/14 06:54:15 [DEBUG] closing watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 82459359 (84079593)

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 76592317 (84079593)

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 75435666 (84078943)

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 84078235 (84479819)

2023/06/14 06:54:15 [DEBUG] opening watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 61687037 (84078943)

2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 75435666 (84078943)

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 83560196 (84079593)

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 76278835 (84079593)

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 75435666 (84078943)

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 61687037 (84078943)

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] closing watcher for namespace

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 75435666 (84078943)

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] closing watcher for namespace

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] closing watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] opening watcher for namespace

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 74647729 (84078943)

2023/06/14 06:54:15 [DEBUG] opening watcher for management.cattle.io.cluster

2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 83273510 (84479819)
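
To make an excerpt like the one above easier to reason about, here is a minimal Python sketch that tallies the debug lines by kind and measures the gap between the two numbers in each `too old resource version` message. The function name `summarize` is hypothetical. Reading the first number as the resource version the watcher asked for and the second as the server-side bound it was compared against is an assumption on my part, not something stated in the log itself.

```python
import re
from collections import Counter

# Matches lines such as:
#   ... [DEBUG] event watch error: too old resource version: 75435666 (84078943)
TOO_OLD = re.compile(r"too old resource version: (\d+) \((\d+)\)")
# Matches lines such as:
#   ... [DEBUG] opening watcher for namespace
WATCHER = re.compile(r"\[DEBUG\] (opening|closing) watcher for (\S+)")


def summarize(lines):
    """Tally watcher events and collect the gap between the two versions."""
    events = Counter()
    gaps = []
    for line in lines:
        m = TOO_OLD.search(line)
        if m:
            # Assumption: first number = requested version, second = server-side bound.
            requested, bound = int(m.group(1)), int(m.group(2))
            events["too old resource version"] += 1
            gaps.append(bound - requested)
            continue
        m = WATCHER.search(line)
        if m:
            # Key looks like "opening namespace" or "closing management.cattle.io.cluster".
            events[f"{m.group(1)} {m.group(2)}"] += 1
    return events, gaps


if __name__ == "__main__":
    # A few lines copied from the excerpt above.
    sample = [
        "2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 75435666 (84078943)",
        "2023/06/14 06:54:15 [DEBUG] closing watcher for namespace",
        "2023/06/14 06:54:15 [DEBUG] opening watcher for provisioning.cattle.io.cluster",
        "2023/06/14 06:54:15 [DEBUG] event watch error: too old resource version: 82459359 (84080573)",
    ]
    events, gaps = summarize(sample)
    for name, count in events.most_common():
        print(f"{count:6d}  {name}")
    print("largest gap:", max(gaps))
```

Run against the full debug log, the per-resource counts would show which watchers are being reopened most often, and the gaps would show how far behind the rejected versions were.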

The Kubernetes apiserver log contains a large number of Start watching … entries:

[root@master ~]# ls -alh 32888ee73579338a93e2c32cd7fb3cca334994ae8302f4edde0539a2a5fab2f1-json.log.2

-rw-r----- 1 root root 977M 6月 14 15:04 32888ee73579338a93e2c32cd7fb3cca334994ae8302f4edde0539a2a5fab2f1-json.log.2

[root@master ~]# cat 32888ee73579338a93e2c32cd7fb3cca334994ae8302f4edde0539a2a5fab2f1-json.log.2 |grep "Starting watch" | wc -l

1483704

[root@master ~]#
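
The `grep | wc -l` above gives one total for the whole file. As a rough sketch, the same file could be bucketed by minute to see whether the watches arrive steadily or in bursts. This assumes the file uses Docker's json-file format, where each line is a JSON object with `log` and `time` fields; the function name `watches_per_minute` and the script name in the usage comment are hypothetical.

```python
import json
import sys
from collections import Counter


def watches_per_minute(lines, needle="Starting watch"):
    """Count log entries containing `needle`, bucketed by minute."""
    buckets = Counter()
    for raw in lines:
        try:
            entry = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip truncated or non-JSON lines
        if not isinstance(entry, dict):
            continue
        if needle in entry.get("log", ""):
            # Assumption: "time" is an RFC 3339 timestamp such as
            # 2023-06-14T07:04:00.123456789Z; the first 16 characters
            # keep it up to the minute.
            buckets[entry.get("time", "")[:16]] += 1
    return buckets


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python watch_rate.py <container-id>-json.log.2
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for minute, count in sorted(watches_per_minute(fh).items()):
            print(minute, count)
```

Reading the file line by line keeps memory use flat, which matters for a log of this size (977M).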

The apiserver configuration is as follows:

kube-apiserver --storage-backend=etcd3 --feature-gates=RemoveSelfLink=false --audit-log-format=json --audit-policy-file=/etc/kubernetes/audit-policy.yaml --proxy-client-cert-file=/etc/kubernetes/ssl/kube-apiserver-proxy-client.pem --service-account-key-file=/etc/kubernetes/ssl/kube-service-account-token-key.pem --service-cluster-ip-range=10.43.0.0/16 --v=4 --proxy-client-key-file=/etc/kubernetes/ssl/kube-apiserver-proxy-client-key.pem --insecure-port=0 --audit-log-maxage=30 --requestheader-username-headers=X-Remote-User --profiling=true --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction,Priority,TaintNodesByCondition,PersistentVolumeClaimResize --watch-cache-sizes=pod#600 --etcd-certfile=/etc/kubernetes/ssl/kube-node.pem --etcd-servers=https://10.6.124.133:2379,https://10.6.124.131:2379,https://10.6.124.132:2379 --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem --audit-log-path=/var/log/kube-audit/audit-log.json --audit-log-maxsize=100 --cloud-provider= --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem --service-account-signing-key-file=/etc/kubernetes/ssl/kube-service-account-token-key.pem --service-account-issuer=rke --service-account-lookup=true --allow-privileged=true --default-watch-cache-size=1000 --etcd-cafile=/etc/kubernetes/ssl/kube-ca.pem --etcd-keyfile=/etc/kubernetes/ssl/kube-node-key.pem --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305 --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --bind-address=0.0.0.0 --api-audiences=unknown --authorization-mode=Node,RBAC 
--requestheader-allowed-names=kube-apiserver-proxy-client --etcd-prefix=/registry --runtime-config=authorization.k8s.io/v1beta1=true --requestheader-group-headers=X-Remote-Group --anonymous-auth=false --audit-log-maxbackup=10 --delete-collection-workers=10 --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem --requestheader-client-ca-file=/etc/kubernetes/ssl/kube-apiserver-requestheader-ca.pem --requestheader-extra-headers-prefix=X-Remote-Extra- --advertise-address=10.6.124.133 --client-ca-file=/etc/kubernetes/ssl/kube-ca.pem --service-node-port-range=1-65535 --secure-port=6443
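
The command line above is long, so here is a small Python sketch for splitting it into a flag dictionary and printing only the watch-related settings and the log verbosity. The helper name `parse_flags` is hypothetical, and the `cmd` string is a shortened excerpt of the flags shown above, not the full configuration.

```python
def parse_flags(command_line):
    """Split a command line of --name=value tokens into a {name: value} dict."""
    flags = {}
    for token in command_line.split()[1:]:  # drop the binary name
        if not token.startswith("--"):
            continue
        name, _, value = token[2:].partition("=")
        flags[name] = value  # empty string when the flag has no value
    return flags


if __name__ == "__main__":
    # Shortened excerpt of the kube-apiserver flags from the post.
    cmd = (
        "kube-apiserver --storage-backend=etcd3 --v=4 "
        "--watch-cache-sizes=pod#600 --default-watch-cache-size=1000 "
        "--cloud-provider="
    )
    flags = parse_flags(cmd)
    for name in sorted(flags):
        if "watch" in name or name == "v":
            print(f"--{name}={flags[name]}")
```

Pasting the full command line into `cmd` would also make it easy to compare the settings across the three control-plane nodes.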