Environment:

Node CPU architecture, OS and version: #92-Ubuntu SMP Mon Aug 14 09:30:42 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

Cluster configuration:

Problem description:

Steps to reproduce:

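On the "Cluster Management > Clusters" page, click "Create".

Choose "Custom" and enter the cluster name on the next page.

Open "Registries" and, under Mirrors, add docker.io → https://hub-mirror.c.163.com.

Click "Create".

After creation, the UI jumps to the new cluster's "Registration" page. Tick "etcd" and "Control Plane", plus the "Insecure…" option in the registration command section.

Copy the curl command, paste it into a terminal on my freshly prepared Ubuntu host (logged in as root) and press Enter.

The terminal printed the following: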

```text
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 30869 0 30869 0 0 1866k 0 --:--:-- --:--:-- --:--:-- 1884k
[INFO] Label: cattle.io/os=linux
[INFO] Role requested: etcd
[INFO] Role requested: controlplane
[INFO] Using default agent configuration directory /etc/rancher/agent
[INFO] Using default agent var directory /var/lib/rancher/agent
[INFO] Determined CA is necessary to connect to Rancher
[INFO] Successfully downloaded CA certificate
[INFO] Value from https://xxx.com/cacerts is an x509 certificate
[INFO] Successfully tested Rancher connection
[INFO] Downloading rancher-system-agent binary from https://xxx.com/assets/rancher-system-agent-amd64
[INFO] Successfully downloaded the rancher-system-agent binary.
[INFO] Downloading rancher-system-agent-uninstall.sh script from https://xxx.com/assets/system-agent-uninstall.sh
[INFO] Successfully downloaded the rancher-system-agent-uninstall.sh script.
[INFO] Generating Cattle ID
[INFO] Successfully downloaded Rancher connection information
[INFO] systemd: Creating service file
[INFO] Creating environment file /etc/systemd/system/rancher-system-agent.env
[INFO] Enabling rancher-system-agent.service
Created symlink /etc/systemd/system/multi-user.target.wants/rancher-system-agent.service → /etc/systemd/system/rancher-system-agent.service.
[INFO] Starting/restarting rancher-system-agent.service
```

Status of the newly created rancher-system-agent.service:

```text
● rancher-system-agent.service - Rancher System Agent
     Loaded: loaded (/etc/systemd/system/rancher-system-agent.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2023-09-14 10:12:46 CST; 5min ago
       Docs: https://www.rancher.com
   Main PID: 2504 (rancher-system-)
      Tasks: 9 (limit: 9388)
     Memory: 9.3M
        CPU: 105ms
     CGroup: /system.slice/rancher-system-agent.service
             └─2504 /usr/local/bin/rancher-system-agent sentinel

Sep 14 10:12:46 zhjw-master-01 systemd[1]: Started Rancher System Agent.
Sep 14 10:12:46 zhjw-master-01 rancher-system-agent[2504]: time="2023-09-14T10:12:46+08:00" level=info msg="Rancher System Agent version v0.3.3 (9e827a5) is starting"
Sep 14 10:12:46 zhjw-master-01 rancher-system-agent[2504]: time="2023-09-14T10:12:46+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
Sep 14 10:12:46 zhjw-master-01 rancher-system-agent[2504]: time="2023-09-14T10:12:46+08:00" level=info msg="Starting remote watch of plans"
Sep 14 10:12:46 zhjw-master-01 rancher-system-agent[2504]: E0914 10:12:46.649883 2504 memcache.go:206] couldn't get resource list for management.cattle.io/v3:
Sep 14 10:12:46 zhjw-master-01 rancher-system-agent[2504]: time="2023-09-14T10:12:46+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```

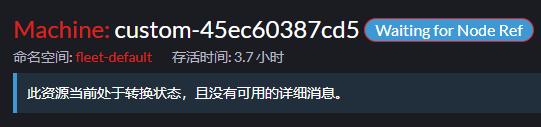

The newly registered host as shown on the Cluster Management page:

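More context is needed: what is the status of the rke2-server service on the node?

```bash
systemctl status rke2-server
```

If the service does not exist, try rebuilding the cluster: delete the cluster in the UI, then run the following command on the node to uninstall the system agent:

```bash
rancher-system-agent-uninstall.sh
```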
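The check-and-clean-up sequence above could be scripted roughly as follows. This is a minimal sketch, not a Rancher tool: `check_node` and `rke2_server_present` are hypothetical names, `SYSTEMCTL` is an override added only so the logic can be exercised with a stub, and it assumes `rancher-system-agent-uninstall.sh` is on root's `PATH`, as the command above implies. Deleting the cluster in the UI remains a manual step and should happen before the uninstall script is run.

```bash
#!/usr/bin/env bash
# Sketch: run as root on the node being registered.
# SYSTEMCTL can be overridden (e.g. with a stub); it defaults to the real systemctl.
SYSTEMCTL=${SYSTEMCTL:-systemctl}

# Succeeds if systemd knows about an rke2-server unit on this node.
rke2_server_present() {
  "$SYSTEMCTL" cat rke2-server.service >/dev/null 2>&1
}

# Hypothetical helper: show rke2-server status if the unit exists,
# otherwise print the clean-up steps and return non-zero.
check_node() {
  if rke2_server_present; then
    "$SYSTEMCTL" status rke2-server --no-pager
  else
    echo "rke2-server is not installed"
    echo "1) delete the cluster in the Rancher UI"
    echo "2) run: rancher-system-agent-uninstall.sh"
    return 1
  fi
}
```

Usage: run `check_node`; only if it reports the unit as missing, delete the cluster in the UI and then run the uninstall script by hand.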

I deleted it as you said and repeated the steps above, but there is still no rke2-server. Did I miss something?

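Try enabling debug logging for rancher-system-agent, then restart rancher-system-agent:

```bash
# Append the debug variables to the agent's environment file
cat >>/etc/systemd/system/rancher-system-agent.env <<EOF
RANCHER_DEBUG=true
CATTLE_DEBUG=true
CATTLE_LOGLEVEL=debug
EOF
# Restart so the agent picks up the new variables
systemctl restart rancher-system-agent
```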
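Running the `cat >>` above twice leaves duplicate lines in the env file. A slightly more defensive variant is sketched below; `set_env_var`, `enable_agent_debug` and `restart_agent_and_show_log` are hypothetical helper names, and the env-file path is the one printed by the install script. It reads the log with `journalctl --no-pager`, because the agent log lines later in this thread end in `>`, which is most likely the pager marking lines it chopped at the screen edge.

```bash
#!/usr/bin/env bash
# Sketch: idempotently enable rancher-system-agent debug logging (run as root on the node).

# Set KEY=VALUE in an env file, replacing an existing KEY line instead of appending a duplicate.
set_env_var() {
  local file=$1 key=$2 value=$3
  touch "$file"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Write the three debug variables suggested above into the agent's env file.
enable_agent_debug() {
  local env_file=${1:-/etc/systemd/system/rancher-system-agent.env}
  set_env_var "$env_file" RANCHER_DEBUG true
  set_env_var "$env_file" CATTLE_DEBUG true
  set_env_var "$env_file" CATTLE_LOGLEVEL debug
}

# Restart the agent and print recent log lines without a pager, so long lines are not chopped.
restart_agent_and_show_log() {
  systemctl restart rancher-system-agent
  journalctl -u rancher-system-agent --no-pager -n 100
}
```

Usage: `enable_agent_debug && restart_agent_and_show_log`. Re-running `enable_agent_debug` rewrites the same three lines instead of adding new ones.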

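Also, what does the network environment look like? Does the node have Internet access? You mentioned using a mirror; please post the configuration so we can take a look.

Should the rancher-system-agent debug logging be enabled on the host I'm trying to register? Here is the cluster YAML: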

```yaml
apiVersion: provisioning.cattle.io/v1
kind: Cluster
metadata:
  annotations:
    field.cattle.io/creatorId: user-xkmj8
    field.cattle.io/description: xx集群  # "xx cluster"
  creationTimestamp: '2023-09-14T06:11:48Z'
  finalizers:
    - wrangler.cattle.io/provisioning-cluster-remove
    - wrangler.cattle.io/rke-cluster-remove
    - wrangler.cattle.io/cloud-config-secret-remover
  generation: 2
  labels:
    location: zhjw
  managedFields:
    - apiVersion: provisioning.cattle.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            .: {}
            f:field.cattle.io/description: {}
          f:finalizers:
            .: {}
            v:"wrangler.cattle.io/provisioning-cluster-remove": {}
            v:"wrangler.cattle.io/rke-cluster-remove": {}
          f:labels:
            .: {}
            f:location: {}
        f:spec:
          .: {}
          f:kubernetesVersion: {}
          f:localClusterAuthEndpoint: {}
          f:rkeConfig:
            .: {}
            f:chartValues:
              .: {}
              f:rke2-calico: {}
            f:etcd:
              .: {}
              f:snapshotRetention: {}
              f:snapshotScheduleCron: {}
            f:machineGlobalConfig:
              .: {}
              f:cni: {}
              f:disable-kube-proxy: {}
              f:etcd-expose-metrics: {}
            f:machinePoolDefaults: {}
            f:machineSelectorConfig: {}
            f:registries:
              .: {}
              f:mirrors:
                .: {}
                f:docker.io:
                  .: {}
                  f:endpoint: {}
            f:upgradeStrategy:
              .: {}
              f:controlPlaneConcurrency: {}
              f:controlPlaneDrainOptions:
                .: {}
                f:deleteEmptyDirData: {}
                f:disableEviction: {}
                f:enabled: {}
                f:force: {}
                f:gracePeriod: {}
                f:ignoreDaemonSets: {}
                f:ignoreErrors: {}
                f:postDrainHooks: {}
                f:preDrainHooks: {}
                f:skipWaitForDeleteTimeoutSeconds: {}
                f:timeout: {}
              f:workerConcurrency: {}
              f:workerDrainOptions:
                .: {}
                f:deleteEmptyDirData: {}
                f:disableEviction: {}
                f:enabled: {}
                f:force: {}
                f:gracePeriod: {}
                f:ignoreDaemonSets: {}
                f:ignoreErrors: {}
                f:postDrainHooks: {}
                f:preDrainHooks: {}
                f:skipWaitForDeleteTimeoutSeconds: {}
                f:timeout: {}
      manager: rancher
      operation: Update
      time: '2023-09-14T06:11:48Z'
    - apiVersion: provisioning.cattle.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:finalizers:
            v:"wrangler.cattle.io/cloud-config-secret-remover": {}
      manager: rancher-v2.7.6-secret-migrator
      operation: Update
      time: '2023-09-14T06:11:48Z'
    - apiVersion: provisioning.cattle.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:status:
          .: {}
          f:clusterName: {}
          f:conditions: {}
          f:observedGeneration: {}
      manager: rancher
      operation: Update
      subresource: status
      time: '2023-09-14T06:12:00Z'
  name: zhjw
  namespace: fleet-default
  resourceVersion: '3888313'
  uid: ea34cf7c-9267-49ed-a1dd-445870127d5d
spec:
  kubernetesVersion: v1.26.8+rke2r1
  localClusterAuthEndpoint: {}
  rkeConfig:
    chartValues:
      rke2-calico: {}
    etcd:
      snapshotRetention: 5
      snapshotScheduleCron: 0 */5 * * *
    machineGlobalConfig:
      cni: calico
      disable-kube-proxy: false
      etcd-expose-metrics: false
    machinePoolDefaults: {}
    machineSelectorConfig:
      - config:
          protect-kernel-defaults: false
    registries:
      mirrors:
        docker.io:
          endpoint:
            - https://hub-mirror.c.163.com
    upgradeStrategy:
      controlPlaneConcurrency: '1'
      controlPlaneDrainOptions:
        deleteEmptyDirData: true
        disableEviction: false
        enabled: false
        force: false
        gracePeriod: -1
        ignoreDaemonSets: true
        ignoreErrors: false
        postDrainHooks: null
        preDrainHooks: null
        skipWaitForDeleteTimeoutSeconds: 0
        timeout: 120
      workerConcurrency: '1'
      workerDrainOptions:
        deleteEmptyDirData: true
        disableEviction: false
        enabled: false
        force: false
        gracePeriod: -1
        ignoreDaemonSets: true
        ignoreErrors: false
        postDrainHooks: null
        preDrainHooks: null
        skipWaitForDeleteTimeoutSeconds: 0
        timeout: 120
status:
  clusterName: c-m-9pr99v4q
  conditions:
    - lastUpdateTime: '2023-09-14T06:11:48Z'
      reason: Reconciling
      status: 'True'
      type: Reconciling
    - lastUpdateTime: '2023-09-14T06:11:48Z'
      status: 'False'
      type: Stalled
    - lastUpdateTime: '2023-09-14T06:12:00Z'
      status: 'True'
      type: Created
    - lastUpdateTime: '2023-09-14T06:11:50Z'
      status: 'True'
      type: RKECluster
    - lastUpdateTime: '2023-09-14T06:11:48Z'
      status: 'True'
      type: BackingNamespaceCreated
    - lastUpdateTime: '2023-09-14T06:11:48Z'
      status: 'True'
      type: DefaultProjectCreated
    - lastUpdateTime: '2023-09-14T06:11:48Z'
      status: 'True'
      type: SystemProjectCreated
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: InitialRolesPopulated
    - lastUpdateTime: '2023-09-14T06:11:50Z'
      message: >-
        waiting for at least one control plane, etcd, and worker node to be
        registered
      reason: Waiting
      status: Unknown
      type: Updated
    - lastUpdateTime: '2023-09-14T06:11:50Z'
      message: >-
        waiting for at least one control plane, etcd, and worker node to be
        registered
      reason: Waiting
      status: Unknown
      type: Provisioned
    - lastUpdateTime: '2023-09-14T06:11:50Z'
      message: >-
        waiting for at least one control plane, etcd, and worker node to be
        registered
      reason: Waiting
      status: Unknown
      type: Ready
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: CreatorMadeOwner
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: NoDiskPressure
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: NoMemoryPressure
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: SecretsMigrated
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: ServiceAccountSecretsMigrated
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: RKESecretsMigrated
    - lastUpdateTime: '2023-09-14T06:11:49Z'
      status: 'True'
      type: ACISecretsMigrated
    - lastUpdateTime: '2023-09-14T06:12:00Z'
      status: 'False'
      type: Connected
  observedGeneration: 2
```

I enabled debug and restarted the service; the log output is now:

```text
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:18+08:00" level=info msg="Rancher System Agent version v0.3.3 (9e827a5) is starting"
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:18+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:18+08:00" level=debug msg="Instantiated new image utility with imagesDir: /var/lib/rancher/agent/images, imageCredentialProvider>
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:18+08:00" level=info msg="Starting remote watch of plans"
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: E0914 14:24:18.758795 1995 memcache.go:206] couldn't get resource list for management.cattle.io/v3:
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:18+08:00" level=info msg="Starting /v1, Kind=Secret controller"
Sep 14 14:24:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:18+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:23 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:23+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:28 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:28+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:33 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:33+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:38 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:38+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:43 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:43+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:48 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:48+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:53 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:53+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:24:58 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:24:58+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:03 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:03+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:08 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:08+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:13 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:13+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:18+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:23 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:23+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:28 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:28+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:33 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:33+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:38 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:38+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:43 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:43+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:48 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:48+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:53 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:53+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:25:58 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:25:58+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:03 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:03+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:08 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:08+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:13 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:13+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:18 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:18+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:23 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:23+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:28 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:28+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:33 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:33+08:00" level=debug msg="[K8s] Processing secret custom-ce4edce91539-machine-plan in namespace fleet-default at generation 0 w>
Sep 14 14:26:38 zhjw-master-01 rancher-system-agent[1995]: time="2023-09-14T14:26:38+08:00" level=debug msg="[K8s] Processing secret custo
```

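At this point, Rancher's own debug log needs to be enabled for troubleshooting. If Rancher was started with `docker run`, run:

```bash
docker exec -it <rancher-container-id> loglevel --set debug
```

Then capture roughly 5 minutes of logs and post them here.

Also, is the node under the newly created cluster still in the `waiting for nodeRef` state?

Yes, it is still `waiting for nodeRef`.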

It is Rancher's debug log that needs enabling, so the following is run inside the Rancher container:

```bash
loglevel --set debug
```

Are these the logs you mean?

```text
2023/09/14 07:09:04 [DEBUG] Wrote ping
2023/09/14 07:09:04 [DEBUG] Wrote ping
2023/09/14 07:09:09 [DEBUG] Wrote ping
2023/09/14 07:09:09 [DEBUG] Wrote ping
2023/09/14 07:09:10 [DEBUG] DesiredSet - No change(2) provisioning.cattle.io/v1, Kind=Cluster fleet-local/local for provisioning-cluster-create local
2023/09/14 07:09:10 [DEBUG] local(6e8a0875-36db-489c-b69f-63e3ce2907b5): (2566853955 * 3) / 4294967295 = [10.42.0.98 10.42.1.68 10.42.2.85][1] = 10.42.1.68, self = 10.42.2.85
2023/09/14 07:09:10 [DEBUG] DesiredSet - No change(2) management.cattle.io/v3, Kind=ClusterRoleTemplateBinding local/local-fleet-local-owner for cluster-create fleet-local/local
2023/09/14 07:09:10 [DEBUG] DesiredSet - No change(2) fleet.cattle.io/v1alpha1, Kind=ClusterGroup fleet-local/default for fleet-cluster fleet-local/local
2023/09/14 07:09:10 [DEBUG] DesiredSet - No change(2) fleet.cattle.io/v1alpha1, Kind=Cluster fleet-local/local for fleet-cluster fleet-local/local
2023/09/14 07:08:59 [DEBUG] Wrote ping
2023/09/14 07:08:59 [DEBUG] Wrote ping
2023/09/14 07:09:04 [DEBUG] Wrote ping
2023/09/14 07:09:04 [DEBUG] Wrote ping
2023/09/14 07:09:09 [DEBUG] Wrote ping
2023/09/14 07:09:09 [DEBUG] Wrote ping
2023/09/14 07:09:10 [DEBUG] ObjectsAreEqualResults for machine-264fk: statusEqual: true conditionsEqual: false specEqual: true nodeNameEqual: true labelsEqual: true annotationsEqual: true requestsEqual: true limitsEqual: true rolesEqual: true
2023/09/14 07:09:10 [DEBUG] ObjectsAreEqualResults for machine-264fk: statusEqual: true conditionsEqual: false specEqual: true nodeNameEqual: true labelsEqual: true annotationsEqual: true requestsEqual: true limitsEqual: true rolesEqual: true
2023/09/14 07:09:10 [DEBUG] Updating machine for node [rancher-01]
2023/09/14 07:09:10 [DEBUG] Updated machine for node [rancher-01]
2023/09/14 07:08:49 [DEBUG] Wrote ping
2023/09/14 07:08:49 [DEBUG] Wrote ping
2023/09/14 07:08:54 [DEBUG] Wrote ping
2023/09/14 07:08:54 [DEBUG] Wrote ping
2023/09/14 07:08:59 [DEBUG] Wrote ping
2023/09/14 07:08:59 [DEBUG] Wrote ping
2023/09/14 07:09:04 [DEBUG] Wrote ping
2023/09/14 07:09:04 [DEBUG] Wrote ping
2023/09/14 07:09:09 [DEBUG] Wrote ping
2023/09/14 07:09:09 [DEBUG] Wrote ping
```

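Do I need to install anything before running the registration command provided by the Rancher UI? My host is a fresh Ubuntu install with nothing else on it.

It looks like you have a high-availability Rancher deployment. Try enabling debug logging in every Rancher container and collect the logs from all of them.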
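A sketch of how that could be done when Rancher runs as pods in the local cluster (the three `10.42.x.x` replica IPs in these logs suggest a Helm install in `cattle-system`, but that is an assumption). It loops over the pods labelled `app=rancher` and runs `loglevel --set` in each pod's `rancher` container, similar to the per-pod loop in Rancher's troubleshooting docs. `set_rancher_loglevel` is a hypothetical name, and `KUBECTL` exists only so the loop can be tested with a stub.

```bash
#!/usr/bin/env bash
# Sketch: set the log level on every Rancher replica (assumes a Helm install in cattle-system).
KUBECTL=${KUBECTL:-kubectl}
NS=cattle-system

# List Rancher pod names, one per line.
rancher_pods() {
  "$KUBECTL" -n "$NS" get pods -l app=rancher --no-headers -o custom-columns=name:.metadata.name
}

# Run `loglevel --set <level>` inside the rancher container of each pod.
set_rancher_loglevel() {
  local level=${1:-debug} pod
  rancher_pods | while read -r pod; do
    echo "== $pod"
    # </dev/null keeps kubectl from reading the pod list that feeds this loop
    "$KUBECTL" -n "$NS" exec "$pod" -c rancher -- loglevel --set "$level" </dev/null
  done
}
```

Usage: `set_rancher_loglevel debug` turns debug on for every replica; `set_rancher_loglevel info` turns it back off once the logs are collected.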

I've enabled debug in all three containers; here are the logs just printed:

```text
2023/09/14 07:26:19 [DEBUG] Wrote ping
2023/09/14 07:26:24 [DEBUG] Wrote ping
2023/09/14 07:26:24 [DEBUG] Wrote ping
2023/09/14 07:26:29 [DEBUG] Wrote ping
2023/09/14 07:26:29 [DEBUG] Wrote ping
HEAD is now at f2ea576 Merge pull request #882 from nflondo/main
HEAD is now at 3c78b5a Update Cilium to v1.14.1
2023/09/14 07:26:34 [DEBUG] Wrote ping
2023/09/14 07:26:34 [DEBUG] Wrote ping
2023/09/14 07:26:39 [DEBUG] Wrote ping
2023/09/14 07:26:29 [DEBUG] Triggering auth refresh on u-vrtps3d7wq
2023/09/14 07:26:29 [DEBUG] Searching for providerID for selector rke.cattle.io/machine=0121e450-6231-4dab-8b08-815cf3963258 in cluster fleet-default/zhjw, machine custom-ce4edce91539: {"Code":{"Code":"Forbidden","Status":403},"Message":"clusters.management.cattle.io \"c-m-9pr99v4q\" is forbidden: User \"u-vrtps3d7wq\" cannot get resource \"clusters\" in API group \"management.cattle.io\" at the cluster scope","Cause":null,"FieldName":""} (get nodes)
2023/09/14 07:26:29 [DEBUG] Skipping refresh for system-user u-vrtps3d7wq
HEAD is now at f2ea576 Merge pull request #882 from nflondo/main
HEAD is now at 3c78b5a Update Cilium to v1.14.1
2023/09/14 07:26:33 [DEBUG] local(6e8a0875-36db-489c-b69f-63e3ce2907b5): (2566853955 * 3) / 4294967295 = [10.42.0.98 10.42.1.68 10.42.2.85][1] = 10.42.1.68, self = 10.42.2.85
2023/09/14 07:26:34 [DEBUG] Wrote ping
2023/09/14 07:26:34 [DEBUG] Wrote ping
2023/09/14 07:26:39 [DEBUG] Wrote ping
2023/09/14 07:26:29 [DEBUG] local(6e8a0875-36db-489c-b69f-63e3ce2907b5): (2566853955 * 3) / 4294967295 = [10.42.0.98 10.42.1.68 10.42.2.85][1] = 10.42.1.68, self = 10.42.1.68
2023/09/14 07:26:29 [DEBUG] Wrote ping
2023/09/14 07:26:29 [DEBUG] Wrote ping
HEAD is now at f2ea576 Merge pull request #882 from nflondo/main
HEAD is now at 3c78b5a Update Cilium to v1.14.1
2023/09/14 07:26:34 [DEBUG] Wrote ping
2023/09/14 07:26:34 [DEBUG] Wrote ping
```
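The `local(...)` debug lines appear to show how the three Rancher replicas divide ownership of a cluster. Reading the formula straight off the log line (an interpretation of the output, not taken from Rancher's source): a hash value is multiplied by the number of peers, divided by 4294967295 (2^32 - 1), and the integer result indexes the peer list. Checking that arithmetic for the `local` cluster:

```bash
#!/usr/bin/env bash
# Worked check of the log line:
#   (2566853955 * 3) / 4294967295 = [10.42.0.98 10.42.1.68 10.42.2.85][1] = 10.42.1.68
hash=2566853955                          # value printed for cluster "local"
max=4294967295                           # 2^32 - 1
peers=(10.42.0.98 10.42.1.68 10.42.2.85) # peer list from the same log line
idx=$(( hash * ${#peers[@]} / max ))     # integer division: 7700561865 / 4294967295 -> 1
echo "index=$idx owner=${peers[$idx]}"   # prints: index=1 owner=10.42.1.68
```

If this reading is right, `local` is owned by the replica at 10.42.1.68, which is why only the line with `self = 10.42.1.68` names itself as the owner, while the replica logging `self = 10.42.2.85` does not.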

> DreamParadise:
> 10.42.2.85

More of the Rancher log from this node is needed to continue troubleshooting. Please save this container's log to a file and post it.
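Assuming the same pod-based install as above, the replica with a given pod IP can be found with a `status.podIP` field selector and its log written to a file named after the IP, matching the `10.42.2.85.log` file mentioned in the reply. `save_rancher_log_by_ip` is a hypothetical helper, and the default `--since=10m` window is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: save the log of the Rancher pod that has a given pod IP (assumes cattle-system).
KUBECTL=${KUBECTL:-kubectl}
NS=cattle-system

save_rancher_log_by_ip() {
  local ip=$1 since=${2:-10m} pod
  # Find the Rancher pod whose status.podIP matches; an empty result means no match.
  pod=$("$KUBECTL" -n "$NS" get pods -l app=rancher \
        --field-selector "status.podIP=$ip" -o jsonpath='{.items[*].metadata.name}')
  if [ -z "$pod" ]; then
    echo "no Rancher pod with IP $ip" >&2
    return 1
  fi
  # Write the rancher container's recent log to <ip>.log in the current directory.
  "$KUBECTL" -n "$NS" logs "$pod" -c rancher --since="$since" > "$ip.log"
}
```

Usage: `save_rancher_log_by_ip 10.42.2.85` produces `10.42.2.85.log`; pass a second argument such as `30m` for a longer window.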

It seems files can't be uploaded here directly; please open the link below: 10.42.2.85.log

I need the output of these two commands; run them against the local cluster:

```bash
kubectl get configmap -n kube-system cattle-controllers -o yaml
kubectl get lease -n kube-system cattle-controllers -o yaml
```

ConfigMap output:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  annotations:
    control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"rancher-f965dc5b4-stxnb","leaseDurationSeconds":45,"acquireTime":"2023-09-11T08:52:15Z","renewTime":"2023-09-14T09:13:09Z","leaderTransitions":7}'
  creationTimestamp: "2023-09-09T02:57:15Z"
  name: cattle-controllers
  namespace: kube-system
  resourceVersion: "3969068"
  uid: 95aed5c8-9152-4989-a5a8-41995c54ee5e
```

Lease output:

```yaml
apiVersion: coordination.k8s.io/v1
kind: Lease
metadata:
  creationTimestamp: "2023-09-09T02:57:15Z"
  name: cattle-controllers
  namespace: kube-system
  resourceVersion: "3969520"
  uid: 30922171-7a35-4518-8c3d-d41c9d9c83be
spec:
  acquireTime: "2023-09-11T08:52:15.000000Z"
  holderIdentity: rancher-f965dc5b4-stxnb
  leaseDurationSeconds: 45
  leaseTransitions: 7
  renewTime: "2023-09-14T09:14:09.688841Z"
```
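Both outputs name `rancher-f965dc5b4-stxnb` as the current holder of the `cattle-controllers` lock. To tell which replica IP that is, for example to compare it with the `self = ...` addresses in the debug logs, the holder can be resolved to its pod. This sketch assumes the holder identity is a pod name in `cattle-system`, as it appears to be here; `rancher_leader_ip` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: resolve the cattle-controllers lease holder to a Rancher pod IP.
KUBECTL=${KUBECTL:-kubectl}

rancher_leader_ip() {
  local leader
  # holderIdentity of the lease, e.g. rancher-f965dc5b4-stxnb
  leader=$("$KUBECTL" -n kube-system get lease cattle-controllers -o jsonpath='{.spec.holderIdentity}')
  [ -n "$leader" ] || return 1
  # Assumes the holder identity is a pod name in cattle-system; prints "<pod> <podIP>".
  printf '%s ' "$leader"
  "$KUBECTL" -n cattle-system get pod "$leader" -o jsonpath='{.status.podIP}'
  echo
}
```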

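After troubleshooting offline, it turned out the cluster could not initialize because only etcd and control plane nodes had been added. The cluster only starts its initialization process once an additional node with the worker role is registered.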
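This matches the cluster status posted earlier, whose `Updated`, `Provisioned` and `Ready` conditions all read `waiting for at least one control plane, etcd, and worker node to be registered`. A tiny, hypothetical pre-flight check over the roles you plan to register makes the requirement explicit. It takes one argument per node, with roles comma-separated and named as in the agent's `Role requested:` log lines (`etcd`, `controlplane`) plus `worker`; `missing_roles` is an illustrative name, not part of Rancher.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: which of the three required roles are not yet covered?
# Usage: missing_roles "etcd,controlplane" "worker"   (one argument per node)
missing_roles() {
  local all r
  local -a missing=()
  all=",$(IFS=,; printf '%s' "$*"),"   # join every node's roles into ",a,b,c,"
  for r in etcd controlplane worker; do
    [[ $all == *",$r,"* ]] || missing+=("$r")
  done
  echo "${missing[*]}"                 # empty output means every role is covered
}

missing_roles "etcd,controlplane"            # prints: worker
missing_roles "etcd,controlplane" "worker"   # prints an empty line
```

In the UI, covering the missing role means ticking "Worker" when copying the registration command for the additional node.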
