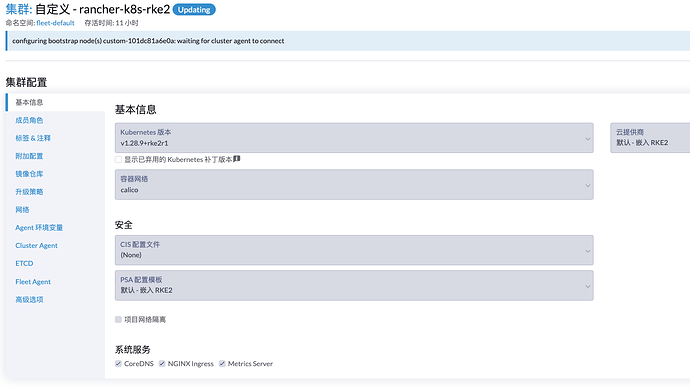

Rancher Server setup

- Rancher version: v1.28.9+k3s1

- Installation option (Docker install/Helm Chart): Helm Chart

- If Helm Chart, the type (RKE1, RKE2, k3s, EKS, etc.) and version of the Local cluster:

- Online or air-gapped deployment: Online

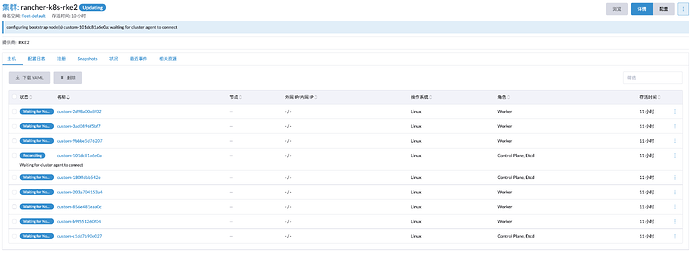

Downstream cluster information

- Kubernetes version: v1.28.9+rke2r1

- Cluster Type (Local/Downstream): Downstream

- If Downstream, what type of cluster? (Custom/Imported/Hosted, etc.): Custom

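User information

- What is the role of the logged-in user? (Admin/Cluster Owner/Cluster Member/Project Owner/Project Member/Custom): admin

- If Custom, the custom permission set: admin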

Host operating system:

Oracle Linux Server release 8.9

Issue description:

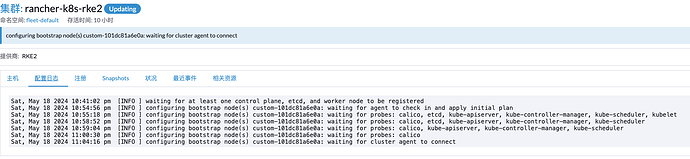

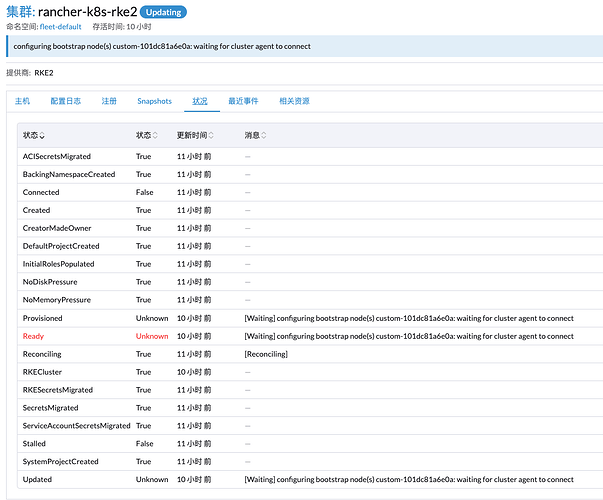

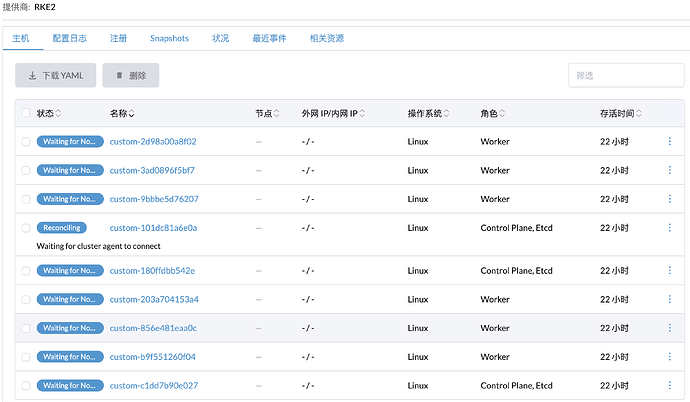

Creating a new cluster never succeeds.

Steps to reproduce:

All settings were left at their default values.

Result:

Expected result:

Screenshots:

Additional context:

Logs

Master:

```
[root@rancher-k8s-m01 ~]# journalctl -eu rancher-system-agent -f
-- Logs begin at Sat 2024-05-18 22:13:05 CST. --
May 18 22:35:23 rancher-k8s-m01 systemd[1]: Started Rancher System Agent.
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Rancher System Agent version v0.3.6 (41c07d0) is starting"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting remote watch of plans"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```

```
[root@rancher-k8s-m01 ~]# journalctl -xefu rancher-system-agent.service
-- Logs begin at Sat 2024-05-18 22:13:05 CST. --
May 18 22:35:23 rancher-k8s-m01 systemd[1]: Started Rancher System Agent.
-- Subject: Unit rancher-system-agent.service has finished start-up
-- Defined-By: systemd
-- Support: https://support.oracle.com
--
-- Unit rancher-system-agent.service has finished starting up.
--
-- The start-up result is done.
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Rancher System Agent version v0.3.6 (41c07d0) is starting"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting remote watch of plans"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```

```
[root@rancher-k8s-m01 ~]# systemctl status rancher-system-agent.service -l
● rancher-system-agent.service - Rancher System Agent
   Loaded: loaded (/etc/systemd/system/rancher-system-agent.service; enabled; vendor preset: disabled)
   Active: active (running) since Sat 2024-05-18 22:35:23 CST; 11h ago
     Docs: https://www.rancher.com
 Main PID: 1807 (rancher-system-)
    Tasks: 12 (limit: 102046)
   Memory: 36.8M
   CGroup: /system.slice/rancher-system-agent.service
           └─1807 /usr/local/bin/rancher-system-agent sentinel

May 18 22:35:23 rancher-k8s-m01 systemd[1]: Started Rancher System Agent.
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Rancher System Agent version v0.3.6 (41c07d0) is starting"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting remote watch of plans"
May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```

Worker:

```
[root@rancher-k8s-w01 ~]# journalctl -eu rancher-system-agent -f
-- Logs begin at Sat 2024-05-18 22:15:51 CST. --
May 18 22:36:55 rancher-k8s-w01 systemd[1]: Started Rancher System Agent.
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Rancher System Agent version v0.3.6 (41c07d0) is starting"
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Starting remote watch of plans"
May 18 22:36:56 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:56+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```


```
[root@rancher-k8s-w01 ~]# journalctl -xefu rancher-system-agent.service
-- Logs begin at Sat 2024-05-18 22:15:51 CST. --
May 18 22:36:55 rancher-k8s-w01 systemd[1]: Started Rancher System Agent.
-- Subject: Unit rancher-system-agent.service has finished start-up
-- Defined-By: systemd
-- Support: https://support.oracle.com
--
-- Unit rancher-system-agent.service has finished starting up.
--
-- The start-up result is done.
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Rancher System Agent version v0.3.6 (41c07d0) is starting"
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Starting remote watch of plans"
May 18 22:36:56 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:56+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```


```
[root@rancher-k8s-w01 ~]# systemctl status rancher-system-agent.service -l
● rancher-system-agent.service - Rancher System Agent
   Loaded: loaded (/etc/systemd/system/rancher-system-agent.service; enabled; vendor preset: disabled)
   Active: active (running) since Sat 2024-05-18 22:36:55 CST; 11h ago
     Docs: https://www.rancher.com
 Main PID: 1807 (rancher-system-)
    Tasks: 13 (limit: 102046)
   Memory: 36.2M
   CGroup: /system.slice/rancher-system-agent.service
           └─1807 /usr/local/bin/rancher-system-agent sentinel

May 18 22:36:55 rancher-k8s-w01 systemd[1]: Started Rancher System Agent.
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Rancher System Agent version v0.3.6 (41c07d0) is starting"
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Using directory /var/lib/rancher/agent/work for work"
May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Starting remote watch of plans"
May 18 22:36:56 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:56+08:00" level=info msg="Starting /v1, Kind=Secret controller"
```
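On both nodes the `rancher-system-agent` journal ends at `Starting /v1, Kind=Secret controller`, and every agent entry is `level=info`. As a hedged aid for comparing saved journal output across nodes, the Python sketch below reports the last agent message and the log levels seen per host. It assumes the journald prefix (`<date> <host> rancher-system-agent[<pid>]:`) and the `time=... level=... msg=...` fields exactly as they appear above; the names `AGENT_LINE`, `last_agent_messages`, and `SAMPLE` are illustrative and not part of any Rancher tooling.

```python
import re
from typing import Dict, List, Set, Tuple

# Assumed line shape, copied from the journalctl output above:
#   May 18 22:35:23 <host> rancher-system-agent[<pid>]: time="..." level=info msg="..."
AGENT_LINE = re.compile(
    r'(?P<host>\S+) rancher-system-agent\[\d+\]: '
    r'time="(?P<time>[^"]*)" level=(?P<level>\w+) msg="(?P<msg>[^"]*)"'
)


def last_agent_messages(lines: List[str]) -> Dict[str, Tuple[str, Set[str]]]:
    """Map each host to (its most recent agent msg, the set of log levels it used).

    Lines are assumed to be in chronological order, as journalctl prints them.
    Non-agent lines (e.g. systemd's "Started ..." entries) are skipped.
    """
    result: Dict[str, Tuple[str, Set[str]]] = {}
    for line in lines:
        m = AGENT_LINE.search(line)
        if not m:
            continue
        host = m.group("host")
        _, levels = result.get(host, ("", set()))
        levels.add(m.group("level"))
        result[host] = (m.group("msg"), levels)
    return result


# A few lines taken from the master and worker logs above.
SAMPLE = [
    'May 18 22:35:23 rancher-k8s-m01 systemd[1]: Started Rancher System Agent.',
    'May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting remote watch of plans"',
    'May 18 22:35:23 rancher-k8s-m01 rancher-system-agent[1807]: time="2024-05-18T22:35:23+08:00" level=info msg="Starting /v1, Kind=Secret controller"',
    'May 18 22:36:55 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:55+08:00" level=info msg="Starting remote watch of plans"',
    'May 18 22:36:56 rancher-k8s-w01 rancher-system-agent[1807]: time="2024-05-18T22:36:56+08:00" level=info msg="Starting /v1, Kind=Secret controller"',
]

if __name__ == "__main__":
    for host, (msg, levels) in last_agent_messages(SAMPLE).items():
        print(host, "|", msg, "|", sorted(levels))
```

Run against the complete journals above, it would only restate what they already show: each agent started watching for plans and logged nothing afterwards.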

Rancher:

```
[root@k3s1-lb ~]# kubectl logs -f -l app=rancher -n cattle-system
W0519 02:19:36.105141 34 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:19:36.105196 34 transport.go:301] Unable to cancel request for *client.addQuery
2024/05/19 02:20:02 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:22:02 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:24:02 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
W0519 02:24:07.982224 34 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:24:12.266114 34 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:24:35.911570 34 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:24:35.922906 34 transport.go:301] Unable to cancel request for *client.addQuery
2024/05/19 02:26:02 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
W0519 02:22:52.108637 33 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:22:52.111345 33 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:22:52.111708 33 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:22:52.113049 33 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:22:52.114068 33 transport.go:301] Unable to cancel request for *client.addQuery
2024/05/19 02:08:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:10:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:12:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:14:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:16:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:18:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:20:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
W0519 02:22:52.114835 33 transport.go:301] Unable to cancel request for *client.addQuery
W0519 02:23:55.437655 33 warnings.go:80] cluster.x-k8s.io/v1alpha3 Machine is deprecated; use cluster.x-k8s.io/v1beta1 Machine
2024/05/19 02:24:39 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
W0519 02:25:12.856881 33 warnings.go:80] cluster.x-k8s.io/v1alpha3 MachineHealthCheck is deprecated; use cluster.x-k8s.io/v1beta1 MachineHealthCheck
W0519 02:25:36.873816 33 warnings.go:80] cluster.x-k8s.io/v1alpha3 Cluster is deprecated; use cluster.x-k8s.io/v1beta1 Cluster
2024/05/19 02:22:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:24:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:26:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:26:39 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
W0519 02:27:47.944821 33 warnings.go:80] cluster.x-k8s.io/v1alpha3 MachineDeployment is deprecated; use cluster.x-k8s.io/v1beta1 MachineDeployment
W0519 02:28:00.862572 33 warnings.go:80] cluster.x-k8s.io/v1alpha3 MachineSet is deprecated; use cluster.x-k8s.io/v1beta1 MachineSet
2024/05/19 02:28:02 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
2024/05/19 02:28:00 [ERROR] error syncing '_all_': handler user-controllers-controller: userControllersController: failed to set peers for key _all_: failed to start user controllers for cluster c-m-wxhvwlnf: ClusterUnavailable 503: cluster not found, requeuing
```
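The Rancher output above was collected with `kubectl logs -f -l app=rancher`, and its timestamps are not monotonic (`02:26:02` is followed by `02:22:52`, and later by `02:08:00`), which suggests lines from more than one Rancher pod are interleaved. It also mixes two line formats: klog-style warnings such as `W0519 02:19:36.105141 ...` and `2024/05/19 02:20:02 [ERROR] ...` lines. The Python sketch below, assuming only those two formats, merges the lines into time order and counts the `ClusterUnavailable 503: cluster not found` errors per cluster ID. klog lines carry no year, so the sketch assumes 2024 from the other timestamps; `parse_line`, `summarize`, and the sample data are hypothetical helpers, not Rancher functionality.

```python
import re
from collections import Counter
from datetime import datetime
from typing import List, Optional, Tuple

# klog-style header, e.g. "W0519 02:19:36.105141 34 transport.go:301] ..."
KLOG = re.compile(
    r"^(?P<sev>[IWEF])(?P<mmdd>\d{4}) (?P<hms>\d{2}:\d{2}:\d{2})\.\d+\s+\d+ (?P<rest>.*)$"
)
# Bracketed-level lines, e.g. "2024/05/19 02:20:02 [ERROR] ..."
BRACKET_LOG = re.compile(
    r"^(?P<date>\d{4}/\d{2}/\d{2}) (?P<hms>\d{2}:\d{2}:\d{2}) \[(?P<sev>\w+)\] (?P<rest>.*)$"
)
CLUSTER_NOT_FOUND = re.compile(
    r"failed to start user controllers for cluster (?P<id>c-m-[a-z0-9]+): "
    r"ClusterUnavailable 503: cluster not found"
)
ASSUMED_YEAR = 2024  # assumption: klog timestamps omit the year

Event = Tuple[datetime, str, str]  # (timestamp, severity, message)


def parse_line(line: str) -> Optional[Event]:
    """Parse one log line in either format; return None for anything else."""
    m = KLOG.match(line)
    if m:
        ts = datetime.strptime(f"{ASSUMED_YEAR}{m['mmdd']} {m['hms']}", "%Y%m%d %H:%M:%S")
        return ts, m["sev"], m["rest"]
    m = BRACKET_LOG.match(line)
    if m:
        ts = datetime.strptime(f"{m['date']} {m['hms']}", "%Y/%m/%d %H:%M:%S")
        return ts, m["sev"], m["rest"]
    return None


def summarize(lines: List[str]) -> Tuple[List[Event], Counter]:
    """Sort parsed lines by timestamp and count 'cluster not found' errors per cluster ID."""
    events = sorted(e for e in map(parse_line, lines) if e is not None)
    per_cluster: Counter = Counter()
    for _, _, message in events:
        m = CLUSTER_NOT_FOUND.search(message)
        if m:
            per_cluster[m.group("id")] += 1
    return events, per_cluster


# Sample lines copied from the excerpt above, deliberately out of order.
ERR = ("error syncing '_all_': handler user-controllers-controller: "
       "userControllersController: failed to set peers for key _all_: "
       "failed to start user controllers for cluster c-m-wxhvwlnf: "
       "ClusterUnavailable 503: cluster not found, requeuing")
SAMPLE = [
    "W0519 02:19:36.105141 34 transport.go:301] Unable to cancel request for *client.addQuery",
    f"2024/05/19 02:20:02 [ERROR] {ERR}",
    "W0519 02:22:52.108637 33 transport.go:301] Unable to cancel request for *client.addQuery",
    f"2024/05/19 02:08:00 [ERROR] {ERR}",
]

if __name__ == "__main__":
    events, per_cluster = summarize(SAMPLE)
    for ts, sev, message in events:
        print(ts.strftime("%m-%d %H:%M:%S"), sev, message[:50])
    print(dict(per_cluster))  # {'c-m-wxhvwlnf': 2}
```

Applied to the full excerpt, the only cluster ID in these errors is `c-m-wxhvwlnf`, and within each stream the error repeats every two minutes.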