RKE version:

rke version v1.2.21

Docker version: (docker version, docker info)

Client: Docker Engine - Community
 Version:           20.10.21
 API version:       1.41
 Go version:        go1.18.7
 Git commit:        baeda1f
 Built:             Tue Oct 25 18:04:24 2022
 OS/Arch:           linux/amd64
 Context:           default
 Experimental:      true

Server: Docker Engine - Community
 Engine:
  Version:          20.10.21
  API version:      1.41 (minimum version 1.12)
  Go version:       go1.18.7
  Git commit:       3056208
  Built:            Tue Oct 25 18:02:38 2022
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.6.9
  GitCommit:        1c90a442489720eec95342e1789ee8a5e1b9536f
 runc:
  Version:          1.1.4
  GitCommit:        v1.1.4-0-g5fd4c4d
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0

Operating system and kernel: (cat /etc/os-release, uname -r)

5.4.224-1.el7.elrepo.x86_64

Host type and provider: (China Mobile Cloud, 移动云)

nodes:

- address: 192.168.1.7

port: "22"

internal_address: ""

role:

- controlplane

- worker

- etcd

hostname_override: ""

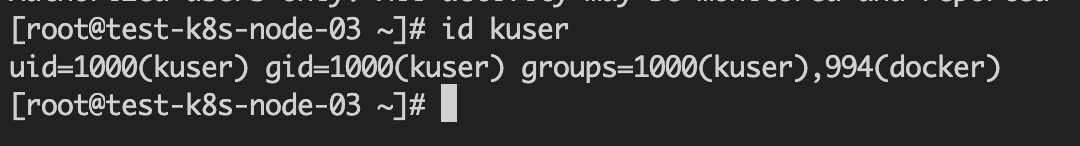

user: kuser

docker_socket: /var/run/docker.sock

ssh_key: ""

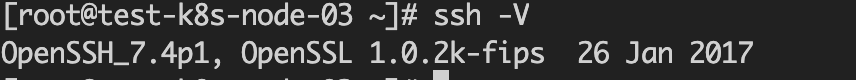

ssh_key_path: ~/.ssh/id_rsa

ssh_cert: ""

ssh_cert_path: ""

labels: {}

taints: []

- address: 192.168.1.16

port: "22"

internal_address: ""

role:

- controlplane

- worker

- etcd

hostname_override: ""

user: kuser

docker_socket: /var/run/docker.sock

ssh_key: ""

ssh_key_path: ~/.ssh/id_rsa

ssh_cert: ""

ssh_cert_path: ""

labels: {}

taints: []

- address: 192.168.1.24

port: "22"

internal_address: ""

role:

- controlplane

- worker

- etcd

hostname_override: ""

user: kuser

docker_socket: /var/run/docker.sock

ssh_key: ""

ssh_key_path: ~/.ssh/id_rsa

ssh_cert: ""

ssh_cert_path: ""

labels: {}

taints: []

services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []

network:
  plugin: canal
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []

system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.4.15-rancher1
  alpine: rancher/rke-tools:v0.1.80
  nginx_proxy: rancher/rke-tools:v0.1.80
  cert_downloader: rancher/rke-tools:v0.1.80
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.80
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.15.10
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.15.10
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.15.10
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.1
  coredns: rancher/mirrored-coredns-coredns:1.8.0
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.1
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.15.13
  kubernetes: rancher/hyperkube:v1.20.15-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.17.2
  calico_cni: rancher/mirrored-calico-cni:v3.17.2
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.17.2
  calico_ctl: rancher/mirrored-calico-ctl:v3.17.2
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.17.2
  canal_node: rancher/mirrored-calico-node:v3.17.2
  canal_cni: rancher/mirrored-calico-cni:v3.17.2
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.17.2
  canal_flannel: rancher/mirrored-coreos-flannel:v0.15.1
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.17.2
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.2.1-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.5.0
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.1.1.0.1ae238a
  aci_host_container: noiro/aci-containers-host:5.1.1.0.1ae238a
  aci_opflex_container: noiro/opflex:5.1.1.0.1ae238a
  aci_mcast_container: noiro/opflex:5.1.1.0.1ae238a
  aci_ovs_container: noiro/openvswitch:5.1.1.0.1ae238a
  aci_controller_container: noiro/aci-containers-controller:5.1.1.0.1ae238a
  aci_gbp_server_container: noiro/gbp-server:5.1.1.0.1ae238a
  aci_opflex_server_container: noiro/opflex-server:5.1.1.0.1ae238a

ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null

Steps to reproduce:

./rke up --config ./cluster.yml

Results:

ERRO[0214] Host 192.168.1.7 failed to report Ready status with error: [controlplane] Error getting node 192.168.1.7: "192.168.1.7" not found

INFO[0214] [controlplane] Now checking status of node 192.168.1.16, try #1

ERRO[0239] Host 192.168.1.16 failed to report Ready status with error: [controlplane] Error getting node 192.168.1.16: "192.168.1.16" not found

INFO[0239] [controlplane] Now checking status of node 192.168.1.24, try #1

ERRO[0264] Host 192.168.1.24 failed to report Ready status with error: [controlplane] Error getting node 192.168.1.24: "192.168.1.24" not found

INFO[0264] [controlplane] Processing controlplane hosts for upgrade 1 at a time

INFO[0264] Processing controlplane host 192.168.1.7

INFO[0264] [controlplane] Now checking status of node 192.168.1.7, try #1

ERRO[0289] Failed to upgrade hosts: 192.168.1.7 with error [[controlplane] Error getting node 192.168.1.7: "192.168.1.7" not found]

FATA[0289] [controlPlane] Failed to upgrade Control Plane: [[[controlplane] Error getting node 192.168.1.7: "192.168.1.7" not found]]
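
To see at a glance which hosts failed the Ready check in output like the above, the `ERRO` lines can be summarized with a short script. This is only a minimal sketch: the function name `failed_hosts`, the `SAMPLE_LOG` constant, and the regular expression are assumptions derived from the log lines in this report, not part of RKE, and the pattern may need adjusting for other RKE versions.

```python
import re

# Matches lines such as:
#   ERRO[0214] Host 192.168.1.7 failed to report Ready status with error: ...
# The pattern is an assumption based on the log excerpt in this report.
NOT_READY = re.compile(
    r"^ERRO\[(?P<ts>\d+)\] Host (?P<host>\S+) "
    r"failed to report Ready status with error: (?P<err>.*)$"
)


def failed_hosts(log_text):
    """Map each host that failed the Ready check to (seconds, error message).

    Only the first matching line per host is kept; hosts appear in log order.
    """
    result = {}
    for line in log_text.splitlines():
        match = NOT_READY.match(line.strip())
        if match:
            result.setdefault(
                match.group("host"),
                (int(match.group("ts")), match.group("err")),
            )
    return result


# Excerpt copied from the results in this report.
SAMPLE_LOG = '''
ERRO[0214] Host 192.168.1.7 failed to report Ready status with error: [controlplane] Error getting node 192.168.1.7: "192.168.1.7" not found
INFO[0214] [controlplane] Now checking status of node 192.168.1.16, try #1
ERRO[0239] Host 192.168.1.16 failed to report Ready status with error: [controlplane] Error getting node 192.168.1.16: "192.168.1.16" not found
INFO[0239] [controlplane] Now checking status of node 192.168.1.24, try #1
ERRO[0264] Host 192.168.1.24 failed to report Ready status with error: [controlplane] Error getting node 192.168.1.24: "192.168.1.24" not found
'''

if __name__ == "__main__":
    for host, (seconds, error) in failed_hosts(SAMPLE_LOG).items():
        print(f"{host} (at {seconds}s): {error}")
```

In practice the text would come from a saved log file, for example `failed_hosts(open("rke-up.log").read())`, where `rke-up.log` is a hypothetical file holding the captured `rke up` output.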