RKE version:

rke version v1.4.2

Docker version: (docker version, docker info)

Client:
 Version:         1.13.1
 API version:     1.26
 Package version: docker-1.13.1-209.git7d71120.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      7d71120/1.13.1
 Built:           Wed Mar 2 15:25:43 2022
 OS/Arch:         linux/amd64

Server:
 Version:         1.13.1
 API version:     1.26 (minimum version 1.12)
 Package version: docker-1.13.1-209.git7d71120.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      7d71120/1.13.1
 Built:           Wed Mar 2 15:25:43 2022
 OS/Arch:         linux/amd64
 Experimental:    false

Operating system and kernel: (cat /etc/os-release, uname -r)

CentOS Linux release 7.9.2009 (Core)

Host type and provider: (VirtualBox/Bare-metal/AWS/GCE/DO)

VMware

cluster.yml file:

nodes:
- address: 10.2.109.116
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 10.2.109.167
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 10.2.109.133
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 10.2.109.224
  port: "22"
  internal_address: ""
  role:
  - worker
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []

services:
  etcd:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []

network:
  plugin: canal
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []

authentication:
  strategy: x509
  sans: []
  webhook: null

addons: ""

addons_include: []

system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.4
  alpine: rancher/rke-tools:v0.1.88
  nginx_proxy: rancher/rke-tools:v0.1.88
  cert_downloader: rancher/rke-tools:v0.1.88
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.88
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.21.1
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.21.1
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.21.1
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  coredns: rancher/mirrored-coredns-coredns:1.9.3
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.21.1
  kubernetes: rancher/hyperkube:v1.24.9-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.22.5
  calico_cni: rancher/calico-cni:v3.22.5-rancher1
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.22.5
  calico_ctl: rancher/mirrored-calico-ctl:v3.22.5
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.5
  canal_node: rancher/mirrored-calico-node:v3.22.5
  canal_cni: rancher/calico-cni:v3.22.5-rancher1
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.22.5
  canal_flannel: rancher/mirrored-flannelcni-flannel:v0.17.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.5
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.2.1-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.6.1
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.2.3.5.1d150da
  aci_host_container: noiro/aci-containers-host:5.2.3.5.1d150da
  aci_opflex_container: noiro/opflex:5.2.3.5.1d150da
  aci_mcast_container: noiro/opflex:5.2.3.5.1d150da
  aci_ovs_container: noiro/openvswitch:5.2.3.5.1d150da
  aci_controller_container: noiro/aci-containers-controller:5.2.3.5.1d150da
  aci_gbp_server_container: noiro/gbp-server:5.2.3.5.1d150da
  aci_opflex_server_container: noiro/opflex-server:5.2.3.5.1d150da

ssh_key_path: ~/.ssh/id_rsa

ssh_cert_path: ""

ssh_agent_auth: false

authorization:
  mode: rbac
  options: {}

ignore_docker_version: null

enable_cri_dockerd: null

kubernetes_version: ""

private_registries: []

ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null

cluster_name: ""

cloud_provider:
  name: ""

prefix_path: ""

win_prefix_path: ""

addon_job_timeout: 0

bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false

monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""

restore:
  restore: false
  snapshot_name: ""

rotate_encryption_key: false

dns: null

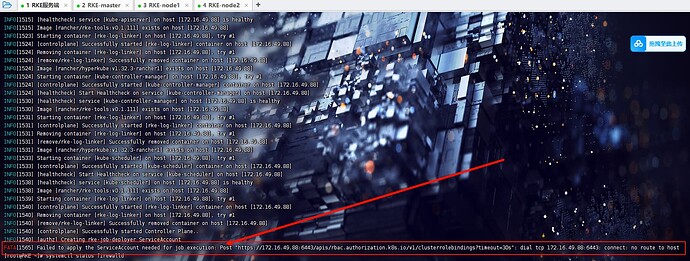

Steps to reproduce:

rke up

Result:

INFO[0000] Running RKE version: v1.4.2

INFO[0000] Initiating Kubernetes cluster

INFO[0000] [certificates] GenerateServingCertificate is disabled, checking if there are unused kubelet certificates

INFO[0000] [certificates] Generating admin certificates and kubeconfig

INFO[0000] Successfully Deployed state file at [./cluster.rkestate]

INFO[0000] Building Kubernetes cluster

INFO[0000] [dialer] Setup tunnel for host [10.2.109.133]

INFO[0000] [dialer] Setup tunnel for host [10.2.109.224]

INFO[0000] [dialer] Setup tunnel for host [10.2.109.167]

INFO[0000] [dialer] Setup tunnel for host [10.2.109.116]

WARN[0000] Failed to set up SSH tunneling for host [10.2.109.116]: Can't retrieve Docker Info: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.24/info": Unable to access node with address [10.2.109.116:22] using SSH. Please check if you are able to SSH to the node using the specified SSH Private Key and if you have configured the correct SSH username. Error: ssh: handshake failed: ssh: unable to authenticate, attempted methods [none publickey], no supported methods remain

WARN[0000] Removing host [10.2.109.116] from node lists

INFO[0000] [network] Deploying port listener containers

INFO[0000] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.224]

INFO[0002] Starting container [rke-etcd-port-listener] on host [10.2.109.224], try #1

INFO[0004] [network] Successfully started [rke-etcd-port-listener] container on host [10.2.109.224]

INFO[0004] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.224]

INFO[0004] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.133]

INFO[0004] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.167]

INFO[0004] Starting container [rke-worker-port-listener] on host [10.2.109.133], try #1

INFO[0005] [network] Successfully started [rke-worker-port-listener] container on host [10.2.109.133]

INFO[0005] Starting container [rke-worker-port-listener] on host [10.2.109.224], try #1

INFO[0005] Starting container [rke-worker-port-listener] on host [10.2.109.167], try #1

INFO[0006] [network] Successfully started [rke-worker-port-listener] container on host [10.2.109.167]

INFO[0006] [network] Successfully started [rke-worker-port-listener] container on host [10.2.109.224]

INFO[0006] [network] Port listener containers deployed successfully

INFO[0006] [network] Running control plane -> etcd port checks

INFO[0006] [network] Running control plane -> worker port checks

INFO[0006] [network] Running workers -> control plane port checks

INFO[0006] [network] Checking if host [10.2.109.167] can connect to host(s) [] on port(s) [6443], try #1

INFO[0006] [network] Checking if host [10.2.109.133] can connect to host(s) [] on port(s) [6443], try #1

INFO[0006] [network] Checking if host [10.2.109.224] can connect to host(s) [] on port(s) [6443], try #1

INFO[0006] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.133]

INFO[0006] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.224]

INFO[0006] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.167]

INFO[0007] Starting container [rke-port-checker] on host [10.2.109.133], try #1

INFO[0007] [network] Successfully started [rke-port-checker] container on host [10.2.109.133]

INFO[0007] Removing container [rke-port-checker] on host [10.2.109.133], try #1

INFO[0007] Starting container [rke-port-checker] on host [10.2.109.167], try #1

INFO[0007] [network] Successfully started [rke-port-checker] container on host [10.2.109.167]

INFO[0007] Removing container [rke-port-checker] on host [10.2.109.167], try #1

INFO[0008] Starting container [rke-port-checker] on host [10.2.109.224], try #1

INFO[0008] [network] Successfully started [rke-port-checker] container on host [10.2.109.224]

INFO[0009] Removing container [rke-port-checker] on host [10.2.109.224], try #1

INFO[0009] [network] Checking KubeAPI port Control Plane hosts

INFO[0009] [network] Removing port listener containers

INFO[0009] Removing container [rke-etcd-port-listener] on host [10.2.109.224], try #1

INFO[0010] [remove/rke-etcd-port-listener] Successfully removed container on host [10.2.109.224]

INFO[0010] Removing container [rke-worker-port-listener] on host [10.2.109.133], try #1

INFO[0010] Removing container [rke-worker-port-listener] on host [10.2.109.224], try #1

INFO[0010] Removing container [rke-worker-port-listener] on host [10.2.109.167], try #1

INFO[0010] [remove/rke-worker-port-listener] Successfully removed container on host [10.2.109.133]

INFO[0010] [remove/rke-worker-port-listener] Successfully removed container on host [10.2.109.167]

INFO[0011] [remove/rke-worker-port-listener] Successfully removed container on host [10.2.109.224]

INFO[0011] [network] Port listener containers removed successfully

INFO[0011] [certificates] Deploying kubernetes certificates to Cluster nodes

INFO[0011] Finding container [cert-deployer] on host [10.2.109.167], try #1

INFO[0011] Finding container [cert-deployer] on host [10.2.109.224], try #1

INFO[0011] Finding container [cert-deployer] on host [10.2.109.133], try #1

INFO[0011] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.224]

INFO[0011] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.167]

INFO[0011] Image [rancher/rke-tools:v0.1.88] exists on host [10.2.109.133]

INFO[0011] Starting container [cert-deployer] on host [10.2.109.133], try #1

INFO[0012] Starting container [cert-deployer] on host [10.2.109.224], try #1

INFO[0012] Starting container [cert-deployer] on host [10.2.109.167], try #1

INFO[0012] Finding container [cert-deployer] on host [10.2.109.133], try #1

INFO[0012] Finding container [cert-deployer] on host [10.2.109.167], try #1

INFO[0012] Finding container [cert-deployer] on host [10.2.109.224], try #1

INFO[0017] Finding container [cert-deployer] on host [10.2.109.133], try #1

INFO[0017] Removing container [cert-deployer] on host [10.2.109.133], try #1

INFO[0017] Finding container [cert-deployer] on host [10.2.109.167], try #1

INFO[0017] Removing container [cert-deployer] on host [10.2.109.167], try #1

INFO[0017] Finding container [cert-deployer] on host [10.2.109.224], try #1

INFO[0017] Removing container [cert-deployer] on host [10.2.109.224], try #1

INFO[0018] [certificates] Successfully deployed kubernetes certificates to Cluster nodes

INFO[0018] [/etc/kubernetes/audit-policy.yaml] Successfully deployed audit policy file to Cluster control nodes

INFO[0018] [reconcile] Reconciling cluster state

INFO[0018] [reconcile] This is newly generated cluster

INFO[0018] Pre-pulling kubernetes images

INFO[0018] Pulling image [rancher/hyperkube:v1.24.9-rancher1] on host [10.2.109.167], try #1

INFO[0018] Pulling image [rancher/hyperkube:v1.24.9-rancher1] on host [10.2.109.133], try #1

INFO[0018] Pulling image [rancher/hyperkube:v1.24.9-rancher1] on host [10.2.109.224], try #1

INFO[0067] Image [rancher/hyperkube:v1.24.9-rancher1] exists on host [10.2.109.133]

INFO[0079] Image [rancher/hyperkube:v1.24.9-rancher1] exists on host [10.2.109.224]

INFO[0079] Image [rancher/hyperkube:v1.24.9-rancher1] exists on host [10.2.109.167]

INFO[0079] Kubernetes images pulled successfully

FATA[0079] failed to initialize new kubernetes client: stat ./kube_config_cluster.yml: no such file or directory
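The two WARN lines near the top of this output are easy to miss in a long log. As a minimal sketch, assuming the `rke up` output was saved to a file, the shell function below lists every host that RKE dropped from the node lists. It is not part of RKE; the function name `removed_hosts` and the file name `rke-up.log` are hypothetical and used only for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of RKE: print each host that `rke up`
# removed from the node lists, given a file containing the saved output.
removed_hosts() {
  # Matches lines such as:
  #   WARN[0000] Removing host [10.2.109.116] from node lists
  # and prints only the address between the square brackets.
  sed -n 's/^WARN\[[0-9]*] Removing host \[\([^]]*\)] from node lists.*$/\1/p' "$1"
}

# Example (assumes the output was captured with: rke up 2>&1 | tee rke-up.log)
# removed_hosts rke-up.log
```

For the log above this would print 10.2.109.116, which is the only node with the controlplane role in cluster.yml. That is consistent with the empty `host(s) []` in the port checks, and it may be why kube_config_cluster.yml is never found. As the warning itself suggests, a manual check such as `ssh -i ~/.ssh/id_rsa rancher@10.2.109.116 docker info` should succeed before rerunning `rke up`.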