Rancher Server Setup

- Rancher version: v2.6.8

- Installation option (Docker install/Helm Chart): Helm Chart

- If Helm Chart, the type (RKE1, RKE2, k3s, EKS, etc.) and version of the Local cluster: RKE1

- Online or air-gapped deployment: Online

Downstream Cluster Information

- Kubernetes version: v1.20.10

- Cluster Type (Local/Downstream): Downstream

- If Downstream, what type of cluster? (Custom/Imported or Hosted, etc.): Custom

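User Information

- What is the role of the logged-in user? (Admin/Cluster Owner/Cluster Member/Project Owner/Project Member/Custom): Admin

- If Custom, the custom permission set: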

Host OS:

CentOS 7.9

Problem description:

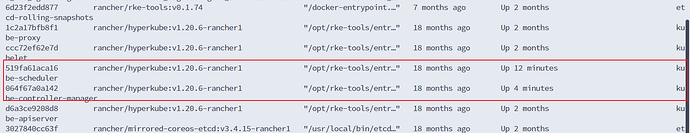

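On the RKE1 cluster, kube-scheduler and kube-controller-manager restart frequently; both services keep restarting. The local cluster has the same problem, and it occasionally causes 502 or 503 errors when accessing Rancher. The local cluster is a 3-node high-availability RKE1 cluster, and the restarts happen on all 3 nodes.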
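To put numbers on the restarts, the restart count and last exit state can be read from `docker inspect`, since RKE1 runs these components as Docker containers. The following is a minimal Python sketch, assuming the containers use the names `kube-scheduler` and `kube-controller-manager`; the helper names `summarize_inspect` and `inspect_containers` are hypothetical and only illustrate the idea.

```python
import json
import subprocess


def summarize_inspect(inspect_json):
    """Reduce `docker inspect` JSON output to the restart-related fields."""
    summary = []
    for container in json.loads(inspect_json):
        state = container.get("State", {})
        summary.append({
            "name": container.get("Name", "").lstrip("/"),
            "restart_count": container.get("RestartCount", 0),
            "exit_code": state.get("ExitCode"),
            "oom_killed": state.get("OOMKilled"),
            "started_at": state.get("StartedAt"),
        })
    return summary


def inspect_containers(names):
    """Run `docker inspect` on the node (assumes the docker CLI is on PATH)."""
    result = subprocess.run(
        ["docker", "inspect", *names],
        capture_output=True, text=True, check=True,
    )
    return summarize_inspect(result.stdout)


# Usage on a control-plane node (container names are an assumption):
#   for row in inspect_containers(["kube-scheduler", "kube-controller-manager"]):
#       print(row)
```

A non-zero `exit_code` together with `oom_killed` being false would suggest the process exited on its own rather than being killed for memory, which narrows down where to look next.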

Steps to reproduce:

Result:

Expected result:

Screenshots:

Additional context:

Logs

**controller-manager logs**

I1123 01:54:36.164600 1 flags.go:59] FLAG: --feature-gates=""

I1123 01:54:36.164639 1 flags.go:59] FLAG: --flex-volume-plugin-dir="/usr/libexec/kubernetes/kubelet-plugins/volume/exec/"

I1123 01:54:36.164661 1 flags.go:59] FLAG: --help="false"

I1123 01:54:36.164681 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-cpu-initialization-period="5m0s"

I1123 01:54:36.164700 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-downscale-delay="5m0s"

I1123 01:54:36.164719 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-downscale-stabilization="5m0s"

I1123 01:54:36.164738 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-initial-readiness-delay="30s"

I1123 01:54:36.164757 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-sync-period="15s"

I1123 01:54:36.164775 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-tolerance="0.1"

I1123 01:54:36.164809 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-upscale-delay="3m0s"

I1123 01:54:36.164828 1 flags.go:59] FLAG: --horizontal-pod-autoscaler-use-rest-clients="true"

I1123 01:54:36.164846 1 flags.go:59] FLAG: --http2-max-streams-per-connection="0"

I1123 01:54:36.164879 1 flags.go:59] FLAG: --kube-api-burst="30"

I1123 01:54:36.164893 1 flags.go:59] FLAG: --kube-api-content-type="application/vnd.kubernetes.protobuf"

I1123 01:54:36.164912 1 flags.go:59] FLAG: --kube-api-qps="20"

I1123 01:54:36.164940 1 flags.go:59] FLAG: --kubeconfig="/etc/kubernetes/ssl/kubecfg-kube-controller-manager.yaml"

I1123 01:54:36.164962 1 flags.go:59] FLAG: --large-cluster-size-threshold="50"

I1123 01:54:36.164979 1 flags.go:59] FLAG: --leader-elect="true"

I1123 01:54:36.165000 1 flags.go:59] FLAG: --leader-elect-lease-duration="15s"

I1123 01:54:36.165024 1 flags.go:59] FLAG: --leader-elect-renew-deadline="10s"

I1123 01:54:36.165045 1 flags.go:59] FLAG: --leader-elect-resource-lock="leases"

I1123 01:54:36.165101 1 flags.go:59] FLAG: --leader-elect-resource-name="kube-controller-manager"

I1123 01:54:36.165125 1 flags.go:59] FLAG: --leader-elect-resource-namespace="kube-system"

I1123 01:54:36.165146 1 flags.go:59] FLAG: --leader-elect-retry-period="2s"

I1123 01:54:36.165167 1 flags.go:59] FLAG: --log-backtrace-at=":0"

I1123 01:54:36.165199 1 flags.go:59] FLAG: --log-dir=""

I1123 01:54:36.165217 1 flags.go:59] FLAG: --log-file=""

I1123 01:54:36.165242 1 flags.go:59] FLAG: --log-file-max-size="1800"

I1123 01:54:36.165263 1 flags.go:59] FLAG: --log-flush-frequency="5s"

I1123 01:54:36.165283 1 flags.go:59] FLAG: --logging-format="text"

I1123 01:54:36.165315 1 flags.go:59] FLAG: --logtostderr="true"

I1123 01:54:36.165336 1 flags.go:59] FLAG: --master=""

I1123 01:54:36.165357 1 flags.go:59] FLAG: --max-endpoints-per-slice="100"

I1123 01:54:36.165378 1 flags.go:59] FLAG: --min-resync-period="12h0m0s"

I1123 01:54:36.165404 1 flags.go:59] FLAG: --mirroring-concurrent-service-endpoint-syncs="5"

I1123 01:54:36.165424 1 flags.go:59] FLAG: --mirroring-endpointslice-updates-batch-period="0s"

I1123 01:54:36.165439 1 flags.go:59] FLAG: --mirroring-max-endpoints-per-subset="1000"

I1123 01:54:36.165460 1 flags.go:59] FLAG: --namespace-sync-period="5m0s"

I1123 01:54:36.165480 1 flags.go:59] FLAG: --node-cidr-mask-size="0"

I1123 01:54:36.165538 1 flags.go:59] FLAG: --node-cidr-mask-size-ipv4="0"

I1123 01:54:36.165555 1 flags.go:59] FLAG: --node-cidr-mask-size-ipv6="0"

I1123 01:54:36.165577 1 flags.go:59] FLAG: --node-eviction-rate="0.1"

I1123 01:54:36.165599 1 flags.go:59] FLAG: --node-monitor-grace-period="40s"

I1123 01:54:36.165619 1 flags.go:59] FLAG: --node-monitor-period="5s"

I1123 01:54:36.165644 1 flags.go:59] FLAG: --node-startup-grace-period="1m0s"

I1123 01:54:36.165675 1 flags.go:59] FLAG: --node-sync-period="0s"

I1123 01:54:36.165687 1 flags.go:59] FLAG: --one-output="false"

I1123 01:54:36.165700 1 flags.go:59] FLAG: --permit-port-sharing="false"

I1123 01:54:36.165721 1 flags.go:59] FLAG: --pod-eviction-timeout="5m0s"

I1123 01:54:36.165746 1 flags.go:59] FLAG: --port="10252"

I1123 01:54:36.165763 1 flags.go:59] FLAG: --profiling="false"

I1123 01:54:36.165775 1 flags.go:59] FLAG: --pv-recycler-increment-timeout-nfs="30"

I1123 01:54:36.165787 1 flags.go:59] FLAG: --pv-recycler-minimum-timeout-hostpath="60"

I1123 01:54:36.165800 1 flags.go:59] FLAG: --pv-recycler-minimum-timeout-nfs="300"

I1123 01:54:36.165827 1 flags.go:59] FLAG: --pv-recycler-pod-template-filepath-hostpath=""

I1123 01:54:36.165845 1 flags.go:59] FLAG: --pv-recycler-pod-template-filepath-nfs=""

I1123 01:54:36.165862 1 flags.go:59] FLAG: --pv-recycler-timeout-increment-hostpath="30"

I1123 01:54:36.165878 1 flags.go:59] FLAG: --pvclaimbinder-sync-period="15s"

I1123 01:54:36.165906 1 flags.go:59] FLAG: --register-retry-count="10"

I1123 01:54:36.165950 1 flags.go:59] FLAG: --requestheader-allowed-names="[]"

I1123 01:54:36.165995 1 flags.go:59] FLAG: --requestheader-client-ca-file=""

I1123 01:54:36.166024 1 flags.go:59] FLAG: --requestheader-extra-headers-prefix="[x-remote-extra-]"

I1123 01:54:36.166082 1 flags.go:59] FLAG: --requestheader-group-headers="[x-remote-group]"

I1123 01:54:36.166129 1 flags.go:59] FLAG: --requestheader-username-headers="[x-remote-user]"

I1123 01:54:36.166304 1 flags.go:59] FLAG: --resource-quota-sync-period="5m0s"

I1123 01:54:36.166326 1 flags.go:59] FLAG: --root-ca-file="/etc/kubernetes/ssl/kube-ca.pem"

I1123 01:54:36.166346 1 flags.go:59] FLAG: --route-reconciliation-period="10s"

I1123 01:54:36.166366 1 flags.go:59] FLAG: --secondary-node-eviction-rate="0.01"

I1123 01:54:36.166387 1 flags.go:59] FLAG: --secure-port="10257"

I1123 01:54:36.166404 1 flags.go:59] FLAG: --service-account-private-key-file="/etc/kubernetes/ssl/kube-service-account-token-key.pem"

I1123 01:54:36.166457 1 flags.go:59] FLAG: --service-cluster-ip-range="10.43.0.0/16"

I1123 01:54:36.166474 1 flags.go:59] FLAG: --show-hidden-metrics-for-version=""

I1123 01:54:36.166487 1 flags.go:59] FLAG: --skip-headers="false"

I1123 01:54:36.166527 1 flags.go:59] FLAG: --skip-log-headers="false"

I1123 01:54:36.166549 1 flags.go:59] FLAG: --stderrthreshold="2"

I1123 01:54:36.166569 1 flags.go:59] FLAG: --terminated-pod-gc-threshold="1000"

I1123 01:54:36.166589 1 flags.go:59] FLAG: --tls-cert-file=""

I1123 01:54:36.166606 1 flags.go:59] FLAG: --tls-cipher-suites="[]"

I1123 01:54:36.166679 1 flags.go:59] FLAG: --tls-min-version=""

I1123 01:54:36.166703 1 flags.go:59] FLAG: --tls-private-key-file=""

I1123 01:54:36.166723 1 flags.go:59] FLAG: --tls-sni-cert-key="[]"

I1123 01:54:36.166785 1 flags.go:59] FLAG: --unhealthy-zone-threshold="0.55"

I1123 01:54:36.166810 1 flags.go:59] FLAG: --use-service-account-credentials="true"

I1123 01:54:36.166828 1 flags.go:59] FLAG: --v="2"

I1123 01:54:36.166852 1 flags.go:59] FLAG: --version="false"

I1123 01:54:36.166889 1 flags.go:59] FLAG: --vmodule=""

I1123 01:54:36.166916 1 flags.go:59] FLAG: --volume-host-allow-local-loopback="true"

I1123 01:54:36.166935 1 flags.go:59] FLAG: --volume-host-cidr-denylist="[]"

I1123 01:54:38.147040 1 serving.go:331] Generated self-signed cert in-memory

W1123 01:54:39.753978 1 authentication.go:307] No authentication-kubeconfig provided in order to lookup client-ca-file in configmap/extension-apiserver-authentication in kube-system, so client certificate authentication won't work.

W1123 01:54:39.754134 1 authentication.go:331] No authentication-kubeconfig provided in order to lookup requestheader-client-ca-file in configmap/extension-apiserver-authentication in kube-system, so request-header client certificate authentication won't work.

W1123 01:54:39.754327 1 authorization.go:176] No authorization-kubeconfig provided, so SubjectAccessReview of authorization tokens won't work.

I1123 01:54:39.754722 1 controllermanager.go:176] Version: v1.20.6

I1123 01:54:39.758403 1 tlsconfig.go:200] loaded serving cert ["Generated self signed cert"]: "localhost@1669168478" [serving] validServingFor=[127.0.0.1,localhost,localhost] issuer="localhost-ca@1669168476" (2022-11-23 00:54:36 +0000 UTC to 2023-11-23 00:54:36 +0000 UTC (now=2022-11-23 01:54:39.758239878 +0000 UTC))

I1123 01:54:39.758968 1 named_certificates.go:53] loaded SNI cert [0/"self-signed loopback"]: "apiserver-loopback-client@1669168479" [serving] validServingFor=[apiserver-loopback-client] issuer="apiserver-loopback-client-ca@1669168479" (2022-11-23 00:54:38 +0000 UTC to 2023-11-23 00:54:38 +0000 UTC (now=2022-11-23 01:54:39.758941284 +0000 UTC))

I1123 01:54:39.759166 1 secure_serving.go:197] Serving securely on [::]:10257

I1123 01:54:39.759793 1 deprecated_insecure_serving.go:53] Serving insecurely on [::]:10252

I1123 01:54:39.759798 1 tlsconfig.go:240] Starting DynamicServingCertificateController

I1123 01:54:39.760162 1 leaderelection.go:243] attempting to acquire leader lease kube-system/kube-controller-manager...

E1123 02:04:05.609278 1 leaderelection.go:325] error retrieving resource lock kube-system/kube-controller-manager: Get "https://127.0.0.1:6443/apis/coordination.k8s.io/v1/namespaces/kube-system/leases/kube-controller-manager?timeout=10s": net/http: request canceled (Client.Timeout exceeded while awaiting headers)
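The last entry above is the only error in this excerpt: a client-side timeout while reading the leader-election lease from the API server at `127.0.0.1:6443`. To find every such entry across the full component logs, a small filter over the klog line format can help. This is a sketch under the assumption that the logs have been saved as plain text, one entry per line; `parse_klog` and `api_timeouts` are hypothetical helper names.

```python
import re

# klog header: severity letter, MMDD, time, PID, source file:line, then "] message"
KLOG_RE = re.compile(
    r"^(?P<sev>[IWEF])(?P<date>\d{4}) (?P<time>\d{2}:\d{2}:\d{2}\.\d+)\s+"
    r"(?P<pid>\d+) (?P<src>[^\]]+)\] (?P<msg>.*)$"
)


def parse_klog(line):
    """Return the header fields and message of a klog line, or None."""
    match = KLOG_RE.match(line.strip())
    return match.groupdict() if match else None


def api_timeouts(lines):
    """Yield error/fatal entries whose message reports an HTTP client timeout."""
    for line in lines:
        entry = parse_klog(line)
        if entry and entry["sev"] in ("E", "F") and "Client.Timeout exceeded" in entry["msg"]:
            yield entry


# Usage (the file name is an assumption):
#   with open("kube-controller-manager.log") as f:
#       for e in api_timeouts(f):
#           print(e["date"], e["time"], e["src"], e["msg"][:120])
```

Comparing the timestamps of these timeouts between the controller-manager, scheduler, and kubelet logs on the same node may show whether the API server was slow for all local clients at the same moments.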

**scheduler logs**

goroutine 429 [sync.Cond.Wait, 2 minutes]:

runtime.goparkunlock(...)

/usr/local/go/src/runtime/proc.go:312

sync.runtime_notifyListWait(0xc0005ff640, 0x0)

/usr/local/go/src/runtime/sema.go:513 +0xf8

sync.(*Cond).Wait(0xc0005ff630)

/usr/local/go/src/sync/cond.go:56 +0x9d

k8s.io/kubernetes/vendor/golang.org/x/net/http2.(*pipe).Read(0xc0005ff628, 0xc00037f9c0, 0x4, 0x4, 0x0, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/golang.org/x/net/http2/pipe.go:65 +0x97

k8s.io/kubernetes/vendor/golang.org/x/net/http2.transportResponseBody.Read(0xc0005ff600, 0xc00037f9c0, 0x4, 0x4, 0x0, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/golang.org/x/net/http2/transport.go:2108 +0xaf

io.ReadAtLeast(0x7fbd1f4dc008, 0xc0005ff600, 0xc00037f9c0, 0x4, 0x4, 0x4, 0x203000, 0x203000, 0x203000)

/usr/local/go/src/io/io.go:314 +0x87

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/framer.(*lengthDelimitedFrameReader).Read(0xc000786fe0, 0xc000556000, 0x400, 0x400, 0xc000441918, 0x7fbd1f1e9b20, 0x38)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/framer/framer.go:76 +0x275

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime/serializer/streaming.(*decoder).Decode(0xc0006af630, 0x0, 0x1fd0b40, 0xc000b645c0, 0xc000441a40, 0xa, 0x2, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime/serializer/streaming/streaming.go:77 +0x89

k8s.io/kubernetes/vendor/k8s.io/client-go/rest/watch.(*Decoder).Decode(0xc000787000, 0xc000923f90, 0xc000943638, 0xc000923f80, 0xc000923f00, 0xc000923ee4, 0x3)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/client-go/rest/watch/decoder.go:49 +0x6e

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch.(*StreamWatcher).receive(0xc000b64580)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch/streamwatcher.go:104 +0x14a

created by k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch.NewStreamWatcher

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch/streamwatcher.go:71 +0xbe

goroutine 271 [sync.Cond.Wait, 2 minutes]:

runtime.goparkunlock(...)

/usr/local/go/src/runtime/proc.go:312

sync.runtime_notifyListWait(0xc0000d8300, 0x0)

/usr/local/go/src/runtime/sema.go:513 +0xf8

sync.(*Cond).Wait(0xc0000d82f0)

/usr/local/go/src/sync/cond.go:56 +0x9d

k8s.io/kubernetes/vendor/golang.org/x/net/http2.(*pipe).Read(0xc0000d82e8, 0xc0007de860, 0x4, 0x4, 0x0, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/golang.org/x/net/http2/pipe.go:65 +0x97

k8s.io/kubernetes/vendor/golang.org/x/net/http2.transportResponseBody.Read(0xc0000d82c0, 0xc0007de860, 0x4, 0x4, 0x0, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/golang.org/x/net/http2/transport.go:2108 +0xaf

io.ReadAtLeast(0x7fbd1f4dc008, 0xc0000d82c0, 0xc0007de860, 0x4, 0x4, 0x4, 0x203000, 0x203000, 0x203000)

/usr/local/go/src/io/io.go:314 +0x87

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/framer.(*lengthDelimitedFrameReader).Read(0xc000c11b20, 0xc000598000, 0x400, 0x400, 0xc00091f498, 0x7fbd1f277048, 0x38)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/framer/framer.go:76 +0x275

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime/serializer/streaming.(*decoder).Decode(0xc000c26d70, 0x0, 0x1fd0b40, 0xc000c2b300, 0xc00091f5c0, 0xa, 0x2, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime/serializer/streaming/streaming.go:77 +0x89

k8s.io/kubernetes/vendor/k8s.io/client-go/rest/watch.(*Decoder).Decode(0xc000c11b40, 0xc000595790, 0xc000438eb8, 0xc000595780, 0xc000595700, 0xc0005956e4, 0x3)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/client-go/rest/watch/decoder.go:49 +0x6e

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch.(*StreamWatcher).receive(0xc000c2b2c0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch/streamwatcher.go:104 +0x14a

created by k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch.NewStreamWatcher

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/watch/streamwatcher.go:71 +0xbe

goroutine 487 [select]:

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.BackoffUntil(0xc00070ebb0, 0x1fc48a0, 0xc000a11aa0, 0x1, 0xc00009ba40)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:167 +0x149

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.JitterUntil(0xc00070ebb0, 0x6fc23ac00, 0x0, 0x1, 0xc00009ba40)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:133 +0x98

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.Until(0xc00070ebb0, 0x6fc23ac00, 0xc00009ba40)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:90 +0x4d

created by k8s.io/kubernetes/pkg/scheduler/internal/queue.(*PriorityQueue).Run

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/pkg/scheduler/internal/queue/scheduling_queue.go:243 +0xeb

goroutine 473 [sync.Cond.Wait, 2 minutes]:

runtime.goparkunlock(...)

/usr/local/go/src/runtime/proc.go:312

sync.runtime_notifyListWait(0xc0008b22a0, 0x0)

/usr/local/go/src/runtime/sema.go:513 +0xf8

sync.(*Cond).Wait(0xc0008b2290)

/usr/local/go/src/sync/cond.go:56 +0x9d

k8s.io/kubernetes/pkg/scheduler/internal/queue.(*PriorityQueue).Pop(0xc0008b2240, 0x0, 0x0, 0x0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/pkg/scheduler/internal/queue/scheduling_queue.go:388 +0x87

k8s.io/kubernetes/pkg/scheduler/internal/queue.MakeNextPodFunc.func1(0xc00035e000)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/pkg/scheduler/internal/queue/scheduling_queue.go:810 +0x42

k8s.io/kubernetes/pkg/scheduler.(*Scheduler).scheduleOne(0xc0004806c0, 0x200f640, 0xc00077adc0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/pkg/scheduler/scheduler.go:442 +0x5a

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.JitterUntilWithContext.func1()

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:185 +0x37

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.BackoffUntil.func1(0xc000b1bf20)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:155 +0x5f

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.BackoffUntil(0xc000949f20, 0x1fc48a0, 0xc0003a5f50, 0xc00077ad01, 0xc000726f60)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:156 +0xad

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.JitterUntil(0xc000b1bf20, 0x0, 0x0, 0x18c0101, 0xc000726f60)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:133 +0x98

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.JitterUntilWithContext(0x200f640, 0xc00077adc0, 0xc000b1bf80, 0x0, 0x0, 0x1)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:185 +0xa6

k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait.UntilWithContext(...)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:99

k8s.io/kubernetes/pkg/scheduler.(*Scheduler).Run(0xc0004806c0, 0x200f640, 0xc00077adc0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/pkg/scheduler/scheduler.go:314 +0x92

k8s.io/kubernetes/cmd/kube-scheduler/app.Run.func2(0x200f640, 0xc00077adc0)

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kube-scheduler/app/server.go:202 +0x55

created by k8s.io/kubernetes/vendor/k8s.io/client-go/tools/leaderelection.(*LeaderElector).Run

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/k8s.io/client-go/tools/leaderelection/leaderelection.go:207 +0x113

goroutine 552 [runnable]:

k8s.io/kubernetes/vendor/golang.org/x/net/http2.(*Transport).getBodyWriterState.func1()

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/golang.org/x/net/http2/transport.go:2606

created by k8s.io/kubernetes/vendor/golang.org/x/net/http2.bodyWriterState.scheduleBodyWrite

/workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/golang.org/x/net/http2/transport.go:2657 +0xa6
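The scheduler excerpt above begins partway through a Go goroutine dump, so the message that triggered the dump is not visible here. When reading the complete dump, a quick tally of goroutine states can make it easier to see what the process was doing. A minimal Python sketch, assuming the dump has been saved as text; `goroutine_states` is a hypothetical helper name.

```python
import re
from collections import Counter

# Goroutine header, e.g. "goroutine 429 [sync.Cond.Wait, 2 minutes]:"
GOROUTINE_RE = re.compile(r"^goroutine (\d+) \[([^\],]+)(?:, ([^\]]+))?\]:")


def goroutine_states(dump):
    """Count goroutines in a Go stack dump by their reported state."""
    counts = Counter()
    for line in dump.splitlines():
        match = GOROUTINE_RE.match(line.strip())
        if match:
            counts[match.group(2)] += 1
    return counts


# Usage (the file name is an assumption):
#   with open("kube-scheduler.log") as f:
#       for state, n in goroutine_states(f.read()).most_common():
#           print(n, state)
```

The lines immediately before the first `goroutine` header in the full log are usually the most informative part, since they state why the process dumped its stacks.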

**kubelet logs**

W1123 01:34:14.453016 2240 empty_dir.go:520] Warning: Failed to clear quota on /var/lib/kubelet/pods/b257072c-e3a0-4301-9251-5793ff6a113d/volumes/kubernetes.io~empty-dir/tekton-internal-home: clearQuota called, but quotas disabled

I1123 01:34:14.453313 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-home" (OuterVolumeSpecName: "tekton-internal-home") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "tekton-internal-home". PluginName "kubernetes.io/empty-dir", VolumeGidValue ""

W1123 01:34:14.453935 2240 empty_dir.go:520] Warning: Failed to clear quota on /var/lib/kubelet/pods/b257072c-e3a0-4301-9251-5793ff6a113d/volumes/kubernetes.io~empty-dir/tekton-creds: clearQuota called, but quotas disabled

I1123 01:34:14.454088 2240 reconciler.go:196] operationExecutor.UnmountVolume started for volume "tekton-internal-tools" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-tools") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d")

I1123 01:34:14.454254 2240 reconciler.go:196] operationExecutor.UnmountVolume started for volume "config" (UniqueName: "kubernetes.io/configmap/b257072c-e3a0-4301-9251-5793ff6a113d-config") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d")

I1123 01:34:14.454344 2240 reconciler.go:196] operationExecutor.UnmountVolume started for volume "tekton-internal-results" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-results") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d")

W1123 01:34:14.454750 2240 empty_dir.go:520] Warning: Failed to clear quota on /var/lib/kubelet/pods/b257072c-e3a0-4301-9251-5793ff6a113d/volumes/kubernetes.io~empty-dir/tekton-internal-workspace: clearQuota called, but quotas disabled

W1123 01:34:14.456325 2240 empty_dir.go:520] Warning: Failed to clear quota on /var/lib/kubelet/pods/b257072c-e3a0-4301-9251-5793ff6a113d/volumes/kubernetes.io~empty-dir/tekton-internal-results: clearQuota called, but quotas disabled

W1123 01:34:14.457188 2240 empty_dir.go:520] Warning: Failed to clear quota on /var/lib/kubelet/pods/b257072c-e3a0-4301-9251-5793ff6a113d/volumes/kubernetes.io~empty-dir/tekton-internal-tools: clearQuota called, but quotas disabled

I1123 01:34:14.457830 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-creds" (OuterVolumeSpecName: "tekton-creds") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "tekton-creds". PluginName "kubernetes.io/empty-dir", VolumeGidValue ""

W1123 01:34:14.458332 2240 empty_dir.go:520] Warning: Failed to clear quota on /var/lib/kubelet/pods/b257072c-e3a0-4301-9251-5793ff6a113d/volumes/kubernetes.io~configmap/config: clearQuota called, but quotas disabled

I1123 01:34:14.459188 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/configmap/b257072c-e3a0-4301-9251-5793ff6a113d-config" (OuterVolumeSpecName: "config") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "config". PluginName "kubernetes.io/configmap", VolumeGidValue ""

I1123 01:34:14.459600 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-results" (OuterVolumeSpecName: "tekton-internal-results") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "tekton-internal-results". PluginName "kubernetes.io/empty-dir", VolumeGidValue ""

I1123 01:34:14.497211 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/secret/b257072c-e3a0-4301-9251-5793ff6a113d-git-credential" (OuterVolumeSpecName: "git-credential") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "git-credential". PluginName "kubernetes.io/secret", VolumeGidValue ""

I1123 01:34:14.497674 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/secret/b257072c-e3a0-4301-9251-5793ff6a113d-git-v7-2-2-token-5jcv5" (OuterVolumeSpecName: "git-v7-2-2-token-5jcv5") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "git-v7-2-2-token-5jcv5". PluginName "kubernetes.io/secret", VolumeGidValue ""

I1123 01:34:14.518593 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-workspace" (OuterVolumeSpecName: "tekton-internal-workspace") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "tekton-internal-workspace". PluginName "kubernetes.io/empty-dir", VolumeGidValue ""

I1123 01:34:14.556066 2240 reconciler.go:319] Volume detached for volume "tekton-internal-workspace" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-workspace") on node "rancher-03" DevicePath ""

I1123 01:34:14.556148 2240 reconciler.go:319] Volume detached for volume "config" (UniqueName: "kubernetes.io/configmap/b257072c-e3a0-4301-9251-5793ff6a113d-config") on node "rancher-03" DevicePath ""

I1123 01:34:14.556173 2240 reconciler.go:319] Volume detached for volume "tekton-internal-results" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-results") on node "rancher-03" DevicePath ""

I1123 01:34:14.556194 2240 reconciler.go:319] Volume detached for volume "tekton-internal-home" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-home") on node "rancher-03" DevicePath ""

I1123 01:34:14.556217 2240 reconciler.go:319] Volume detached for volume "git-credential" (UniqueName: "kubernetes.io/secret/b257072c-e3a0-4301-9251-5793ff6a113d-git-credential") on node "rancher-03" DevicePath ""

I1123 01:34:14.556237 2240 reconciler.go:319] Volume detached for volume "tekton-creds" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-creds") on node "rancher-03" DevicePath ""

I1123 01:34:14.556258 2240 reconciler.go:319] Volume detached for volume "git-v7-2-2-token-5jcv5" (UniqueName: "kubernetes.io/secret/b257072c-e3a0-4301-9251-5793ff6a113d-git-v7-2-2-token-5jcv5") on node "rancher-03" DevicePath ""

I1123 01:34:14.558439 2240 operation_generator.go:797] UnmountVolume.TearDown succeeded for volume "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-tools" (OuterVolumeSpecName: "tekton-internal-tools") pod "b257072c-e3a0-4301-9251-5793ff6a113d" (UID: "b257072c-e3a0-4301-9251-5793ff6a113d"). InnerVolumeSpecName "tekton-internal-tools". PluginName "kubernetes.io/empty-dir", VolumeGidValue ""

I1123 01:34:14.656835 2240 reconciler.go:319] Volume detached for volume "tekton-internal-tools" (UniqueName: "kubernetes.io/empty-dir/b257072c-e3a0-4301-9251-5793ff6a113d-tekton-internal-tools") on node "rancher-03" DevicePath ""

I1123 01:34:16.211040 2240 kubelet_pods.go:1243] Killing unwanted pod "v7.2.2-a601e-zhdm9"

I1123 01:34:17.089911 2240 reflector.go:225] Stopping reflector *v1.ConfigMap (0s) from object-"everybim"/"v7.2.2-config-56721cbb52cd"

I1123 01:34:17.090096 2240 reflector.go:225] Stopping reflector *v1.Secret (0s) from object-"everybim"/"git-v7.2.2-token-5jcv5"

I1123 01:34:17.090228 2240 reflector.go:225] Stopping reflector *v1.Secret (0s) from object-"everybim"/"auth-n4k74"

I1123 01:34:18.372909 2240 kubelet.go:1926] SyncLoop (UPDATE, "api"): "v7.2.2-a601e-zhdm9_everybim(b257072c-e3a0-4301-9251-5793ff6a113d)"

W1123 01:34:20.256061 2240 cni.go:333] CNI failed to retrieve network namespace path: cannot find network namespace for the terminated container "dde20f5402545093c88d8fb4d9a019d3b747dd336c1c7af3b453dbe46fb0a2a8"

I1123 01:34:20.904380 2240 kubelet.go:1957] SyncLoop (PLEG): "v7.2.2-a601e-zhdm9_everybim(b257072c-e3a0-4301-9251-5793ff6a113d)", event: &pleg.PodLifecycleEvent{ID:"b257072c-e3a0-4301-9251-5793ff6a113d", Type:"ContainerDied", Data:"dde20f5402545093c88d8fb4d9a019d3b747dd336c1c7af3b453dbe46fb0a2a8"}

W1123 01:34:20.904821 2240 pod_container_deletor.go:79] Container "dde20f5402545093c88d8fb4d9a019d3b747dd336c1c7af3b453dbe46fb0a2a8" not found in pod's containers

I1123 01:34:28.568580 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: c29f006c61828f7b9fa09d3df0a75844d68c40c3f46406ee6136b4602a1ee7c4

I1123 01:34:33.844684 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: f6aaf03792c81fae6675a76c9c1b30f0e97f2cd917890b0178f7cc4acc04927a

I1123 01:34:34.149285 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: c0767cdd8319d9e80a92d876b1ddfa04f9b88688c61f5eea829f70fd7df00156

I1123 01:34:34.985699 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: 240659b66abaed8ead280c9c17df2756d37f61ebad615bbab4e9830081ea2b86

I1123 01:34:36.718415 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: 635f7166f61f5aad73dc39bd375bd455273c691662d92f90c8ed6eba4b4d34fd

I1123 01:34:37.399783 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: 0ae294541b5876fc5355fc6b9e77e230dc9ffc414793d29bb916dfb3cfba738b

I1123 01:34:37.851327 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: ea057234715d91ff34a30794ee825016bbf7e475a4711285de194d50cbb3d1c7

I1123 01:34:38.171962 2240 scope.go:111] [topologymanager] RemoveContainer - Container ID: 16d1e5e039c6afc592cbf40534c1a983cce4fd5e07435d156f60885b921f1e28

I1123 01:34:51.252910 2240 container_manager_linux.go:490] [ContainerManager]: Discovered runtime cgroups name: /system.slice/docker.service

I1123 01:39:51.254027 2240 container_manager_linux.go:490] [ContainerManager]: Discovered runtime cgroups name: /system.slice/docker.service

I1123 01:44:51.255299 2240 container_manager_linux.go:490] [ContainerManager]: Discovered runtime cgroups name: /system.slice/docker.service

W1123 01:46:19.099253 2240 conversion.go:111] Could not get instant cpu stats: cumulative stats decrease

W1123 01:46:23.334606 2240 conversion.go:111] Could not get instant cpu stats: cumulative stats decrease

W1123 01:46:29.198481 2240 conversion.go:111] Could not get instant cpu stats: cumulative stats decrease

E1123 01:46:47.596646 2240 controller.go:187] failed to update lease, error: Put "https://127.0.0.1:6443/apis/coordination.k8s.io/v1/namespaces/kube-node-lease/leases/rancher-03?timeout=10s": net/http: request canceled (Client.Timeout exceeded while awaiting headers)

E1123 01:46:52.641643 2240 controller.go:187] failed to update lease, error: Operation cannot be fulfilled on leases.coordination.k8s.io "rancher-03": the object has been modified; please apply your changes to the latest version and try again

I1123 01:49:51.256918 2240 container_manager_linux.go:490] [ContainerManager]: Discovered runtime cgroups name: /system.slice/docker.service

W1123 01:54:45.056264 2240 conversion.go:111] Could not get instant cpu stats: cumulative stats decrease

I1123 01:54:51.258743 2240 container_manager_linux.go:490] [ContainerManager]: Discovered runtime cgroups name: /system.slice/docker.service