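Rancher Server setup

- Rancher version: 2.7.5

- Installation option (Docker install/Helm Chart):

- If installed via Helm Chart, provide the Local cluster type (RKE1, RKE2, k3s, EKS, etc.) and version:

- Online or air-gapped deployment:

Docker one-click install (I don't know how to use the other installation methods)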

Downstream cluster information

- Kubernetes version: v1.26.6+rke2r1

- Cluster Type (Local/Downstream):

- If Downstream, what type of cluster? (Custom/Imported or Hosted, etc.):

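User information

- What is the role of the logged-in user? (Admin/Cluster Owner/Cluster Member/Project Owner/Project Member/Custom):

- If Custom, the custom permission set:

Host operating system:

Ubuntu 22.04

Problem description: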

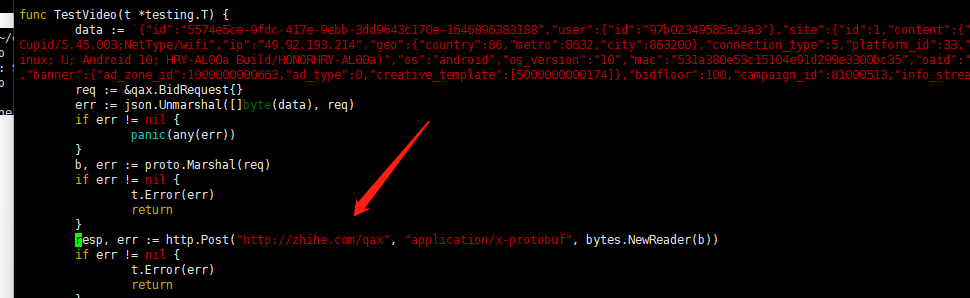

I created a Deployment (service A), a Service of type ClusterIP, and an Ingress that points to service A's Service.

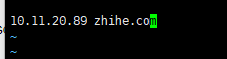

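The domain is zhihe.com. On my local machine I added a hosts entry that points zhihe.com to the server IP, but when I send requests to the test domain zhihe.com, service A receives no traffic.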
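One way to take the local hosts file out of the picture is to send the request straight to a node IP while setting the `Host` header by hand. The following is a minimal sketch using only the Python standard library. The helper names `build_ingress_probe` and `probe` are hypothetical, and it assumes that 10.11.20.89 (one of the addresses listed in the Ingress status) is the server IP the hosts entry points to, and that the Ingress is served over plain HTTP on port 80.

```python
import urllib.request


def build_ingress_probe(node_ip: str, host: str, path: str = "/") -> urllib.request.Request:
    """Build a GET request aimed at a node IP but carrying the Ingress host name.

    Hypothetical helper: the explicit Host header replaces the hosts-file entry.
    """
    url = f"http://{node_ip}{path}"
    return urllib.request.Request(url, headers={"Host": host}, method="GET")


def probe(node_ip: str, host: str, timeout: float = 5.0) -> str:
    """Send the probe and return a short description of what came back."""
    req = build_ingress_probe(node_ip, host)
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return f"HTTP {resp.status}"
    except OSError as exc:  # URLError, HTTPError and timeouts all derive from OSError
        return f"request failed: {exc}"
```

Calling `probe("10.11.20.89", "zhihe.com")` from the local machine would then show whether the ingress controller answers at all for that host name, independently of the hosts entry.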

The three configuration files are as follows:

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: '6'
    field.cattle.io/publicEndpoints: >-
      [{"addresses":["10.11.20.89","10.11.20.90","10.11.20.92"],"port":80,"protocol":"HTTP","serviceName":"engine:wisdom","ingressName":"engine:wis-ingress","hostname":"zhihe.com","path":"/","allNodes":false}]
  creationTimestamp: '2023-07-27T06:58:29Z'
  generation: 21
  labels:
    app: wis-d
    workload.user.cattle.io/workloadselector: apps.deployment-engine-wisdom
  managedFields:
    - apiVersion: apps/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            f:field.cattle.io/publicEndpoints: {}
          f:labels:
            .: {}
            f:app: {}
            f:workload.user.cattle.io/workloadselector: {}
        f:spec:
          f:progressDeadlineSeconds: {}
          f:replicas: {}
          f:revisionHistoryLimit: {}
          f:selector: {}
          f:strategy:
            f:rollingUpdate:
              .: {}
              f:maxSurge: {}
              f:maxUnavailable: {}
            f:type: {}
          f:template:
            f:metadata:
              f:annotations:
                .: {}
                f:cattle.io/timestamp: {}
              f:labels:
                .: {}
                f:app: {}
                f:workload.user.cattle.io/workloadselector: {}
              f:namespace: {}
            f:spec:
              f:containers:
                k:{"name":"container-0"}:
                  .: {}
                  f:args: {}
                  f:command: {}
                  f:image: {}
                  f:imagePullPolicy: {}
                  f:name: {}
                  f:ports:
                    .: {}
                    k:{"containerPort":8089,"protocol":"TCP"}:
                      .: {}
                      f:containerPort: {}
                      f:name: {}
                      f:protocol: {}
                    k:{"containerPort":10008,"protocol":"TCP"}:
                      .: {}
                      f:containerPort: {}
                      f:name: {}
                      f:protocol: {}
                  f:resources:
                    .: {}
                    f:limits:
                      .: {}
                      f:cpu: {}
                      f:memory: {}
                    f:requests:
                      .: {}
                      f:cpu: {}
                      f:memory: {}
                  f:securityContext:
                    .: {}
                    f:allowPrivilegeEscalation: {}
                    f:privileged: {}
                    f:readOnlyRootFilesystem: {}
                    f:runAsNonRoot: {}
                  f:stdin: {}
                  f:terminationMessagePath: {}
                  f:terminationMessagePolicy: {}
                  f:workingDir: {}
              f:dnsPolicy: {}
              f:restartPolicy: {}
              f:schedulerName: {}
              f:securityContext: {}
              f:terminationGracePeriodSeconds: {}
      manager: agent
      operation: Update
      time: '2023-07-28T02:05:29Z'
    - apiVersion: apps/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            .: {}
            f:deployment.kubernetes.io/revision: {}
        f:status:
          f:availableReplicas: {}
          f:conditions:
            .: {}
            k:{"type":"Available"}:
              .: {}
              f:lastTransitionTime: {}
              f:lastUpdateTime: {}
              f:message: {}
              f:reason: {}
              f:status: {}
              f:type: {}
            k:{"type":"Progressing"}:
              .: {}
              f:lastTransitionTime: {}
              f:lastUpdateTime: {}
              f:message: {}
              f:reason: {}
              f:status: {}
              f:type: {}
          f:observedGeneration: {}
          f:readyReplicas: {}
          f:replicas: {}
          f:updatedReplicas: {}
      manager: kube-controller-manager
      operation: Update
      subresource: status
      time: '2023-07-28T02:05:29Z'
  name: wisdom
  namespace: engine
  resourceVersion: '9147858'
  uid: ba4cfbc9-d192-4a5f-8033-d83256c1382e
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      workload.user.cattle.io/workloadselector: apps.deployment-engine-wisdom
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        cattle.io/timestamp: '2023-07-28T01:49:17Z'
      creationTimestamp: null
      labels:
        app: wis-p
        workload.user.cattle.io/workloadselector: apps.deployment-engine-wisdom
      namespace: engine
    spec:
      containers:
        - args:
            - '-cluster'
            - bj
          command:
            - /zyz/wisdom
          image: bj-ssp.optaim.com/server/wisdom:202307271824
          imagePullPolicy: IfNotPresent
          name: container-0
          ports:
            - containerPort: 10008
              name: wisdom-cip
              protocol: TCP
            - containerPort: 8089
              name: wisdom-metric
              protocol: TCP
          resources:
            limits:
              cpu: '8'
              memory: 8000Mi
            requests:
              cpu: '4'
              memory: 4000Mi
          securityContext:
            allowPrivilegeEscalation: false
            privileged: false
            readOnlyRootFilesystem: false
            runAsNonRoot: false
          stdin: true
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          workingDir: /zyz
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
    - lastTransitionTime: '2023-07-27T06:58:56Z'
      lastUpdateTime: '2023-07-27T06:58:56Z'
      message: Deployment has minimum availability.
      reason: MinimumReplicasAvailable
      status: 'True'
      type: Available
    - lastTransitionTime: '2023-07-27T06:58:29Z'
      lastUpdateTime: '2023-07-28T01:49:18Z'
      message: ReplicaSet "wisdom-64fcb4545c" has successfully progressed.
      reason: NewReplicaSetAvailable
      status: 'True'
      type: Progressing
  observedGeneration: 21
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1

apiVersion: v1
kind: Service
metadata:
  annotations:
    field.cattle.io/targetWorkloadIds: '["engine/wisdom"]'
    management.cattle.io/ui-managed: 'true'
  creationTimestamp: '2023-07-28T01:49:06Z'
  managedFields:
    - apiVersion: v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            .: {}
            f:field.cattle.io/targetWorkloadIds: {}
            f:management.cattle.io/ui-managed: {}
          f:ownerReferences:
            .: {}
            k:{"uid":"ba4cfbc9-d192-4a5f-8033-d83256c1382e"}: {}
        f:spec:
          f:internalTrafficPolicy: {}
          f:ports:
            .: {}
            k:{"port":8089,"protocol":"TCP"}:
              .: {}
              f:name: {}
              f:port: {}
              f:protocol: {}
              f:targetPort: {}
            k:{"port":10008,"protocol":"TCP"}:
              .: {}
              f:name: {}
              f:port: {}
              f:protocol: {}
              f:targetPort: {}
          f:selector: {}
          f:sessionAffinity: {}
          f:type: {}
      manager: agent
      operation: Update
      time: '2023-07-28T01:49:06Z'
  name: wisdom
  namespace: engine
  ownerReferences:
    - apiVersion: apps/v1
      controller: true
      kind: Deployment
      name: wisdom
      uid: ba4cfbc9-d192-4a5f-8033-d83256c1382e
  resourceVersion: '9141508'
  uid: a11bb92e-4512-41f2-9247-7a1d99d86e1b
spec:
  clusterIP: 10.43.173.12
  clusterIPs:
    - 10.43.173.12
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: wisdom-cip
      port: 10008
      protocol: TCP
      targetPort: 10008
    - name: wisdom-metric
      port: 8089
      protocol: TCP
      targetPort: 8089
  selector:
    workload.user.cattle.io/workloadselector: apps.deployment-engine-wisdom
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

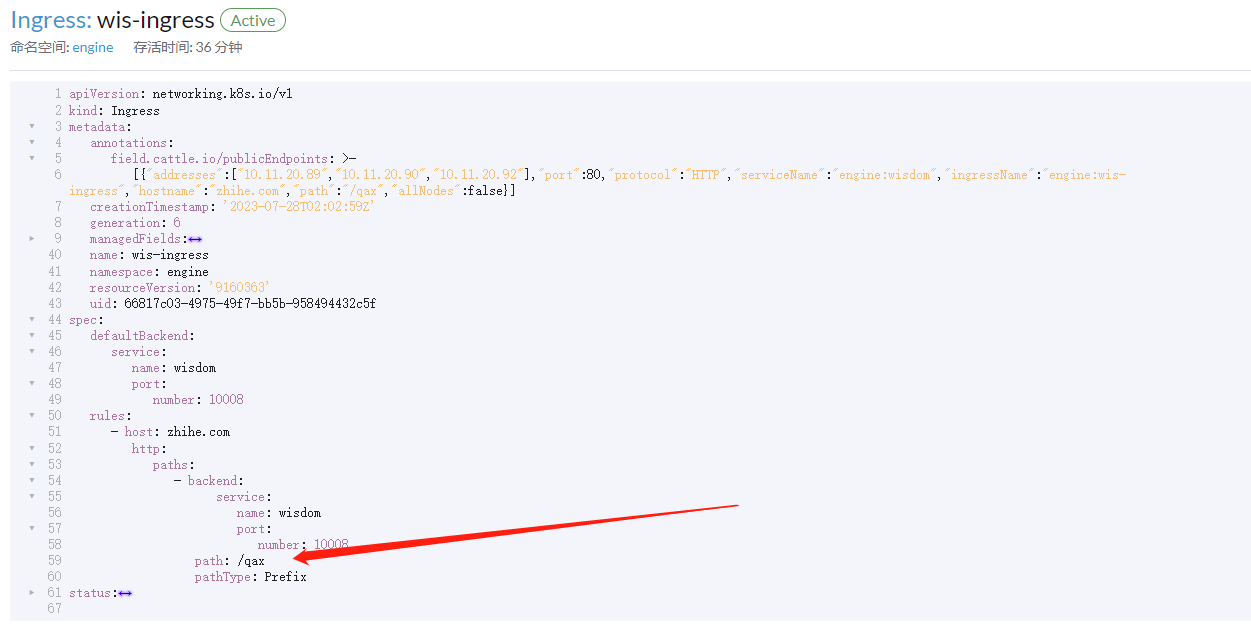

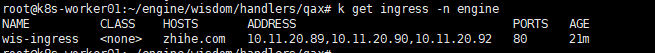

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    field.cattle.io/publicEndpoints: >-
      [{"addresses":["10.11.20.89","10.11.20.90","10.11.20.92"],"port":80,"protocol":"HTTP","serviceName":"engine:wisdom","ingressName":"engine:wis-ingress","hostname":"zhihe.com","path":"/","allNodes":false}]
  creationTimestamp: '2023-07-28T02:02:59Z'
  generation: 5
  managedFields:
    - apiVersion: networking.k8s.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:status:
          f:loadBalancer:
            f:ingress: {}
      manager: nginx-ingress-controller
      operation: Update
      subresource: status
      time: '2023-07-28T02:03:55Z'
    - apiVersion: networking.k8s.io/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            .: {}
            f:field.cattle.io/publicEndpoints: {}
        f:spec:
          f:defaultBackend:
            .: {}
            f:service:
              .: {}
              f:name: {}
              f:port:
                .: {}
                f:number: {}
          f:rules: {}
      manager: agent
      operation: Update
      time: '2023-07-28T02:05:29Z'
  name: wis-ingress
  namespace: engine
  resourceVersion: '9149058'
  uid: 66817c03-4975-49f7-bb5b-958494432c5f
spec:
  defaultBackend:
    service:
      name: wisdom
      port:
        number: 10008
  rules:
    - host: zhihe.com
      http:
        paths:
          - backend:
              service:
                name: wisdom
                port:
                  number: 10008
            path: /
            pathType: Prefix
status:
  loadBalancer:
    ingress:
      - ip: 10.11.20.89
      - ip: 10.11.20.90
      - ip: 10.11.20.92
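As a first sanity check on the three manifests, the references between them can be compared mechanically: the Service selector against the pod template labels, the Ingress backend port against the Service ports, and the Service `targetPort` against the container ports. The sketch below does this in plain Python with values copied by hand from the YAML above. The function names are hypothetical, and it only checks that the objects are wired to each other consistently, not that traffic actually flows.

```python
def selector_matches(selector: dict, pod_labels: dict) -> bool:
    """A Service selects a pod when every selector pair is present in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def find_service_port(backend_port: int, service_ports: list):
    """Return the Service port entry the Ingress backend refers to, or None."""
    for entry in service_ports:
        if entry["port"] == backend_port:
            return entry
    return None


def target_port_declared(service_port: dict, container_ports: list) -> bool:
    """Check that the Service targetPort is one of the declared container ports."""
    return service_port["targetPort"] in container_ports


# Values copied from the Deployment, Service and Ingress in this post.
pod_labels = {
    "app": "wis-p",
    "workload.user.cattle.io/workloadselector": "apps.deployment-engine-wisdom",
}
service_selector = {
    "workload.user.cattle.io/workloadselector": "apps.deployment-engine-wisdom",
}
service_ports = [
    {"name": "wisdom-cip", "port": 10008, "targetPort": 10008},
    {"name": "wisdom-metric", "port": 8089, "targetPort": 8089},
]
container_ports = [10008, 8089]
ingress_backend_port = 10008

matched_port = find_service_port(ingress_backend_port, service_ports)
print("selector matches pod labels:", selector_matches(service_selector, pod_labels))
print("ingress backend port exists on service:", matched_port is not None)
print("targetPort declared on container:",
      matched_port is not None and target_port_declared(matched_port, container_ports))
```

With these values all three checks print `True`, which suggests the references between the objects are consistent. The script says nothing about whether the container actually serves HTTP on port 10008 or whether the ingress controller is reachable on the node IPs.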

Steps to reproduce:

Result:

Expected result:

Screenshots:

Other context:

Logs